| PolarSPARC |

Introduction to Apache Drill

| Bhaskar S | 10/14/2022 |

Overview

In any typical Enterprise, data is being continuously generated by the various systems (that are spread across) in different formats (csv, json, parquet, etc) and being persisted in different data stores (nosql, relational, s3, etc). In order to make these various data sets accessible to the data analysts, engineers, and scientists, they are run thorugh a complex set of ETL (Extract, Transform, and Load) processes for storage into a big data analytics repository (aka data lake).

Rather than running a complex ETL processing plant to move the various data sets to the Enterprise lake from the different data sources, is there an easier way for users to access and perform adhoc queries or analysis on these data sets ???

This is where Apache Drill comes in handy !!!

Apache Drill is a high-performance, low-latency, open-source distributed query engine, which enables in the exploration of data that is in either structured and/or semi-structured, using the industry standard ANSI SQL query language.

It allows one to seemlessly aggregate data from various sources (including data files - csv, json, parquet) as well as data stores (hadoop, nosql, relational, s3) for querying and analysis.

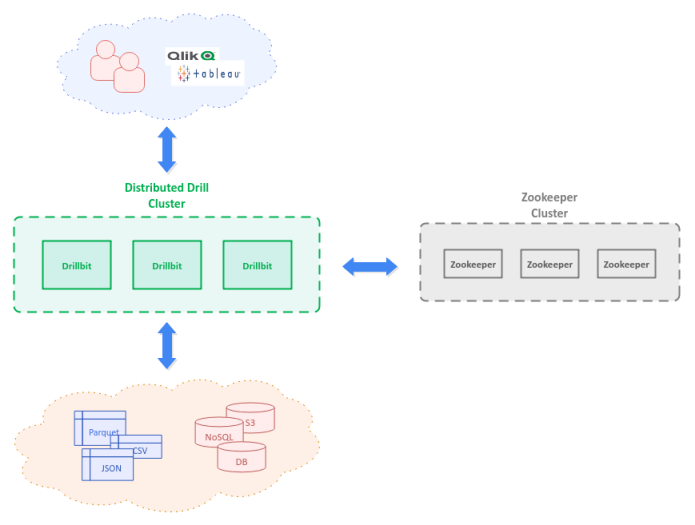

The following illustration depicts the high-level architecture for Apache Drill deployment:

The query engine of Apache Drill is referred to as the Drillbit. The distributed cluster of Drillbit nodes is managed through Zookeeper.

Requests from the user community (via query tools or BI tools) goes via the distributed Drillbit cluster, where one Drillbit node is choosen as the gateway for all requests and responses (referred to as the Foreman).

Drillbit will parse the user query, create a logical execution plan, run through the query optimizer, and finally convert to a physical plan for execution.

Installation and Setup

The installation will be on a Ubuntu 22.04 LTS based Linux desktop. Also, let us assume the logged in user be polarsparc with the home directory at /home/polarsparc. We will also assume that docker has been installed on the system.

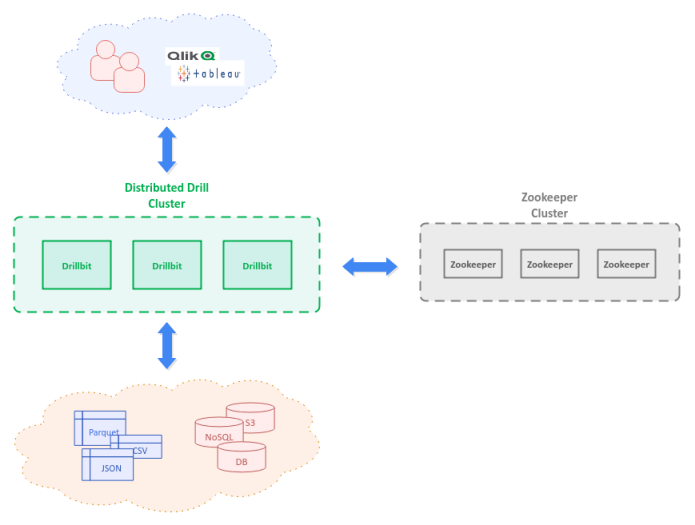

We will create a directory structure as depicted below:

To create the desired directory structure, execute the following commands:

$ mkdir -p $HOME/drill $HOME/drill/docker $HOME/drill/conf $HOME/drill/data $HOME/drill/log

$ mkdir -p $HOME/drill/data/csv/D1 $HOME/drill/data/csv/D2

$ mkdir -p $HOME/drill/data/parquet/D1 $HOME/drill/data/parquet/D2 $HOME/drill/data/parquet/D3

We will need to build a custom docker image for Apache Drill with the flexibility to run specific commands and to be able to mount volumes.

The following is the Dockerfile located in $HOME/drill/docker:

# Base OS/JDK image to use

FROM adoptopenjdk/openjdk11:ubuntu-slim

# Metadata

LABEL Version="1.0" \

Author="Bhaskar.S" \

Email="bswamina@polarsparc.com"

# Enviornment variable(s)

ENV DRILL_VERSION=1.20.2 \

DRILL_USER=drill \

DRILL_GID=1000 \

DRILL_UID=1000 \

DRILL_HOME=/opt/drill \

DRILL_PID_DIR=/opt/drill \

DRILL_LOG_DIR=/opt/drill/log \

DRILL_CONF_DIR=/opt/drill/conf \

DRILL_DATA_DIR=/data

# Install required core dependencies

RUN apt-get -qq update && \

apt-get install -y ca-certificates -qq && \

apt-get install -y wget -qq && \

apt-get clean

# Prepare Apache Drill environment - user, group, directories

RUN mkdir -p "$DRILL_HOME" "$DRILL_LOG_DIR" "$DRILL_CONF_DIR" "$DRILL_DATA_DIR" && \

groupadd -r "$DRILL_USER" --gid="$DRILL_GID" && \

useradd -r -g "$DRILL_USER" --uid="$DRILL_UID" -d "$DRILL_HOME" "$DRILL_USER" && \

chown -R "$DRILL_USER":"$DRILL_USER" "$DRILL_HOME" && \

chown -R "$DRILL_USER":"$DRILL_USER" "$DRILL_DATA_DIR"

# Install Apache Drill software

RUN wget -q "https://www.apache.org/dist/drill/$DRILL_VERSION/apache-drill-$DRILL_VERSION.tar.gz" -P /tmp && \

tar -xzf "/tmp/apache-drill-$DRILL_VERSION.tar.gz" -C /tmp && \

mv "/tmp/apache-drill-$DRILL_VERSION/"* "$DRILL_HOME" && \

rm -rf "/tmp/apache-drill-$DRILL_VERSION.tar.gz" "/tmp/apache-drill-$DRILL_VERSION"

# Set the Home directory

WORKDIR $DRILL_HOME

# Expose the desired network ports

EXPOSE 8047 31010 31011 31012

# Set the PATH variable

ENV PATH=$PATH:$DRILL_HOME/bin

# Define volume mount point(s)

VOLUME $DRILL_CONF_DIR

VOLUME $DRILL_LOG_DIR

VOLUME $DRILL_DATA_DIR

To build the cutom docker image, execute the following command:

$ docker build -t bswamina/apache-drill:1.20.2-openjdk-11 .

The following would be the typical output:

Sending build context to Docker daemon 65.42MB Step 1/12 : FROM adoptopenjdk/openjdk11:ubuntu-slim ubuntu-slim: Pulling from adoptopenjdk/openjdk11 fb0b3276a519: Pull complete 6545d0570982: Pull complete aea04a60246f: Pull complete 4834d3313655: Pull complete Digest: sha256:7fafb2739d77ab5b8a31ed13b12af7d5d0bfb90dbd88e484bd4ec8d2a34791a3 Status: Downloaded newer image for adoptopenjdk/openjdk11:ubuntu-slim ---> 24c3d508d46a Step 2/12 : LABEL Version="1.0" Author="Bhaskar.S" Email="bswamina@polarsparc.com" ---> Running in d1951aa8807c Removing intermediate container d1951aa8807c ---> f3275f626c56 Step 3/12 : ENV DRILL_VERSION=1.20.2 DRILL_USER=drill DRILL_GID=1000 DRILL_UID=1000 DRILL_HOME=/opt/drill DRILL_PID_DIR=/opt/drill DRILL_LOG_DIR=/opt/drill/log DRILL_CONF_DIR=/opt/drill/conf DRILL_DATA_DIR=/data ---> Running in 0292d7a5e273 Removing intermediate container 0292d7a5e273 ---> 7ee83052f5dd Step 4/12 : RUN apt-get -qq update && apt-get install -y ca-certificates -qq && apt-get install -y wget -qq && apt-get clean ---> Running in a6f6b3664add debconf: delaying package configuration, since apt-utils is not installed Selecting previously unselected package wget. (Reading database ... 7582 files and directories currently installed.) Preparing to unpack .../wget_1.20.3-1ubuntu2_amd64.deb ... Unpacking wget (1.20.3-1ubuntu2) ... Setting up wget (1.20.3-1ubuntu2) ... Removing intermediate container a6f6b3664add ---> 64fe00a5160d Step 5/12 : RUN mkdir -p "$DRILL_HOME" "$DRILL_LOG_DIR" "$DRILL_CONF_DIR" "$DRILL_DATA_DIR" && groupadd -r "$DRILL_USER" --gid="$DRILL_GID" && useradd -r -g "$DRILL_USER" --uid="$DRILL_UID" -d "$DRILL_HOME" "$DRILL_USER" && chown -R "$DRILL_USER":"$DRILL_USER" "$DRILL_HOME" && chown -R "$DRILL_USER":"$DRILL_USER" "$DRILL_DATA_DIR" ---> Running in a890485d08cb Removing intermediate container a890485d08cb ---> 8204557d644e Step 6/12 : RUN wget -q "https://www.apache.org/dist/drill/$DRILL_VERSION/apache-drill-$DRILL_VERSION.tar.gz" -P /tmp && tar -xzf "/tmp/apache-drill-$DRILL_VERSION.tar.gz" -C /tmp && mv "/tmp/apache-drill-$DRILL_VERSION/"* "$DRILL_HOME" && rm -rf "/tmp/apache-drill-$DRILL_VERSION.tar.gz" "/tmp/apache-drill-$DRILL_VERSION" ---> Running in e50d9a975442 Removing intermediate container e50d9a975442 ---> 5a0bca80a5d4 Step 7/12 : WORKDIR $DRILL_HOME ---> Running in 61e8365ba71b Removing intermediate container 61e8365ba71b ---> f99f64cd5dd3 Step 8/12 : EXPOSE 8047 31010 31011 31012 ---> Running in 913afc603967 Removing intermediate container 913afc603967 ---> 27e5b35764c9 Step 9/12 : ENV PATH=$PATH:$DRILL_HOME/bin ---> Running in b65837c9d0dd Removing intermediate container b65837c9d0dd ---> 62885db6dda4 Step 10/12 : VOLUME $DRILL_CONF_DIR ---> Running in 0e5158b975f0 Removing intermediate container 0e5158b975f0 ---> 1b68e4deec81 Step 11/12 : VOLUME $DRILL_LOG_DIR ---> Running in 92c467e06110 Removing intermediate container 92c467e06110 ---> a9ae6e40f187 Step 12/12 : VOLUME $DRILL_DATA_DIR ---> Running in 5dedba421c2d Removing intermediate container 5dedba421c2d ---> 4ae42602654c Successfully built 4ae42602654c Successfully tagged bswamina/apache-drill:1.20.2-openjdk-11

To list all the docker images, execute the following command:

$ docker images

The following would be the typical output:

REPOSITORY TAG IMAGE ID CREATED SIZE bswamina/apache-drill 1.20.2-openjdk-11 104e2dbc7c32 49 seconds ago 876MB adoptopenjdk/openjdk11 ubuntu-slim 6c88963921ad 3 days ago 372MB

To ensure the docker image was built right, execute the following command:

$ docker run --rm bswamina/apache-drill:1.20.2-openjdk-11 ls /opt/drill/bin

The following would be the typical output:

auto-setup.sh drill-am.sh drillbit.sh drill-conf drill-config.sh drill-embedded drill-embedded.bat drill-localhost drill-on-yarn.sh hadoop-excludes.txt runbit sqlline sqlline.bat submit_plan yarn-drillbit.sh

One can pull the docker image from the Docker Hub repository using the following command:

docker pull bswamina/apache-drill:1.20.2-openjdk-11

Now, we shift gears to setup the configuration files and the data files.

The following is the config file logback.xml that is used to control logging by Apache Drill and is located in $HOME/drill/conf:

<?xml version="1.0" encoding="UTF-8" ?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n

</pattern>

</encoder>

</appender>

<appender name="QUERY" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${log.query.path}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">

<fileNamePattern>${log.query.path}.%i</fileNamePattern>

<minIndex>1</minIndex>

<maxIndex>10</maxIndex>

</rollingPolicy>

<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">

<maxFileSize>100MB</maxFileSize>

</triggeringPolicy>

<encoder>

<pattern>%msg%n</pattern>

</encoder>

</appender>

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<file>${log.path}</file>

<rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">

<fileNamePattern>${log.path}.%i</fileNamePattern>

<minIndex>1</minIndex>

<maxIndex>10</maxIndex>

</rollingPolicy>

<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">

<maxFileSize>100MB</maxFileSize>

</triggeringPolicy>

<encoder>

<pattern>%date{ISO8601} [%thread] %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<logger name="org.apache.drill" additivity="false">

<level value="info" />

<appender-ref ref="FILE" />

</logger>

<logger name="query.logger" additivity="false">

<level value="info" />

<appender-ref ref="QUERY" />

</logger>

<root>

<level value="error" />

<appender-ref ref="STDOUT" />

</root>

</configuration>

Under the hood Apache Drill uses another framework called Apache Calcite. The following is the config file saffron.properties that is used to control the character set and is located in $HOME/drill/conf:

# # Licensed to the Apache Software Foundation (ASF) under one # or more contributor license agreements. See the NOTICE file # distributed with this work for additional information # regarding copyright ownership. The ASF licenses this file # to you under the Apache License, Version 2.0 (the # "License"); you may not use this file except in compliance # with the License. You may obtain a copy of the License at # # http://www.apache.org/licenses/LICENSE-2.0 # # Unless required by applicable law or agreed to in writing, software # distributed under the License is distributed on an "AS IS" BASIS, # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. # See the License for the specific language governing permissions and # limitations under the License. # # This properties file is used by Apache Calcite to define allowed charset in string literals, # which is by default ISO-8859-1. # Current configuration allows parsing UTF-8 by default, i.e. queries that contain utf-8 string literal. # To take affect this file should be present in classpath. saffron.default.charset=UTF-16LE saffron.default.nationalcharset=UTF-16LE saffron.default.collation.name=UTF-16LE$en_US

The following is the config file drill-override.conf that is used to configure Apache Drill related options and is located in $HOME/drill/conf:

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

drill.exec: {

cluster-id: "dev-drillbit",

zk.connect: "localhost:2181",

sys.store.provider.local.path="/opt/drill/conf"

}

Finally, the following is the config file storage-plugins-override.conf that is used to configure the various storage options in Apache Drill and is located in $HOME/drill/conf:

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

"storage": {

dfs: {

type: "file",

connection: "file:///",

workspaces: {

"data_pq": {

"location": "/data/parquet/",

"writable": false,

"defaultInputFormat": "parquet",

"allowAccessOutsideWorkspace": false

},

"data_csv": {

"location": "/data/csv/",

"writable": false,

"defaultInputFormat": "csv",

"allowAccessOutsideWorkspace": false

}

},

formats: {

"parquet": {

"type": "parquet"

},

"csv": {

"type": "text",

"extensions": [

"csv"

],

"extractHeader": true,

"delimiter": ","

}

},

enabled: true

}

}

For our demonstration, we will only leverage the data sets stored in csv and parquet formats in the local filesystem (the dfs storage).

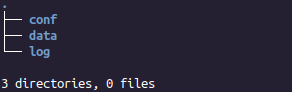

We will copy few csv and parquet files into the appropriate locations in $HOME/drill/data.

The data sets used in this article are from the following two sources: 1. Palmer Penguins 2. Device Monitoring

The following illustration depicts the various data files in the different directories:

Now, it is time to copy the following files into the appropriate destination as indicated below:

The file Penguins CSV to $HOME/drill/data/csv/D1

The file Penguins Parquet to $HOME/drill/data/parquet/D1

The file Device Info CSV to $HOME/drill/data/csv/D2

Unzip the file Device Readings CSV ZIP to $HOME/drill/data/csv/D2

The file Device Info Parquet to $HOME/drill/data/parquet/D2

The file Device Readings Parquet to $HOME/drill/data/parquet/D3

At this point, we are done with all the required setup.

To start Apache Drill in the embedded mode (single node mode), execute the following command:

$ docker run -it --rm --name drill -u $(id -u ${USER}):$(id -g ${USER}) -p 8047:8047 -p 31010:31010 -v /home/polarsparc/drill/conf:/opt/drill/conf -v /home/polarsparc/drill/log:/opt/drill/log -v /home/polarsparc/drill/data:/data bswamina/apache-drill:1.20.2-openjdk-11 /opt/drill/bin/drill-embedded

The following would be the typical output:

"A Drill is a terrible thing to waste." apache drill>

Notice the prompt changes to apache drill>.

Hands-on with Drill

To ensure Drill has started properly in the embedded mode, execute the following command:

apache drill> select version from sys.version;

The following would be the typical output:

+---------+ | version | +---------+ | 1.20.2 | +---------+ 1 row selected (1.326 seconds)

We will begin by accessing the penguins parquet file via Apache Drill. Parquet files are stored locally on the filesystem. This means we use the dfs storage plugin. Also, the parquet files are stored in the directory $HOME/drill/data/parquet which is defined under the workspace of data_pq. So, the schema to use is dfs.data_pq.

To switch to the schema dfs.data_pq, execute the following command:

apache drill> use dfs.data_pq;

The following would be the typical output:

+------+-----------------------------------------+ | ok | summary | +------+-----------------------------------------+ | true | Default schema changed to [dfs.data_pq] | +------+-----------------------------------------+ 1 row selected (0.249 seconds) apache drill (dfs.data_pq)>

Notice the prompt changes to apache drill (dfs.data_pq)>.

To display all the file systems visible to drill for query purposes, execute the following command:

apache drill (dfs.data_pq)> show files;

The following would be the typical output:

+------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ | name | isDirectory | isFile | length | owner | group | permissions | accessTime | modificationTime | +------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ | D1 | true | false | 4096 | drill | drill | rwxrwxr-x | 2022-10-14 19:16:13.325 | 2022-10-14 19:33:12.247 | | D3 | true | false | 4096 | drill | drill | rwxrwxr-x | 2022-10-14 19:18:49.013 | 2022-10-14 19:33:13.585 | | D2 | true | false | 4096 | drill | drill | rwxrwxr-x | 2022-10-14 19:21:42.013 | 2022-10-14 19:33:14.301 | +------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ 3 rows selected (0.152 seconds)

To display all the schemas available to drill for access, execute the following command:

apache drill (dfs.data_pq)> show schemas;

The following would be the typical output:

+--------------------+ | SCHEMA_NAME | +--------------------+ | dfs.data_csv | | dfs.data_pq | | dfs.default | | information_schema | | sys | | cp.default | +--------------------+ 6 rows selected (0.308 seconds)

To display the count of records in the penguins parquet file, execute the following command:

apache drill (dfs.data_pq)> select count(*) as `Count` from dfs.data_pq.`/D1/*.parquet`;

The following would be the typical output:

+-------+ | Count | +-------+ | 342 | +-------+ 1 row selected (0.777 seconds)

There is a weird bug in Apache Drill. Trying to execute the following SQL results in an error: select count(*) from dfs.data_pq.`/D1/penguins.parquet`; The generated error is: Error: VALIDATION ERROR: From line 1, column 22 to line 1, column 24: Object '/D1/penguins.parquet' not found [Error Id: 5618fe46-4615-4169-b651-55e4b007293a ] (state=,code=0)

To display all the penguin species and their body mass from the penguins parquet file where their body mass is greater than or equal to 5750, execute the following command:

apache drill (dfs.data_pq)> select `species`, `body_mass_g` from dfs.data_pq.`/D1/*.parquet` where `body_mass_g` >= 5750 order by `body_mass_g`;

The following would be the typical output:

+---------+-------------+ | species | body_mass_g | +---------+-------------+ | Gentoo | 5750.0 | | Gentoo | 5800.0 | | Gentoo | 5800.0 | | Gentoo | 5850.0 | | Gentoo | 5850.0 | | Gentoo | 5850.0 | | Gentoo | 5950.0 | | Gentoo | 5950.0 | | Gentoo | 6000.0 | | Gentoo | 6000.0 | | Gentoo | 6050.0 | | Gentoo | 6300.0 | +---------+-------------+ 12 rows selected (0.563 seconds)

To display the average body mass grouped by the penguin species from the penguins parquet file, execute the following command:

apache drill (dfs.data_pq)> select p.species, avg(p.body_mass_g) as `mean_body_mass_g` from dfs.data_pq.`/D1/*.parquet` as p group by p.species;

The following would be the typical output:

+-----------+--------------------+ | species | mean_body_mass_g | +-----------+--------------------+ | Adelie | 3700.662251655629 | | Gentoo | 5076.016260162602 | | Chinstrap | 3733.0882352941176 | +-----------+--------------------+ 3 rows selected (0.215 seconds)

Moving on to the more complex case of joining multiple sources (two parquet files from the device monitoring data set).

To display the time, the model, the cpu usage, and the memory usage on devices from the device monitoring parquet files for a limit of 10 rows, execute the following command:

apache drill (dfs.data_pq)> select `b`.`time`, `a`.`model`, `b`.`cpu_avg_5min`, `b`.`mem_used` from `/D2/*.parquet` as `a` inner join `/D3/*.parquet` as `b` on `a`.`device_id` = `b`.`device_id` limit 10;

The following would be the typical output:

+------------------------+---------+--------------+-----------+ | time | model | cpu_avg_5min | mem_used | +------------------------+---------+--------------+-----------+ | 2016-11-15 07:00:00-05 | pinto | 10.802 | 589988922 | | 2016-11-15 07:00:00-05 | mustang | 8.106 | 279257668 | | 2016-11-15 07:00:00-05 | focus | 8.2 | 350418942 | | 2016-11-15 07:00:00-05 | pinto | 5.594 | 450422930 | | 2016-11-15 07:00:00-05 | mustang | 5.13 | 370403299 | | 2016-11-15 07:00:00-05 | focus | 7.572 | 590376778 | | 2016-11-15 07:00:00-05 | pinto | 7.362 | 350314519 | | 2016-11-15 07:00:00-05 | mustang | 12.4 | 590887970 | | 2016-11-15 07:00:00-05 | focus | 7.232 | 589266926 | | 2016-11-15 07:00:00-05 | pinto | 11.014 | 460684425 | +------------------------+---------+--------------+-----------+ 10 rows selected (3.179 seconds)

There is a weird bug in Apache Drill. Trying to execute the following SQL results in an error:

select b.time, a.model, b.cpu_avg_5min, b.mem_used from `/D2/*.parquet` as a inner join `/D3/*.parquet` as b on a.device_id = b.device_id limit 10;

The generated error is:

Error: PARSE ERROR: Encountered "." at line 1, column 9.

SQL Query: select b.time, a.model, b.cpu_avg_5min, b.mem_used from `/D2/*.parquet` as a inner join `/D3/*.parquet` as b on a.device_id = b.device_id limit 10

^

[Error Id: 7e5a87f7-9dbf-4faf-b291-5e86cc26c0d7 ] (state=,code=0)

It is now time to switch gears into the csv space. To switch to the schema dfs.data_csv, execute the following command:

apache drill> use dfs.data_csv;

The following would be the typical output:

+------+------------------------------------------+ | ok | summary | +------+------------------------------------------+ | true | Default schema changed to [dfs.data_csv] | +------+------------------------------------------+ 1 row selected (0.132 seconds) apache drill (dfs.data_csv)>

Notice the prompt changes to apache drill (dfs.data_csv)>.

To display all the file systems visible to drill for query purposes, execute the following command:

apache drill (dfs.data_csv)> show files;

The following would be the typical output:

+------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ | name | isDirectory | isFile | length | owner | group | permissions | accessTime | modificationTime | +------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ | D1 | true | false | 4096 | drill | drill | rwxrwxr-x | 2022-10-14 19:42:16.223 | 2022-10-14 19:42:16.319 | | D2 | true | false | 4096 | drill | drill | rwxrwxr-x | 2022-10-14 19:42:17.738 | 2022-10-14 19:42:17.794 | +------+-------------+--------+--------+-------+-------+-------------+-------------------------+-------------------------+ 2 rows selected (0.126 seconds)

To display the penguin species and their body mass from the penguins csv file and limit the number of records to 10, execute the following command:

apache drill (dfs.data_csv)> select `species`, `body_mass_g` from `/D1/device_info.csv` limit 10;

The following would be the typical output:

+---------+-------------+ | species | body_mass_g | +---------+-------------+ | Gentoo | 5750.0 | | Gentoo | 5800.0 | | Gentoo | 5800.0 | | Gentoo | 5850.0 | | Gentoo | 5850.0 | | Gentoo | 5850.0 | | Gentoo | 5950.0 | | Gentoo | 5950.0 | | Gentoo | 6000.0 | | Gentoo | 6000.0 | | Gentoo | 6050.0 | | Gentoo | 6300.0 | +---------+-------------+ 12 rows selected (0.563 seconds)

Notice how one is able to refer to the actual data file with csv file(s). This is an issue with the parquet file(s)

Now, for the final act of joining multiple sources (a csv file and a parquet file from the device monitoring data set).

To display the time, the model, the cpu usage, and the memory usage on devices from the device info parquet file and the device readings csv file for a limit of 10 rows, execute the following command:

apache drill (dfs.data_csv)> select `b`.`time`, `a`.`model`, `b`.`cpu_avg_5min`, `b`.`mem_used` from dfs.`/data/parquet/D2/device_info.parquet` as `a` inner join dfs.`/data/csv/D2/device_readings.csv` as `b` on `a`.`device_id` = `b`.`device_id` limit 10;

The following would be the typical output:

+------------------------+---------+--------------+-----------+ | time | model | cpu_avg_5min | mem_used | +------------------------+---------+--------------+-----------+ | 2016-11-15 07:00:00-05 | pinto | 10.802 | 589988922 | | 2016-11-15 07:00:00-05 | mustang | 8.106 | 279257668 | | 2016-11-15 07:00:00-05 | focus | 8.2 | 350418942 | | 2016-11-15 07:00:00-05 | pinto | 5.594 | 450422930 | | 2016-11-15 07:00:00-05 | mustang | 5.13 | 370403299 | | 2016-11-15 07:00:00-05 | focus | 7.572 | 590376778 | | 2016-11-15 07:00:00-05 | pinto | 7.362 | 350314519 | | 2016-11-15 07:00:00-05 | mustang | 12.4 | 590887970 | | 2016-11-15 07:00:00-05 | focus | 7.232 | 589266926 | | 2016-11-15 07:00:00-05 | pinto | 11.014 | 460684425 | +------------------------+---------+--------------+-----------+ 10 rows selected (0.48 seconds)

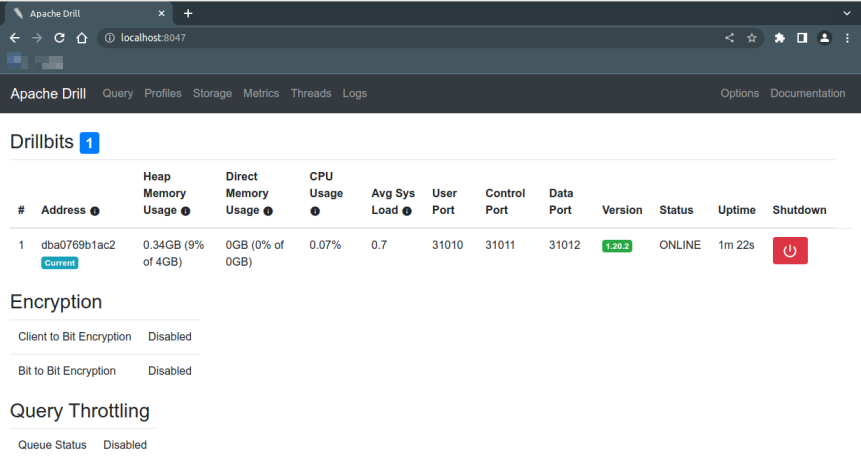

One can access the web interface of Apache Drill by launching the url http://localhost:8047 in a web browser.

The following illustration depicts the Apache Drill web interface:

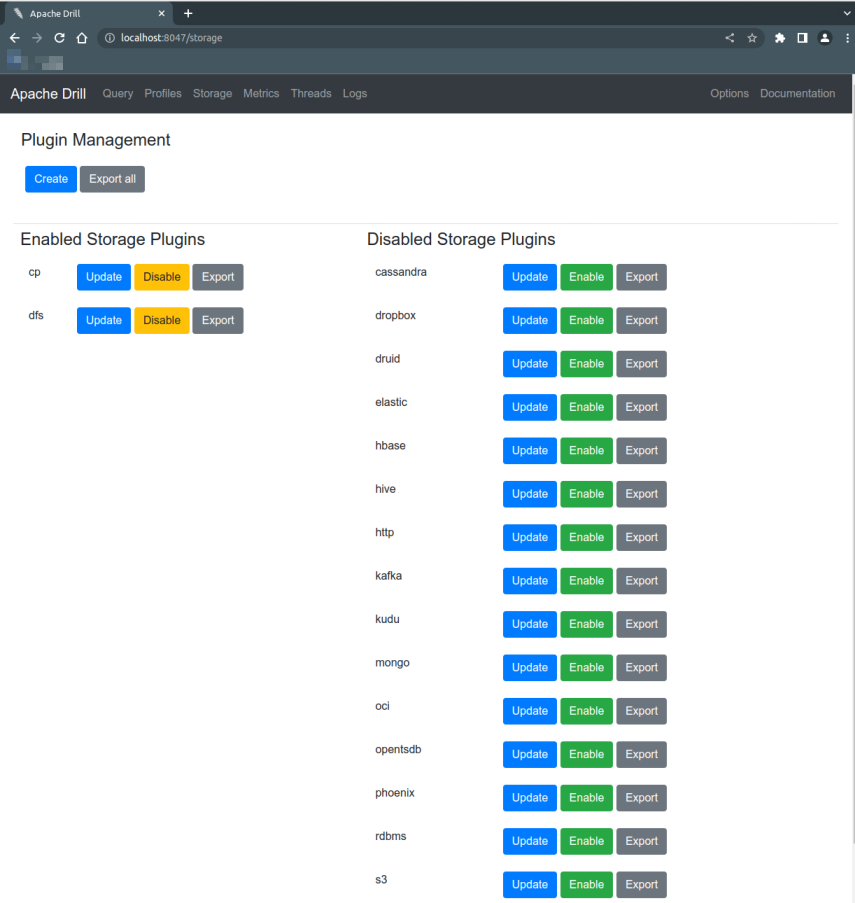

Clicking on the Storage option from the navigation bar will land one in the storage options page as shown in the illustration below:

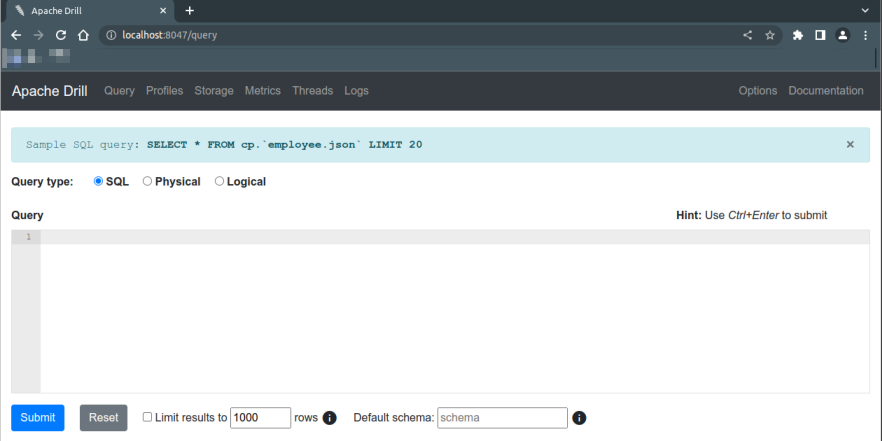

Clicking on the Query option from the navigation bar will land one in the query execution page as shown in the illustration below:

To quit from the drill command-line query interface, execute the following command:

apache drill (dfs.data_csv)> !quit

The following is the link to the Github Repo that provides the config and data files used in this article:

References