Download the latest stable version of Hadoop 1.x from the project site at hadoop.apache.org.

At the time of writing, the current stable 1.x release is 1.2.1.

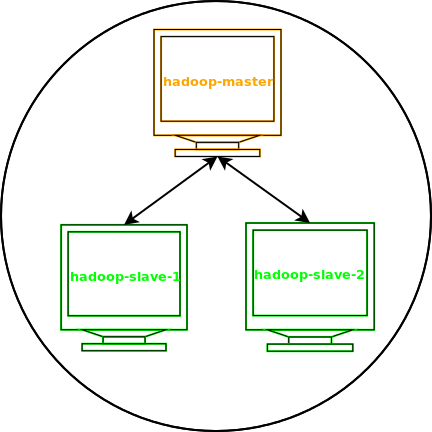

We will install Hadoop on a 3-node cluster running Ubuntu 13.10. The three nodes are named as follows:

- hadoop-master
- hadoop-slave-1
- hadoop-slave-2

The following diagram illustrates our 3-node Hadoop cluster:

The following steps install and set up Hadoop on all three nodes in the cluster:

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Ensure Java SE 6 or above is installed.

In my case, OpenJDK 7 (Java SE 7) was installed in the directory /usr/lib/jvm/default-jvm.
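One quick way to confirm this on each node is to ask the shell whether a `java` executable is on the `PATH` and print its version banner. This is only a sketch: `JAVA_FOUND` is a variable name introduced here for illustration, and the check does not verify that the version is 6 or above.

```shell
# Check that a Java runtime is reachable on this node.
if command -v java >/dev/null 2>&1; then
    JAVA_FOUND=yes
    # java prints its version banner on stderr, so merge it into stdout
    java -version 2>&1 | head -n 1
else
    JAVA_FOUND=no
    echo "java not found: install a JDK (Java SE 6 or above) before continuing"
fi
```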

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Ensure openssh-server is installed. If it is not, install it with the following command:

sudo apt-get install openssh-server

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Ensure the user hadoop exists. If it does not, create the user with the following commands:

sudo groupadd hadoop

sudo useradd -g hadoop hadoop

sudo passwd hadoop

sudo usermod -a -G sudo hadoop

sudo mkdir -p /home/hadoop

sudo chown -R hadoop:hadoop /home/hadoop

sudo usermod -d /home/hadoop -s /bin/bash hadoop
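Because the commands above are only needed when the account is missing, the check itself can be scripted. A minimal sketch, assuming the standard `id` utility is available; `USER_NAME` and `USER_STATE` are illustrative variable names, not part of the original setup:

```shell
USER_NAME=hadoop

if id "$USER_NAME" >/dev/null 2>&1; then
    USER_STATE=exists
    # Show the account's uid, primary group, and supplementary groups
    id "$USER_NAME"
else
    USER_STATE=missing
    echo "user $USER_NAME is missing: create it with the commands above"
fi
```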

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Log in as user hadoop. You should be in the home directory /home/hadoop.

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Extract the downloaded package hadoop-1.2.1-bin.tar.gz under the home directory /home/hadoop. The extracted package will be in the sub-directory hadoop-1.2.1.

Execute the following command:

export HADOOP_PREFIX=/home/hadoop/hadoop-1.2.1
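As a sketch, the extraction and the export can be done together. This assumes the archive was downloaded to the hadoop user's home directory, which the text does not state; adjust `ARCHIVE` (an illustrative variable) if it is elsewhere. Note that `export` only affects the current shell session, so the variable has to be set again in each new session.

```shell
ARCHIVE="$HOME/hadoop-1.2.1-bin.tar.gz"   # assumed download location

if [ -f "$ARCHIVE" ]; then
    # -x extract, -z gunzip, -f archive file, -C target directory
    tar -xzf "$ARCHIVE" -C "$HOME"
else
    echo "archive not found at $ARCHIVE"
fi

# For the hadoop user, $HOME is /home/hadoop
export HADOOP_PREFIX="$HOME/hadoop-1.2.1"
echo "HADOOP_PREFIX=$HADOOP_PREFIX"
```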

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Hadoop uses ssh between the nodes in the cluster, so we need to set up password-less ssh access. To do this, generate an ssh key pair on each node and authorize its public key locally. Execute the following commands:

ssh-keygen -t rsa -P ""

cat /home/hadoop/.ssh/id_rsa.pub >> /home/hadoop/.ssh/authorized_keys
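With its default `StrictModes` setting, sshd ignores `authorized_keys` when the file or the `.ssh` directory is writable by other users, so it can be worth tightening the permissions after appending the key. A minimal sketch; `secure_ssh_dir` is a helper name introduced here for illustration:

```shell
# Restrict an ssh directory and its authorized_keys file to the owner.
secure_ssh_dir() {
    dir="$1"
    chmod 700 "$dir" || return 1
    if [ -f "$dir/authorized_keys" ]; then
        chmod 600 "$dir/authorized_keys" || return 1
    fi
}

# Apply to the current user's ssh directory if it exists on this node.
if [ -d "$HOME/.ssh" ]; then
    secure_ssh_dir "$HOME/.ssh"
fi
```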

- On hadoop-master:

The node hadoop-master needs password-less access to hadoop-slave-1 and hadoop-slave-2. For this we need to distribute the ssh public key of hadoop-master to the other two nodes. Execute the following commands:

ssh-copy-id -i /home/hadoop/.ssh/id_rsa.pub hadoop@hadoop-slave-1

ssh-copy-id -i /home/hadoop/.ssh/id_rsa.pub hadoop@hadoop-slave-2
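Once the keys are distributed, it helps to confirm that the login really works without a prompt. The sketch below uses the ssh option `BatchMode=yes`, which makes ssh fail rather than ask for a password; `check_passwordless_ssh` is a helper name introduced here, and the 5-second timeout is an arbitrary choice.

```shell
# Try a non-interactive login to each given node as user hadoop.
# Prints one line per node and returns non-zero if any login failed.
check_passwordless_ssh() {
    failed=0
    for node in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$node" true; then
            echo "$node: OK"
        else
            echo "$node: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

On hadoop-master, calling `check_passwordless_ssh hadoop-slave-1 hadoop-slave-2` should report `OK` for both nodes if the previous commands succeeded.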

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Add the node hadoop-master to the configuration file $HADOOP_PREFIX/conf/masters. For this execute the following command:

echo 'hadoop-master' > $HADOOP_PREFIX/conf/masters

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Add the nodes hadoop-slave-1 and hadoop-slave-2 to the configuration file $HADOOP_PREFIX/conf/slaves. For this execute the following commands:

echo 'hadoop-slave-1' > $HADOOP_PREFIX/conf/slaves

echo 'hadoop-slave-2' >> $HADOOP_PREFIX/conf/slaves

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Edit the file $HADOOP_PREFIX/conf/hadoop-env.sh using any text editor.

Uncomment the line that begins with # export JAVA_HOME= and change it to look like export JAVA_HOME=/usr/lib/jvm/default-jvm

Next, uncomment the line that begins with # export HADOOP_OPTS= and change it to look like export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true
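The same two edits can be applied non-interactively with sed, which is convenient when repeating the step on three nodes. This is a sketch, not the only way to do it: `configure_hadoop_env` is a helper name introduced here, it assumes the commented lines start with `# export` as described above, and it assumes the Java path contains no `|` or `&` characters.

```shell
# Uncomment and set JAVA_HOME and HADOOP_OPTS in a hadoop-env.sh file.
# A backup of the original file is kept next to it with a .bak suffix.
configure_hadoop_env() {
    env_file="$1"
    java_home="$2"
    sed -i.bak \
        -e "s|^# *export JAVA_HOME=.*|export JAVA_HOME=$java_home|" \
        -e "s|^# *export HADOOP_OPTS=.*|export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true|" \
        "$env_file"
}
```

For the layout used in this guide, the call would be `configure_hadoop_env "$HADOOP_PREFIX/conf/hadoop-env.sh" /usr/lib/jvm/default-jvm`.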

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Edit the file $HADOOP_PREFIX/conf/core-site.xml using any text editor.

The contents should look like the following (the property goes inside the file's top-level configuration element):

Contents of $HADOOP_PREFIX/conf/core-site.xml

<property>
  <name>fs.default.name</name>
  <value>hdfs://hadoop-master:54310</value>
  <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description>
</property>

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Edit the file $HADOOP_PREFIX/conf/mapred-site.xml using any text editor.

The contents should look like the following (the property goes inside the file's top-level configuration element):

Contents of $HADOOP_PREFIX/conf/mapred-site.xml

<property>
  <name>mapred.job.tracker</name>
  <value>hadoop-master:54311</value>
  <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task.</description>
</property>

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Edit the file $HADOOP_PREFIX/conf/hdfs-site.xml using any text editor.

The contents should look like the following (the property goes inside the file's top-level configuration element):

Contents of $HADOOP_PREFIX/conf/hdfs-site.xml

<property>
  <name>dfs.replication</name>
  <value>2</value>
  <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time.</description>
</property>
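In Hadoop 1.x each of these site files is an XML document whose root element is `configuration`, with the properties nested inside it. Since all three files in this guide hold a single property, a small shell helper can generate them. This is a sketch: `write_site_file` is a name introduced here, it overwrites the target file, and it leaves out the optional description elements.

```shell
# Write a Hadoop site file holding a single property.
# Arguments: target file, property name, property value.
write_site_file() {
    file="$1"
    name="$2"
    value="$3"
    cat > "$file" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>$name</name>
    <value>$value</value>
  </property>
</configuration>
EOF
}

# Example calls for the three files in this guide:
#   write_site_file "$HADOOP_PREFIX/conf/core-site.xml"   fs.default.name    hdfs://hadoop-master:54310
#   write_site_file "$HADOOP_PREFIX/conf/mapred-site.xml" mapred.job.tracker hadoop-master:54311
#   write_site_file "$HADOOP_PREFIX/conf/hdfs-site.xml"   dfs.replication    2
```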

- On hadoop-master, hadoop-slave-1, and hadoop-slave-2:

Modify the PATH environment variable by executing the following command:

export PATH=.:$PATH:$HADOOP_PREFIX/bin
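Running that export repeatedly, for example from a shell startup file, appends the same entry each time. A sketch of an idempotent variant follows. It falls back to the install location used in this guide when `HADOOP_PREFIX` is unset, and it leaves out the leading `.` entry, which is a simplification made here rather than something the original setup requires.

```shell
# Fall back to the install location used in this guide if unset.
HADOOP_PREFIX="${HADOOP_PREFIX:-/home/hadoop/hadoop-1.2.1}"

# Append $HADOOP_PREFIX/bin only when it is not already on the PATH.
case ":$PATH:" in
    *":$HADOOP_PREFIX/bin:"*) ;;
    *) export PATH="$PATH:$HADOOP_PREFIX/bin" ;;
esac
```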

- On hadoop-master:

As with any filesystem, the Hadoop Distributed File System (HDFS) must be formatted before first use.

To do that, execute the following command:

hadoop namenode -format
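Formatting discards any existing HDFS metadata, so it can be useful to check first whether the NameNode directory already holds a formatted image. The sketch below makes two assumptions: that neither dfs.name.dir nor hadoop.tmp.dir has been overridden (this guide does not set them), so the NameNode directory is the Hadoop 1.x default under /tmp, and that a formatted directory contains a `current/VERSION` file. `namenode_is_formatted` and `NAME_DIR` are names introduced here.

```shell
# Return success if the given NameNode directory already holds metadata.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Assumed default location when dfs.name.dir and hadoop.tmp.dir are unset.
NAME_DIR="/tmp/hadoop-$(id -un)/dfs/name"

if namenode_is_formatted "$NAME_DIR"; then
    echo "NameNode already formatted at $NAME_DIR; skipping format"
else
    echo "No NameNode metadata at $NAME_DIR; safe to run: hadoop namenode -format"
fi
```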