We will first install Hadoop 2.x on an Ubuntu 14.04 LTS desktop for development and testing purposes.

The following steps install and set up Hadoop 2.x on a single node (localhost):

-

Ensure openssh-server is installed. If it is not, install it with the following command:

sudo apt-get install openssh-server

-

Hadoop uses ssh to access its node(s), so we need to set up password-less ssh access. To do that, generate an ssh key pair on each node and add its public key to the list of authorized keys. Execute the following commands:

ssh-keygen -t rsa -P ""

cat /home/abc/.ssh/id_rsa.pub >> /home/abc/.ssh/authorized_keys
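Re-running the cat command above appends a duplicate entry each time, and sshd with its default StrictModes setting can ignore an authorized_keys file whose permissions are too open. The following is a minimal sketch of a repeatable version of that step, assuming a POSIX shell; the function name authorize_key is hypothetical and not part of OpenSSH:

```shell
# Append the public key to authorized_keys only if it is not already there,
# then tighten permissions. authorize_key is a hypothetical helper name.
authorize_key() {
    ssh_dir="${1:-$HOME/.ssh}"          # defaults to ~/.ssh
    pub="$ssh_dir/id_rsa.pub"
    auth="$ssh_dir/authorized_keys"
    [ -f "$pub" ] || { echo "missing $pub" >&2; return 1; }
    touch "$auth"
    # -x whole-line match, -F fixed string, -f read the pattern from the key file
    grep -qxFf "$pub" "$auth" || cat "$pub" >> "$auth"
    chmod 700 "$ssh_dir"
    chmod 600 "$auth"
}
```

After calling authorize_key, running ssh localhost should log in without asking for a password.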

-

Ensure Java SE 7 or above is installed.

We installed Oracle Java SE 8 on our desktop by issuing the following commands:

sudo add-apt-repository ppa:webupd8team/java

sudo apt-get update

sudo apt-get install oracle-java8-installer

sudo apt-get install oracle-java8-set-default
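To confirm that the installed Java meets the Java SE 7 minimum, one option is to extract the major version from the version string. This is only a sketch; java_major is a hypothetical helper name, and it assumes version strings shaped like 1.8.0_25 (the legacy 1.x scheme) or 11.0.2:

```shell
# Print the major Java version for a given version string.
# java_major is a hypothetical helper, not a JDK tool.
java_major() {
    v="$1"
    case "$v" in
        1.*) v="${v#1.}" ;;   # legacy scheme: 1.8.0_25 means Java 8
    esac
    # Keep everything before the first '.', '_' or '-'
    echo "${v%%[._-]*}"
}
```

A possible use is java_major "$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')", which should print 7 or higher before continuing.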

-

Download the latest stable version of Hadoop 2.x from the project site at hadoop.apache.org.

At the time of writing, the current stable 2.x release is 2.6.0.

Extract the downloaded package hadoop-2.6.0.tar.gz under a desired directory location (for example, /home/abc/products). The extracted package will be in the sub-directory hadoop-2.6.0.
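The extraction step can be sketched as a small shell function. The name extract_to is hypothetical; the tarball name and target directory are the ones used in this article:

```shell
# Extract a .tar.gz archive into a target directory, creating it if needed.
# extract_to is a hypothetical helper name.
extract_to() {
    tarball="$1"
    target="$2"
    mkdir -p "$target" && tar -xzf "$tarball" -C "$target"
}
```

For example, extract_to hadoop-2.6.0.tar.gz /home/abc/products would leave the package in /home/abc/products/hadoop-2.6.0.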

-

Edit the .bashrc file in the home directory /home/abc, add the following lines to the end of the file, and save the changes:

export HADOOP_PREFIX=/home/abc/products/hadoop-2.6.0

export HADOOP_HOME=/home/abc/products/hadoop-2.6.0

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=.:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

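The new variables only take effect in a new terminal or after running source ~/.bashrc. As a quick sanity check, the sketch below reports any of the key variables that are still unset; check_hadoop_env is a hypothetical helper name, and the list of variables it checks is an illustrative subset of the exports above:

```shell
# Report which of the expected Hadoop variables are unset or empty.
# check_hadoop_env is a hypothetical helper name.
check_hadoop_env() {
    missing=0
    for name in HADOOP_HOME HADOOP_CONF_DIR YARN_CONF_DIR; do
        eval "value=\${$name:-}"      # indirect lookup of the variable
        if [ -z "$value" ]; then
            echo "$name is not set" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

If check_hadoop_env returns without printing anything, the settings are visible in the current shell.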

-

Create a base data directory $HADOOP_HOME/data.

Also, create a logs directory $HADOOP_HOME/logs.

-

Edit the file $HADOOP_CONF_DIR/hadoop-env.sh using any text editor.

Find the line that begins with export HADOOP_OPTS= and change it to:

export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djava.library.path=$HADOOP_HOME/lib/native"

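The same edit can be scripted instead of made by hand. The following is a sketch using sed, under the assumption that hadoop-env.sh contains exactly one line starting with export HADOOP_OPTS=; set_hadoop_opts is a hypothetical helper name, and a .bak backup of the file is kept:

```shell
# Replace the HADOOP_OPTS line in hadoop-env.sh, keeping a .bak backup.
# set_hadoop_opts is a hypothetical helper name.
set_hadoop_opts() {
    env_file="$1"
    # Single quotes keep $HADOOP_HOME literal so it is expanded when
    # hadoop-env.sh is sourced, not when this function runs.
    new_line='export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djava.library.path=$HADOOP_HOME/lib/native"'
    # '|' is the sed delimiter because the replacement contains '/'
    sed -i.bak "s|^export HADOOP_OPTS=.*|$new_line|" "$env_file"
}
```

It would be called as set_hadoop_opts "$HADOOP_CONF_DIR/hadoop-env.sh".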

-

Edit the file $HADOOP_CONF_DIR/core-site.xml using any text editor.

The contents should look like the following:

Contents of $HADOOP_CONF_DIR/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/abc/products/hadoop-2.6.0/data</value>
  </property>
</configuration>

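For a scripted setup, the file can also be generated rather than edited by hand. The sketch below writes a complete core-site.xml, including the configuration root element that Hadoop expects around the properties; write_core_site is a hypothetical helper name and both of its arguments are illustrative:

```shell
# Write a minimal core-site.xml into conf_dir, pointing hadoop.tmp.dir
# at data_dir. write_core_site is a hypothetical helper name.
write_core_site() {
    conf_dir="$1"
    data_dir="$2"
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$data_dir</value>
  </property>
</configuration>
EOF
}
```

With the settings from this article, the call would be write_core_site "$HADOOP_CONF_DIR" "$HADOOP_HOME/data".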

-

Edit the file $HADOOP_CONF_DIR/hdfs-site.xml using any text editor.

The contents should look like the following:

Contents of $HADOOP_CONF_DIR/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.permissions.superusergroup</name>
    <value>abc</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>localhost:50070</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>localhost:50090</value>
  </property>
</configuration>


-

Edit the file $HADOOP_CONF_DIR/yarn-site.xml using any text editor.

The contents should look like the following:

Contents of $HADOOP_CONF_DIR/yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>localhost</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>


-

Copy the file $HADOOP_CONF_DIR/mapred-site.xml.template to $HADOOP_CONF_DIR/mapred-site.xml.

Edit the file $HADOOP_CONF_DIR/mapred-site.xml using any text editor.

The contents should look like the following:

Contents of $HADOOP_CONF_DIR/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>localhost:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>localhost:19888</value>
  </property>
</configuration>

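With four configuration files edited by hand, a typo in a property name or value is easy to miss. The sketch below prints the value stored for a given property name so the files can be spot-checked. It is a simple text match, not a real XML parser, and get_property is a hypothetical helper name:

```shell
# Print the <value> that directly follows <name>NAME</name> in a
# Hadoop *-site.xml file. get_property is a hypothetical helper name.
get_property() {
    xml_file="$1"
    prop_name="$2"
    # Join all lines first so that one-line and multi-line layouts both match
    tr -d '\n' < "$xml_file" |
        sed -n "s|.*<name>$prop_name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}
```

For example, get_property "$HADOOP_CONF_DIR/mapred-site.xml" mapreduce.framework.name should print yarn. Hadoop's own hdfs getconf -confKey fs.defaultFS command reports the value that Hadoop itself resolves for a key, which is the more authoritative check once the environment is set up.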

-

Like any filesystem, the Hadoop Distributed File System (HDFS) must be formatted before it is used for the first time.

To do that, execute the following command:

hdfs namenode -format

The following is the typical output:

Output.1

14/12/24 16:12:47 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = localhost/127.0.0.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.6.0
STARTUP_MSG: classpath = /home/abc/products/hadoop-2.6.0/etc/hadoop:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/xz-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-el-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-codec-1.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jets3t-0.9.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/log4j-1.2.17.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/httpcore-4.2.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jersey-core-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-math3-3.1.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/curator-recipes-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/asm-3.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jsch-0.1.42.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jetty-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-net-3.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jersey-server-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jsp-api-2.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jersey-json-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jettison-1.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/curator-client-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/hamcrest-core-1.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/xmlenc-0.52.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/hadoop-auth-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/junit-4.11.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/httpclient-4.2.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/zookeeper-3.4.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/paranamer-2.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-digester-1.8.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-lang-2.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/curator-framework-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/guava-11.0.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/servlet-api-2.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/htrace-core-3.0.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/avro-1.7.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-logging-1.1.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/activation-1.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-cli-1.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/commons-io-2.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/hadoop-annotations-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/gson-2.2.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/lib/stax-api-1.0-2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0-tests.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/hadoop-common-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/common/hadoop-nfs-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/asm-3.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/lib/commons-io-2.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0-tests.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/hdfs/hadoop-hdfs-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/xz-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-codec-1.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/asm-3.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-client-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jetty-6.1.26.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/javax.inject-1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/guice-3.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-server-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jline-0.9.94.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jersey-json-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jettison-1.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-lang-2.6.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/guava-11.0.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/servlet-api-2.5.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/activation-1.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-cli-1.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/commons-io-2.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-common-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-common-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-api-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-client-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-registry-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-3.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/junit-4.11.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar:/home/abc/products/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.0-tests.jar:/home/abc/products/hadoop-2.6.0/contrib/capacity-scheduler/*.jar
STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z
STARTUP_MSG: java = 1.8.0_25
************************************************************/
14/12/24 16:12:47 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
14/12/24 16:12:47 INFO namenode.NameNode: createNameNode [-format]
Formatting using clusterid: CID-f9e2be28-f785-4ec8-9d80-638fbf43de96
14/12/24 16:12:48 INFO namenode.FSNamesystem: No KeyProvider found.
14/12/24 16:12:48 INFO namenode.FSNamesystem: fsLock is fair:true
14/12/24 16:12:48 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
14/12/24 16:12:48 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
14/12/24 16:12:48 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
14/12/24 16:12:48 INFO blockmanagement.BlockManager: The block deletion will start around 2014 Dec 29 16:12:48
14/12/24 16:12:48 INFO util.GSet: Computing capacity for map BlocksMap
14/12/24 16:12:48 INFO util.GSet: VM type = 64-bit
14/12/24 16:12:48 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
14/12/24 16:12:48 INFO util.GSet: capacity = 2^21 = 2097152 entries
14/12/24 16:12:48 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
14/12/24 16:12:48 INFO blockmanagement.BlockManager: defaultReplication = 1
14/12/24 16:12:48 INFO blockmanagement.BlockManager: maxReplication = 512
14/12/24 16:12:48 INFO blockmanagement.BlockManager: minReplication = 1
14/12/24 16:12:48 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
14/12/24 16:12:48 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
14/12/24 16:12:48 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
14/12/24 16:12:48 INFO blockmanagement.BlockManager: encryptDataTransfer = false
14/12/24 16:12:48 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
14/12/24 16:12:48 INFO namenode.FSNamesystem: fsOwner = abc (auth:SIMPLE)
14/12/24 16:12:48 INFO namenode.FSNamesystem: supergroup = abc
14/12/24 16:12:48 INFO namenode.FSNamesystem: isPermissionEnabled = true
14/12/24 16:12:48 INFO namenode.FSNamesystem: HA Enabled: false
14/12/24 16:12:48 INFO namenode.FSNamesystem: Append Enabled: true
14/12/24 16:12:49 INFO util.GSet: Computing capacity for map INodeMap
14/12/24 16:12:49 INFO util.GSet: VM type = 64-bit
14/12/24 16:12:49 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
14/12/24 16:12:49 INFO util.GSet: capacity = 2^20 = 1048576 entries
14/12/24 16:12:49 INFO namenode.NameNode: Caching file names occuring more than 10 times
14/12/24 16:12:49 INFO util.GSet: Computing capacity for map cachedBlocks
14/12/24 16:12:49 INFO util.GSet: VM type = 64-bit
14/12/24 16:12:49 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
14/12/24 16:12:49 INFO util.GSet: capacity = 2^18 = 262144 entries
14/12/24 16:12:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
14/12/24 16:12:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
14/12/24 16:12:49 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
14/12/24 16:12:49 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
14/12/24 16:12:49 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
14/12/24 16:12:49 INFO util.GSet: Computing capacity for map NameNodeRetryCache
14/12/24 16:12:49 INFO util.GSet: VM type = 64-bit
14/12/24 16:12:49 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
14/12/24 16:12:49 INFO util.GSet: capacity = 2^15 = 32768 entries
14/12/24 16:12:49 INFO namenode.NNConf: ACLs enabled? false
14/12/24 16:12:49 INFO namenode.NNConf: XAttrs enabled? true
14/12/24 16:12:49 INFO namenode.NNConf: Maximum size of an xattr: 16384
14/12/24 16:12:49 INFO namenode.FSImage: Allocated new BlockPoolId: BP-666096283-127.0.1.1-1419887569411
14/12/24 16:12:49 INFO common.Storage: Storage directory /home/abc/products/hadoop-2.6.0/data/dfs/name has been successfully formatted.
14/12/24 16:12:49 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
14/12/24 16:12:49 INFO util.ExitUtil: Exiting with status 0
14/12/24 16:12:49 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at sri-sharada/127.0.1.1
************************************************************/

This command must be executed *ONLY ONCE*. Running it again on an existing installation erases the HDFS metadata stored by the NameNode.
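Since formatting a second time wipes the NameNode metadata, a setup script may want to guard the command. The sketch below skips the format when the name directory already holds a current/VERSION file, which the NameNode writes when it formats its storage directory. format_once is a hypothetical helper name, and the default path assumes the hadoop.tmp.dir setting used in this article:

```shell
# Format HDFS only if the NameNode storage directory is not yet formatted.
# format_once is a hypothetical helper name.
format_once() {
    name_dir="${1:-$HADOOP_HOME/data/dfs/name}"
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "already formatted: $name_dir"
        return 0
    fi
    hdfs namenode -format
}
```

Calling format_once with no arguments checks $HADOOP_HOME/data/dfs/name, the storage directory reported in the output above.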

-

Open up a Terminal window and execute the following command to start the NameNode:

hadoop-daemon.sh start namenode

The following is the typical output:

Output.2

starting namenode, logging to /home/abc/products/hadoop-2.6.0/logs/hadoop-hadoop-namenode-localhost.out

NOTE :: To stop the NameNode, execute the following command:

hadoop-daemon.sh stop namenode

Next, we need to start the DataNode.

To do that, execute the following command:

hadoop-daemon.sh start datanode

The following is the typical output:

Output.3

starting datanode, logging to /home/abc/products/hadoop-2.6.0/logs/hadoop-hadoop-datanode-localhost.out

NOTE :: To stop the DataNode, execute the following command:

hadoop-daemon.sh stop datanode
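Besides looking at the jps listing by eye, the check can be scripted. The following sketch reads jps output on standard input and reports any expected daemon that does not appear in it. missing_daemons is a hypothetical helper name, and it assumes the usual jps layout of a process id followed by the class name:

```shell
# Read `jps` output on stdin and report which named daemons are absent.
# missing_daemons is a hypothetical helper name.
missing_daemons() {
    jps_out=$(cat)
    status=0
    for daemon in "$@"; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode
        if ! printf '%s\n' "$jps_out" | grep -qw "$daemon"; then
            echo "not running: $daemon"
            status=1
        fi
    done
    return "$status"
}
```

After starting both daemons, jps | missing_daemons NameNode DataNode should print nothing and return 0.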

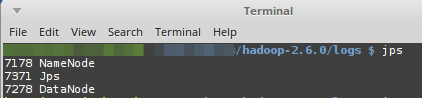

The following screenshot shows the output of executing the jps command:

jps on desktop

-

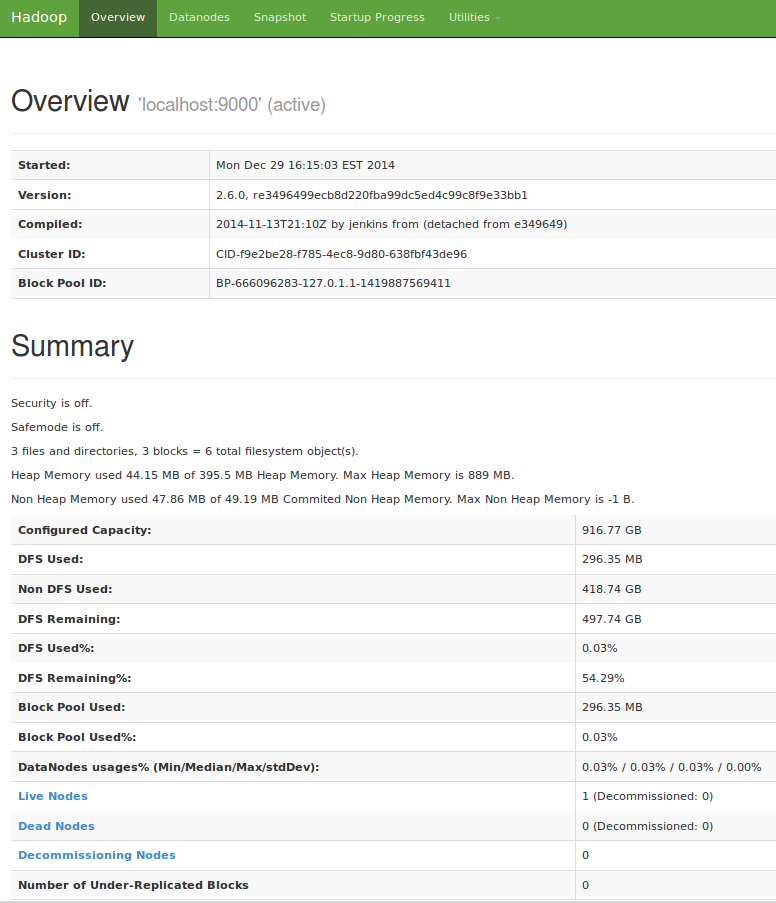

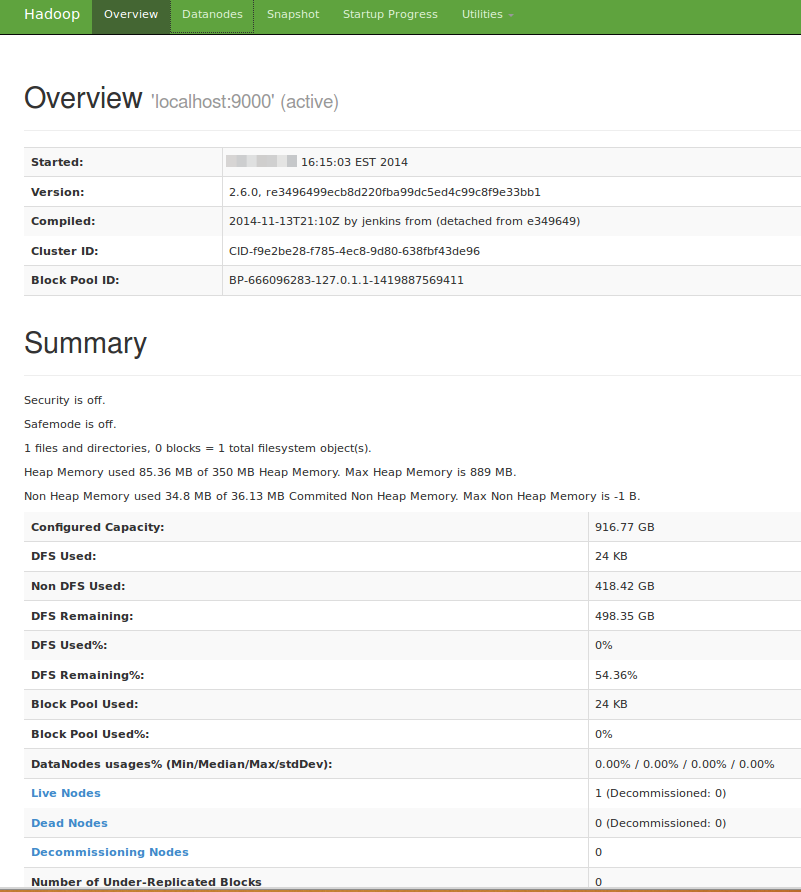

The following screenshot shows the web browser pointing to the NameNode URL http://localhost:50070:

Browse NameNode (localhost)

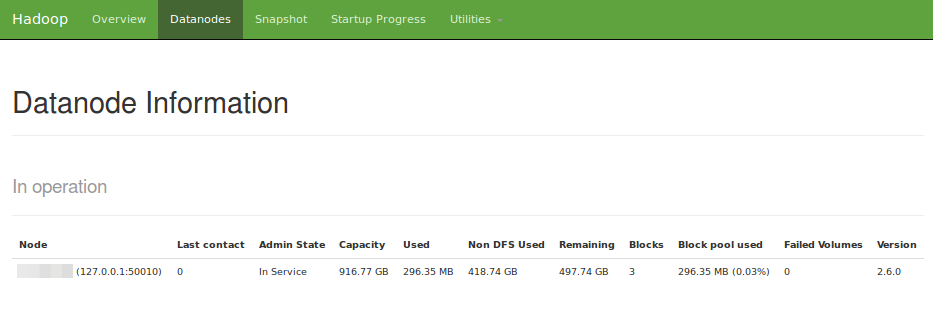

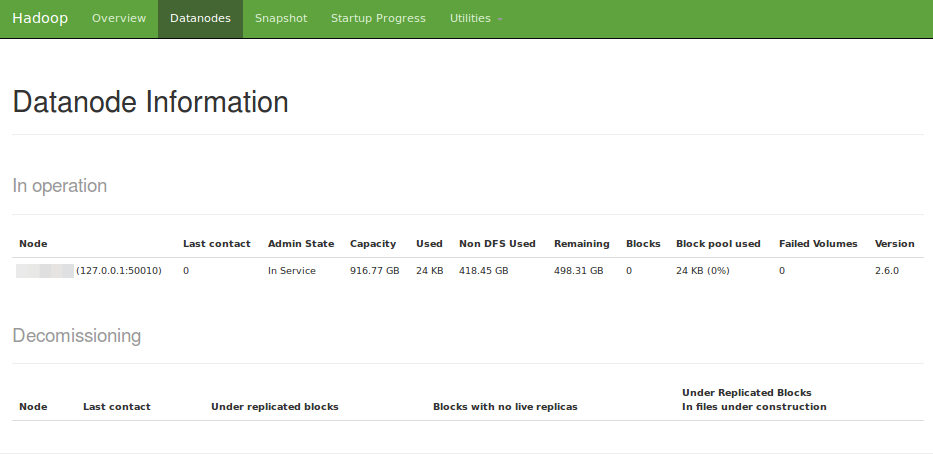

The following screenshot shows the result of clicking on the Datanodes tab:

Datanode Information (localhost)

-

NOTE :: One can start all the HDFS daemons (NameNode, SecondaryNameNode, and DataNode) by executing a single command as follows:

start-dfs.sh

To stop all of these HDFS daemons, execute the following command:

stop-dfs.sh

-

Now, we need to start the ResourceManager.

To do that, execute the following command:

yarn-daemon.sh start resourcemanager

The following is the typical output:

Output.4

starting resourcemanager, logging to /home/abc/products/hadoop-2.6.0/logs/yarn-hadoop-resourcemanager-localhost.out

NOTE :: To stop the ResourceManager, execute the following command:

yarn-daemon.sh stop resourcemanager

Next, we need to start the NodeManager.

To do that, execute the following command:

yarn-daemon.sh start nodemanager

The following is the typical output:

Output.5

starting nodemanager, logging to /home/abc/products/hadoop-2.6.0/logs/yarn-hadoop-nodemanager-localhost.out

NOTE :: To stop the NodeManager, execute the following command:

yarn-daemon.sh stop nodemanager

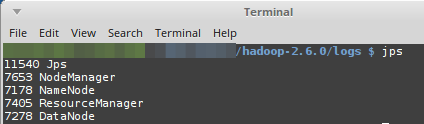

The following screenshot shows the output of executing the jps command:

jps on desktop

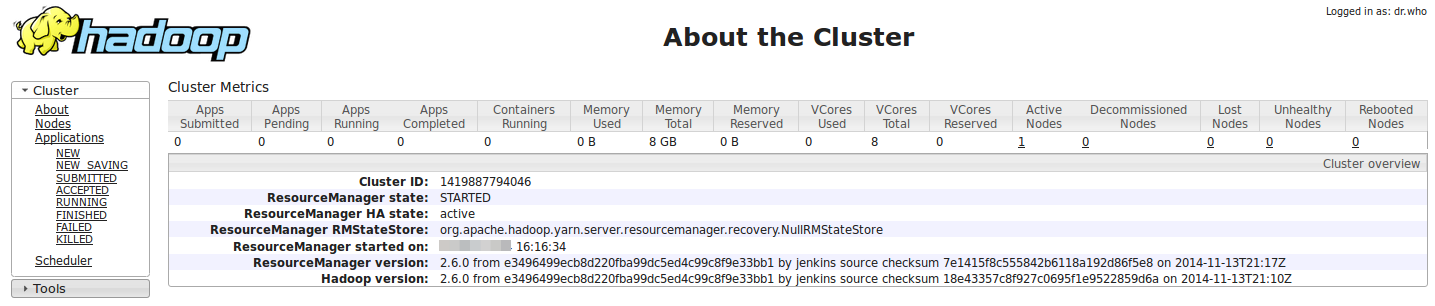

The following screenshot shows the web browser pointing to the YARN Cluster URL http://localhost:8088/cluster:

YARN Cluster (localhost)

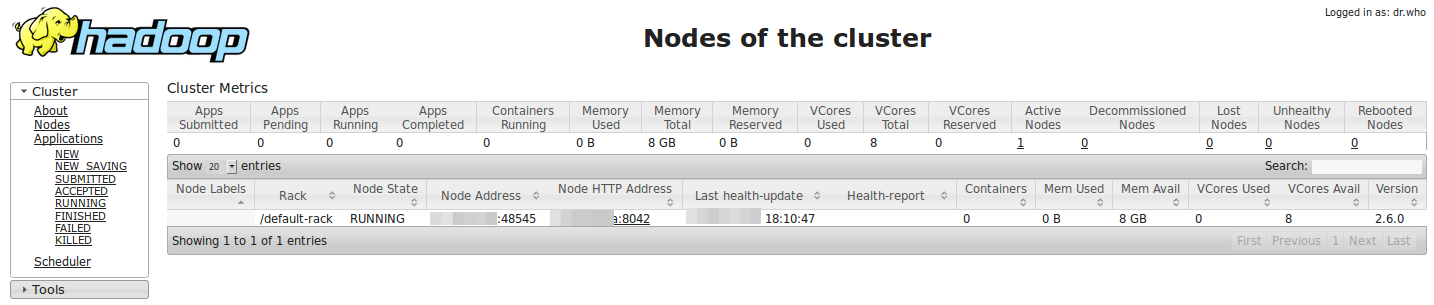

The following screenshot shows the web browser showing the Nodes of the YARN Cluster through the URL http://localhost:8088/cluster/nodes:

Nodes of YARN

-

NOTE :: One can start all the above-mentioned YARN daemons (ResourceManager and NodeManager) by executing a single command as follows:

start-yarn.sh

To stop all the above-mentioned YARN daemons, execute the following command:

stop-yarn.sh
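The HDFS and YARN scripts can be combined into one pair of helpers. This is a sketch only: cluster_start, cluster_stop, run and the DRY_RUN variable are hypothetical names introduced here, and stopping YARN before HDFS is an assumed convention (the reverse of the start order used in this article), not something the scripts enforce:

```shell
# Run a command, or just print it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Start HDFS first, then YARN, as in the steps above.
cluster_start() { run start-dfs.sh && run start-yarn.sh; }

# Stop in the reverse order (an assumed convention).
cluster_stop() { run stop-yarn.sh && run stop-dfs.sh; }
```

Setting DRY_RUN=1 before calling cluster_start prints the two commands without touching any daemon, which is a cheap way to review the order.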