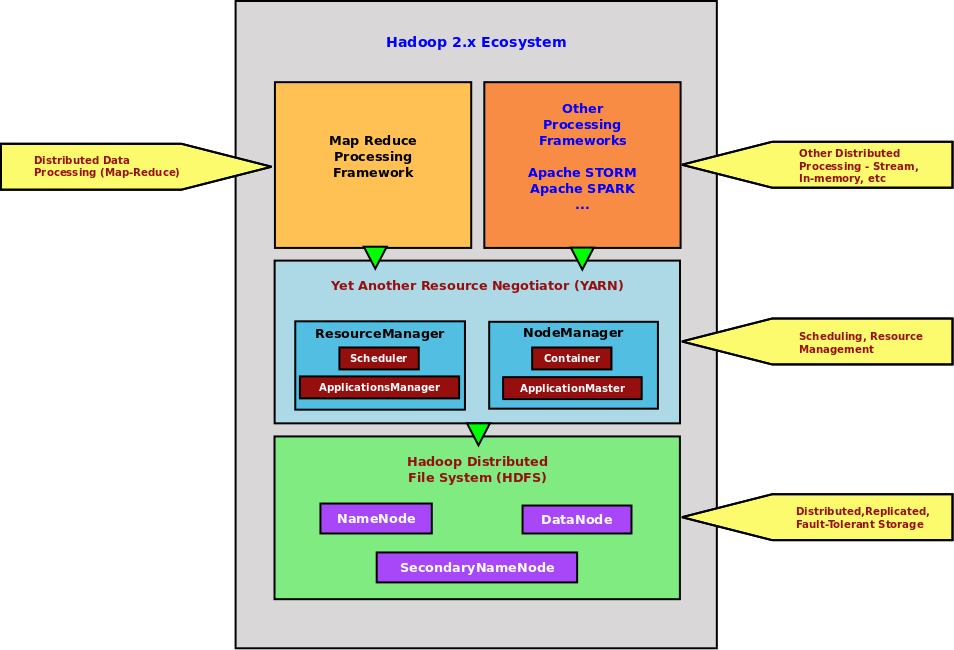

The ResourceManager is the ultimate authority that governs the entire cluster and manages the assignment of applications to the underlying cluster resources.

There is one active instance of the ResourceManager, and it typically runs on a master node of the cluster.

The ResourceManager high-availability (HA) feature allows two separate nodes in the cluster to run ResourceManagers, with one in the active state and the other in passive standby mode.
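
To make the active/standby arrangement concrete, here is a minimal Python sketch, assuming a toy in-memory lock in place of a real coordination service (real deployments typically coordinate automatic failover through ZooKeeper). The names `ElectionLock`, `ResourceManagerNode`, and `fail_over` are hypothetical, and `rm1`/`rm2` are illustrative node IDs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ElectionLock:
    """Hypothetical stand-in for a shared coordination service such as ZooKeeper."""
    holder: Optional[str] = None

    def try_acquire(self, node_id: str) -> bool:
        # At most one node may hold the lock; re-acquiring your own lock succeeds.
        if self.holder is None:
            self.holder = node_id
            return True
        return self.holder == node_id

    def release(self, node_id: str) -> None:
        # Only the current holder can release the lock.
        if self.holder == node_id:
            self.holder = None


@dataclass
class ResourceManagerNode:
    node_id: str
    state: str = "standby"

    def try_become_active(self, lock: ElectionLock) -> None:
        # Whoever holds the lock is active; everyone else stays in standby.
        self.state = "active" if lock.try_acquire(self.node_id) else "standby"


def fail_over(failed: ResourceManagerNode, standbys: List[ResourceManagerNode],
              lock: ElectionLock) -> None:
    """Simulate the active ResourceManager crashing and a standby taking over."""
    failed.state = "failed"
    # In a ZooKeeper-based setup, the crashed node's expired session would free the lock.
    lock.release(failed.node_id)
    for node in standbys:
        node.try_become_active(lock)


lock = ElectionLock()
rm1, rm2 = ResourceManagerNode("rm1"), ResourceManagerNode("rm2")
rm1.try_become_active(lock)
rm2.try_become_active(lock)
print(rm1.state, rm2.state)  # active standby
fail_over(rm1, [rm2], lock)
print(rm1.state, rm2.state)  # failed active
```

The lock guarantees that at most one node is active at any moment, which is the property HA depends on; a production setup additionally has to fence the old active node so it cannot keep acting after losing the lock.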

The ResourceManager consists of two main components - the Scheduler and the ApplicationsManager.

The Scheduler is responsible for allocating resources to the various running applications based on their resource requirements. It performs its scheduling function based on the abstract notion of a resource Container, which incorporates elements such as CPU and memory. Early versions of YARN supported only memory; later versions also account for CPU (virtual cores).
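
The following Python sketch illustrates the Container abstraction as a small resource vector and contrasts memory-only accounting with accounting that also checks CPU. It is a simplified toy: `Resource`, `fits`, and `allocate` are hypothetical names, and first-fit placement is a stand-in chosen for brevity, not the policy any YARN scheduler actually uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Resource:
    memory_mb: int
    vcores: int


def fits(request: Resource, free: Resource, memory_only: bool) -> bool:
    """Return True if `request` fits into the node's `free` capacity."""
    if request.memory_mb > free.memory_mb:
        return False
    # Memory-only accounting ignores CPU entirely.
    return memory_only or request.vcores <= free.vcores


def allocate(requests: List[Resource], nodes: Dict[str, Resource],
             memory_only: bool = False) -> List[Tuple[int, Optional[str]]]:
    """First-fit placement of container requests onto nodes.

    `nodes` maps node name -> free capacity and is updated in place.
    Under memory-only accounting, free vcores may go negative (CPU overcommit).
    """
    placements = []
    for i, req in enumerate(requests):
        chosen = None
        for name, free in nodes.items():
            if fits(req, free, memory_only):
                nodes[name] = Resource(free.memory_mb - req.memory_mb,
                                       free.vcores - req.vcores)
                chosen = name
                break
        placements.append((i, chosen))
    return placements


requests = [Resource(2048, 4), Resource(2048, 4)]
print(allocate(requests, {"node1": Resource(8192, 4)}, memory_only=True))
# [(0, 'node1'), (1, 'node1')]
print(allocate(requests, {"node1": Resource(8192, 4)}, memory_only=False))
# [(0, 'node1'), (1, None)]
```

With memory-only accounting both requests land on `node1` even though its 4 vcores are overcommitted; checking vcores leaves the second request unplaced. In YARN's CapacityScheduler this choice corresponds to the resource calculator setting (`DefaultResourceCalculator` for memory only versus `DominantResourceCalculator` for memory and CPU).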

The Scheduler uses a pluggable architecture for the scheduling policy. Examples of such plug-ins are the CapacityScheduler and the FairScheduler; the CapacityScheduler is the default.
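
The pluggable design can be sketched as a small policy interface with interchangeable implementations, selected by name at startup, much as YARN selects its scheduler class through the `yarn.resourcemanager.scheduler.class` property. Both policies below are deliberately simplified toys, not the real CapacityScheduler or FairScheduler algorithms, and every class, queue, and application name is hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, Tuple

# app id -> (queue name, containers currently held)
Apps = Dict[str, Tuple[str, int]]


class SchedulingPolicy(ABC):
    @abstractmethod
    def pick_next(self, apps: Apps) -> str:
        """Return the id of the app that should receive the next free container."""


class QueueShareFifoPolicy(SchedulingPolicy):
    """Toy capacity-style policy: serve the queue furthest below its configured
    share, and within that queue serve apps in submission order."""

    def __init__(self, queue_shares: Dict[str, float]):
        self.queue_shares = queue_shares  # queue -> fraction of the cluster

    def pick_next(self, apps: Apps) -> str:
        usage: Dict[str, int] = {}
        for queue, held in apps.values():
            usage[queue] = usage.get(queue, 0) + held
        total = sum(usage.values()) or 1
        # Lowest (actual fraction / configured share) means most under-served.
        queue = min(usage, key=lambda q: (usage[q] / total) / self.queue_shares[q])
        return next(app for app, (q, _) in apps.items() if q == queue)


class FewestContainersPolicy(SchedulingPolicy):
    """Toy fair-style policy: serve the app currently holding the fewest containers."""

    def pick_next(self, apps: Apps) -> str:
        return min(apps, key=lambda app: apps[app][1])


# Name -> factory, standing in for a class name read from configuration.
POLICIES = {
    "capacity": lambda: QueueShareFifoPolicy({"prod": 0.7, "dev": 0.3}),
    "fair": FewestContainersPolicy,
}


class Scheduler:
    def __init__(self, policy_name: str):
        self.policy = POLICIES[policy_name]()

    def assign(self, apps: Apps) -> str:
        return self.policy.pick_next(apps)


apps = {"app1": ("prod", 5), "app2": ("prod", 1), "app3": ("dev", 3)}
print(Scheduler("capacity").assign(apps))  # app1
print(Scheduler("fair").assign(apps))      # app2
```

The `Scheduler` code stays the same when the policy is swapped; only the configured name changes, which is the point of a pluggable design.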

The ApplicationsManager is responsible for accepting job submissions from clients and negotiating the first Container in which the application's ApplicationMaster runs. It also monitors the ApplicationMaster and restarts it in the event of failure.
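
A minimal sketch of the ApplicationsManager's submit, launch, and restart cycle, assuming a caller-supplied `launch_am` callback that reports whether the ApplicationMaster finished successfully. The class, the container ID format, and the state strings are hypothetical simplifications; the retry cap mirrors the role of YARN's `yarn.resourcemanager.am.max-attempts` setting, whose default is 2.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Application:
    app_id: str
    state: str = "SUBMITTED"
    attempts: List[str] = field(default_factory=list)


class ApplicationsManagerSketch:
    """Toy model: accept submissions, launch the ApplicationMaster, retry on failure."""

    def __init__(self, max_attempts: int = 2):
        # Plays the role of yarn.resourcemanager.am.max-attempts (default 2).
        self.max_attempts = max_attempts
        self.apps: Dict[str, Application] = {}

    def submit(self, app_id: str) -> Application:
        # Accept the client's submission and record the application.
        app = Application(app_id)
        app.state = "ACCEPTED"
        self.apps[app_id] = app
        return app

    def run(self, app_id: str, launch_am: Callable[[str], bool]) -> str:
        """Launch the AM in a fresh first container per attempt until it succeeds
        or attempts run out. `launch_am` returns True if the AM finished cleanly."""
        app = self.apps[app_id]
        for attempt in range(1, self.max_attempts + 1):
            container_id = f"{app_id}_attempt{attempt}_am"  # hypothetical ID format
            app.attempts.append(container_id)
            app.state = "RUNNING"
            if launch_am(container_id):
                app.state = "FINISHED"
                return app.state
        app.state = "FAILED"
        return app.state


outcomes = iter([False, True])  # first AM attempt crashes, second succeeds
manager = ApplicationsManagerSketch(max_attempts=2)
manager.submit("app_0001")
print(manager.run("app_0001", lambda cid: next(outcomes)))  # FINISHED
print(manager.apps["app_0001"].attempts)
# ['app_0001_attempt1_am', 'app_0001_attempt2_am']

manager.submit("app_0002")
print(manager.run("app_0002", lambda cid: False))  # FAILED
```

Each retry gets a new first container, so a crash of the ApplicationMaster's host does not doom the application as long as attempts remain.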
