| PolarSPARC |

Introduction to Kubernetes (ARM Edition)

| Bhaskar S | 01/21/2019 |

Overview

Kubernetes (or k8s for short) is an extensible, open source container orchestration platform designed for managing containerized workloads and services at scale. It automates the deployment, scaling, and management of container-centric application workloads across a cluster of nodes (bare-metal, virtual, or cloud) by orchestrating the compute, network, and storage infrastructure on behalf of those workloads.

The two main types of nodes in a Kubernetes cluster are:

Master :: a node that acts as the Control Plane for the cluster. It is responsible for all application workload deployment, scheduling, and placement decisions as well as detecting and managing changes to the state of deployed applications

Node(s) :: node(s) that actually run the application containers. They are also occasionally referred to as Minion(s). The master is also a node, but it is not targeted for application deployment

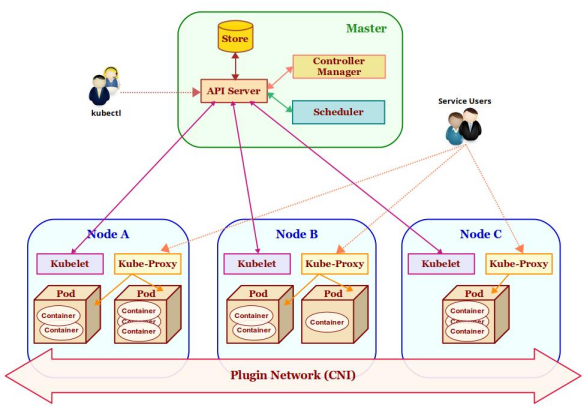

The following Figure-1 illustrates the high-level architectural overview of Kubernetes:

The core components that make up a Kubernetes cluster are as follows:

Store :: a highly reliable, distributed, and consistent key-value data store used for persisting and maintaining state information about the various components of the Kubernetes cluster. By default, Kubernetes uses etcd as the key-value store

API Server :: acts as the entry point for the Control Plane by exposing an API endpoint for all interactions with and within the Kubernetes cluster. It is through the API Server that requests are made for the deployment, administration, management, and operation of container-based applications. It uses the key-value store to persist and maintain state information about all the components of the Kubernetes cluster

kubectl :: the command-line tool for interacting with the API Server. It is used by administrators (or operators) to deploy and scale applications, as well as to manage the Kubernetes cluster

Pod(s) :: a group of one or more containers that run together. It is the smallest unit of deployment in Kubernetes. Think of it as a logical host with shared network and storage. Application pods are scheduled to run on different nodes of the Kubernetes cluster based on their resource needs and application constraints. Every pod within the cluster gets its own unique ip-address, and the application containers within a pod communicate with each other using localhost

kubelet :: an agent that runs on every node of the Kubernetes cluster. It is responsible for creating and starting application pods on the node and for making sure all the application containers within each pod are up and running. In addition, it reports the state and health of the node, as well as of all its running pods, to the master via the API Server

Scheduler :: responsible for scheduling application pod(s) to run on the selected node(s) of the Kubernetes cluster based on the application resource requirements as well as application specific affinity constraints

Service :: a logical networking abstraction over a group of pod(s) (selected by a label associated with an application) running on the node(s) of the Kubernetes cluster. It enables access to an application via service discovery and spreads requests across the pods through load balancing. To access an application, each service is assigned a cluster-wide internal ip-address:port

Controller Manager :: manages different types of controllers that are responsible for monitoring and detecting changes to the state of the Kubernetes cluster (via the API server) and ensuring that the cluster is moved to the desired state. The different types of controllers are:

Node Controller => responsible for monitoring and detecting the state & health (up or down) of the node(s) in the Kubernetes cluster

ReplicaSet => the successor to the Replication Controller, responsible for maintaining the desired number of pod replicas in the cluster

Endpoints Controller => responsible for detecting and managing changes to the application service access endpoints (list of ip-address:port)

kube-proxy :: a network proxy that runs on each of the node(s) of the Kubernetes cluster and acts as an entry point for access to the various application service endpoints. It routes requests to the appropriate pod(s) in the cluster

Plugin Network :: acts as the bridge (overlay network) that enables communication between the pod(s) running on different node(s) of the cluster. There are different implementations of this component by various third parties, such as calico, flannel, weave-net, etc. They all need to adhere to a common specification called the Container Network Interface, or CNI for short
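The reconcile idea behind the Controller Manager (observe the current state, compare it with the desired state, act on the difference) can be illustrated with a toy shell sketch. The helper name reconcile_action is hypothetical, and the real ReplicaSet controller is Go code that works through the API Server; this only models the decision it makes for a single ReplicaSet.

```shell
#!/bin/sh
# Toy model (hypothetical helper) of one reconcile step of a ReplicaSet-style
# controller: given the desired and the currently observed number of pod
# replicas, print the action needed to move toward the desired state.
reconcile_action() {
  desired="$1"
  current="$2"
  if [ "$current" -lt "$desired" ]; then
    echo "create $((desired - current))"   # too few pods: start the missing ones
  elif [ "$current" -gt "$desired" ]; then
    echo "delete $((current - desired))"   # too many pods: remove the surplus
  else
    echo "none"                            # already in the desired state
  fi
}
```

For example, if one of two desired replicas disappears, `reconcile_action 2 1` prints `create 1`; a controller repeats this comparison every time it observes a change in the cluster.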

Installation and Setup

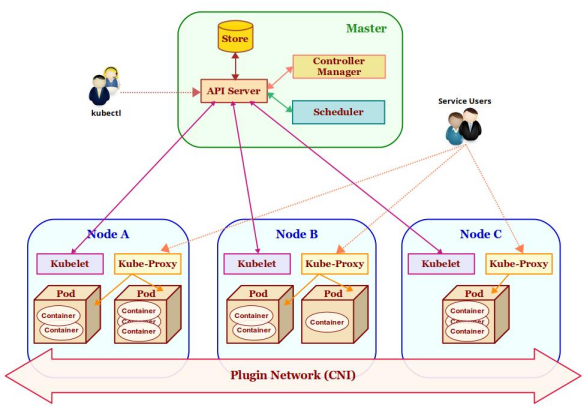

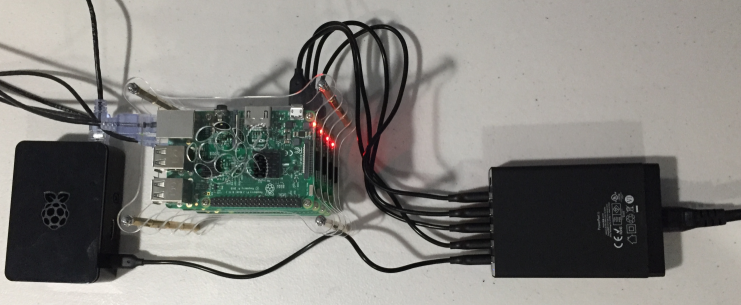

The installation will be on a 5-node Raspberry Pi 3 Cluster running Linux.

The following Figure-2 illustrates the 5-node Raspberry Pi 3 cluster in operation:

For this tutorial, let us assume the 5 nodes in the cluster have the following host names and IP addresses:

| Host name | IP Address |
|---|---|
| my-node-1 | 192.168.1.201 |
| my-node-2 | 192.168.1.202 |
| my-node-3 | 192.168.1.203 |
| my-node-4 | 192.168.1.204 |
| my-node-5 | 192.168.1.205 |

Open a Terminal window and open a tab for each of the 5 nodes my-node-1 thru my-node-5. In each of the Terminal tabs, ssh into the corresponding node.

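Ensure the installed version of Docker is *17.09*. Otherwise, the kubeadm pre-flight checks will fail with the following error:

[ERROR SystemVerification]: unsupported docker version: 18.09.0

First, we need to install Docker version 17.09 in a roundabout way: the convenience script from get.docker.com installs the latest Docker release, which we then remove and replace with the pinned 17.09 package. To do that, on each of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following commands: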

$ curl -fsSL https://get.docker.com -o get-docker.sh

$ sudo sh get-docker.sh

$ sudo usermod -aG docker $USER

$ sudo systemctl stop docker

$ sudo apt remove docker-ce

$ sudo apt-get install docker-ce=17.09.0~ce-0~raspbian

$ sudo shutdown -r now

Once the reboot completes, execute the following command to verify that everything is ok:

$ docker version

The following would be a typical output:

Client:

Version: 17.09.0-ce

API version: 1.32

Go version: go1.8.3

Git commit: afdb6d4

Built: Tue Sep 26 22:58:36 2017

OS/Arch: linux/arm

Server:

Version: 17.09.0-ce

API version: 1.32 (minimum version 1.12)

Go version: go1.8.3

Git commit: afdb6d4

Built: Tue Sep 26 22:52:15 2017

OS/Arch: linux/arm

Experimental: false
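Since the kubeadm pre-flight check rejects other Docker versions, it can help to verify the server version on every node in a scriptable way. A minimal sketch, assuming a hypothetical helper named is_docker_1709; `docker version --format` accepts a Go template, and `{{.Server.Version}}` selects the daemon's version string:

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit status 0) only if the given Docker
# version string belongs to the 17.09 series, e.g. "17.09.0-ce".
is_docker_1709() {
  case "$1" in
    17.09|17.09.*) return 0 ;;   # 17.09 series: accepted
    *) return 1 ;;               # anything else (e.g. 18.09.0): rejected
  esac
}
```

$ is_docker_1709 "$(docker version --format '{{.Server.Version}}')" && echo "Docker 17.09 OK"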

Next, we need to disable disk-based swap, since the kubelet, by default, refuses to start when swap is enabled. To do that, on each of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following commands:

$ sudo systemctl disable dphys-swapfile

$ sudo dphys-swapfile swapoff

$ sudo dphys-swapfile uninstall

$ sudo update-rc.d dphys-swapfile remove
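To confirm swap is really off before going further, one option is to inspect /proc/swaps, which lists the active swap areas below a single header line. A minimal sketch with a hypothetical helper name; the optional file argument exists only so the check can be tried against a sample file:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if no swap area is active. /proc/swaps
# always starts with one header line ("Filename Type Size Used Priority");
# every additional non-empty line describes an active swap file or partition.
swap_is_off() {
  swaps_file="${1:-/proc/swaps}"
  active="$(tail -n +2 "$swaps_file" | grep -c .)"
  [ "$active" -eq 0 ]
}
```

$ swap_is_off && echo "swap is off"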

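Next, we need to enable the cpuset and memory cgroups via the kernel boot parameters, so that Docker can enforce cpu and memory limits (quotas). To do that, on each of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following commands (the change takes effect at the reboot at the end of the next step):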

$ sudo cp /boot/cmdline.txt /boot/cmdline_backup.txt

$ line="$(head -n1 /boot/cmdline.txt) cgroup_enable=cpuset cgroup_enable=memory"

$ echo "$line" | sudo tee /boot/cmdline.txt
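The commands above append the flags unconditionally, so running them a second time duplicates the flags on the kernel command line. A minimal idempotent sketch (the helper name is hypothetical) appends each flag only when it is not already present. It prints the new line instead of writing the file, so that the file is fully read before tee truncates it:

```shell
#!/bin/sh
# Hypothetical helper: print the first line of a kernel command-line file
# (default /boot/cmdline.txt) with the cgroup flags appended, skipping any
# flag that is already present, so repeated runs never duplicate a flag.
cmdline_with_cgroups() {
  file="${1:-/boot/cmdline.txt}"
  line="$(head -n1 "$file")"
  for flag in cgroup_enable=cpuset cgroup_enable=memory; do
    case " $line " in
      *" $flag "*) ;;            # already there: leave it alone
      *) line="$line $flag" ;;   # missing: append it
    esac
  done
  printf '%s\n' "$line"
}
```

$ new_line="$(cmdline_with_cgroups /boot/cmdline.txt)"

$ echo "$new_line" | sudo tee /boot/cmdline.txt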

Next, we need to install Kubernetes (installing the kubeadm package also pulls in kubelet and kubectl as dependencies). To do that, on each of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following commands:

$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

$ echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

$ sudo apt-get update

$ sudo apt-get install -y kubeadm

$ sudo reboot now

Once the reboot completes, execute the following command to verify that everything is ok:

$ kubeadm version

The following would be a typical output:

kubeadm version: &version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:33:30Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/arm"}

Then, execute the following command:

$ kubectl version

The following would be a typical output:

Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:35:51Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/arm"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

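The connection error above is expected at this point: the control plane has not been set up yet, so kubectl has no cluster to talk to.

Finally, we need to prevent Docker and the Kubernetes packages from being upgraded by apt. To do that, on each of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following command: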

$ sudo apt-mark hold kubelet kubeadm kubectl docker-ce

The following would be a typical output:

kubelet set on hold.

kubeadm set on hold.

kubectl set on hold.

docker-ce set on hold.
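To double-check the holds later (for example, before running a system-wide upgrade), `apt-mark showhold` lists the held packages. A minimal sketch with a hypothetical helper that reports any expected package missing from that list:

```shell
#!/bin/sh
# Hypothetical helper: read the output of `apt-mark showhold` on stdin and
# print every package named on the command line that is NOT on hold.
missing_holds() {
  held="$(cat)"
  for pkg in "$@"; do
    # -x: whole-line match, -F: treat the package name literally
    printf '%s\n' "$held" | grep -qxF -- "$pkg" || echo "$pkg"
  done
}
```

$ apt-mark showhold | missing_holds kubelet kubeadm kubectl docker-ce

No output means all four packages are on hold.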

That's it!!! This completes the installation and setup process.

Hands-on with Kubernetes

To get started, we need to make the node my-node-1 the master node and set up the control plane. To do that, execute the following command on my-node-1:

$ sudo kubeadm init

The following would be a typical output:

[init] Using Kubernetes version: v1.13.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [my-node-1 localhost] and IPs [192.168.1.201 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [my-node-1 localhost] and IPs [192.168.1.201 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [my-node-1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.201]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 175.514425 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "my-node-1" as an annotation

[mark-control-plane] Marking the node my-node-1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node my-node-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: j99kcz.pfwryj2hhhcnmbrb

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

kubeadm join 192.168.1.201:6443 --token j99kcz.pfwryj2hhhcnmbrb --discovery-token-ca-cert-hash sha256:af11197f74a71890faa275f42f4e2d6c0e9da2fe34bc360d3c21f83994255d76
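The join command at the end of this output is needed later on the other nodes, so it is worth saving the output (for example with `sudo kubeadm init | tee kubeadm-init.log`). A minimal sketch of a hypothetical helper that pulls the join command out of the saved text; it assumes the command is printed on a single line, as in the output above:

```shell
#!/bin/sh
# Hypothetical helper: read saved `kubeadm init` output on stdin and print
# the first "kubeadm join ..." command found (leading whitespace dropped).
# Assumes the whole join command sits on one line.
extract_join_command() {
  sed -n 's/.*\(kubeadm join .*\)$/\1/p' | head -n 1
}
```

$ extract_join_command < kubeadm-init.log

Bootstrap tokens expire after 24 hours by default; if the saved token has expired, running `sudo kubeadm token create --print-join-command` on my-node-1 prints a fresh join command.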

In order to use the kubectl command-line tool as a non-root user on the master node (my-node-1), execute the following commands on my-node-1:

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

To list all the node(s) in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl get nodes

The following would be a typical output:

NAME STATUS ROLES AGE VERSION

my-node-1 NotReady master 12m v1.13.2

The master node reports NotReady because no pod network has been installed yet. We need to install an overlay Plugin Network for inter-pod communication. For our demonstration, we will choose the weave-net implementation. To install the overlay network, execute the following command on my-node-1:

$ kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

The following would be a typical output:

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

To list all the pod(s) running in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl get pods --all-namespaces

The following would be a typical output:

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-86c58d9df4-9vdq6 1/1 Running 0 13m

kube-system coredns-86c58d9df4-wrhzw 1/1 Running 0 13m

kube-system etcd-my-node-1 1/1 Running 0 13m

kube-system kube-apiserver-my-node-1 1/1 Running 1 14m

kube-system kube-controller-manager-my-node-1 1/1 Running 0 13m

kube-system kube-proxy-bsmlw 1/1 Running 0 13m

kube-system kube-scheduler-my-node-1 1/1 Running 0 12m

kube-system weave-net-s8pp6 2/2 Running 0 66s

As is evident from Output.8 above, instances of the API Server, etcd, Controller Manager, Scheduler, and Plugin Network (weave-net) are all up and running.
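While the cluster is still coming up, some of these pods may briefly show a partial READY count (such as 1/2) or a status other than Running. A minimal sketch of a hypothetical helper that lists the pods that are not yet fully ready, based on the column layout shown above:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods --all-namespaces` output on
# stdin (columns: NAMESPACE NAME READY STATUS ...) and print NAMESPACE/NAME
# of every pod whose STATUS is not "Running" or whose READY column
# (ready/total containers) is not n/n. The header line is skipped.
list_unready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2
  }'
}
```

$ kubectl get pods --all-namespaces | list_unready_pods

An empty result means every pod is Running with all of its containers ready.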

For this tutorial, we want nodes my-node-2 thru my-node-5 to be the worker nodes (minions) of the Kubernetes cluster. From Output.5 above, we can determine the kubeadm join command to use. On each of the nodes my-node-2 thru my-node-5 (in their respective Terminal tabs), execute the following command:

$ sudo kubeadm join 192.168.1.201:6443 --token j99kcz.pfwryj2hhhcnmbrb --discovery-token-ca-cert-hash sha256:af11197f74a71890faa275f42f4e2d6c0e9da2fe34bc360d3c21f83994255d76

The following would be a typical output:

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "192.168.1.201:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.1.201:6443"

[discovery] Requesting info from "https://192.168.1.201:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.1.201:6443"

[discovery] Successfully established connection with API Server "192.168.1.201:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "my-node-2" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

To list all the active nodes in the Kubernetes cluster, execute the following command on my-node-1 (after waiting for about 2 to 3 minutes):

$ kubectl get nodes

The following would be a typical output:

NAME STATUS ROLES AGE VERSION

my-node-1 Ready master 20m v1.13.2

my-node-2 Ready <none> 3m56s v1.13.2

my-node-3 Ready <none> 2m39s v1.13.2

my-node-4 Ready <none> 2m29s v1.13.2

my-node-5 Ready <none> 2m20s v1.13.2
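Rather than waiting a fixed 2 to 3 minutes, one can poll until the expected number of nodes report Ready. A minimal sketch with two hypothetical helpers: count_ready_nodes parses the `kubectl get nodes` output shown above, and wait_for_ready_nodes polls it every 10 seconds:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes` output on stdin and print how
# many nodes have a STATUS of exactly "Ready" (so "NotReady" and the header
# line are not counted).
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: poll every 10 seconds until at least $1 nodes are
# Ready; give up with exit status 1 after $2 attempts (default 30).
wait_for_ready_nodes() {
  want="$1"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    have="$(kubectl get nodes 2>/dev/null | count_ready_nodes)"
    if [ "$have" -ge "$want" ]; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 10
  done
  return 1
}
```

$ wait_for_ready_nodes 5 && kubectl get nodes

On kubectl 1.11 and later, the built-in `kubectl wait --for=condition=Ready nodes --all --timeout=300s` should achieve much the same for the nodes that have already registered, without a custom loop.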

To display detailed information about any pod (say the Controller Manager) in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl describe pod kube-controller-manager-my-node-1 -n kube-system

The following would be a typical output:

Name: kube-controller-manager-my-node-1

Namespace: kube-system

Priority: 2000000000

PriorityClassName: system-cluster-critical

Node: my-node-1/192.168.1.201

Start Time: Sun, 20 Jan 2019 02:16:01 +0000

Labels: component=kube-controller-manager

tier=control-plane

Annotations: kubernetes.io/config.hash: 6e90d91dfeef5212bdb942dae8aa1816

kubernetes.io/config.mirror: 6e90d91dfeef5212bdb942dae8aa1816

kubernetes.io/config.seen: 2019-01-20T02:16:00.660478136Z

kubernetes.io/config.source: file

scheduler.alpha.kubernetes.io/critical-pod:

Status: Running

IP: 192.168.1.201

Containers:

kube-controller-manager:

Container ID: docker://5964861e5ee0fcb489fa915446b7f500c726f7446f6a779ab7b343fe5464a599

Image: k8s.gcr.io/kube-controller-manager:v1.13.2

Image ID: docker-pullable://k8s.gcr.io/kube-controller-manager@sha256:649c68d0798123187c1b1fbad3f4494372f4a6304e6e19f957a03dd2e59bc858

Port: <none>

Host Port: <none>

Command:

kube-controller-manager

--address=127.0.0.1

--authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

--authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

--client-ca-file=/etc/kubernetes/pki/ca.crt

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt

--cluster-signing-key-file=/etc/kubernetes/pki/ca.key

--controllers=*,bootstrapsigner,tokencleaner

--kubeconfig=/etc/kubernetes/controller-manager.conf

--leader-elect=true

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

--root-ca-file=/etc/kubernetes/pki/ca.crt

--service-account-private-key-file=/etc/kubernetes/pki/sa.key

--use-service-account-credentials=true

State: Running

Started: Sun, 20 Jan 2019 02:16:04 +0000

Ready: True

Restart Count: 0

Requests:

cpu: 200m

Liveness: http-get http://127.0.0.1:10252/healthz delay=15s timeout=15s period=10s #success=1 #failure=8

Environment: <none>

Mounts:

/etc/ca-certificates from etc-ca-certificates (ro)

/etc/kubernetes/controller-manager.conf from kubeconfig (ro)

/etc/kubernetes/pki from k8s-certs (ro)

/etc/ssl/certs from ca-certs (ro)

/usr/libexec/kubernetes/kubelet-plugins/volume/exec from flexvolume-dir (rw)

/usr/local/share/ca-certificates from usr-local-share-ca-certificates (ro)

/usr/share/ca-certificates from usr-share-ca-certificates (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

ca-certs:

Type: HostPath (bare host directory volume)

Path: /etc/ssl/certs

HostPathType: DirectoryOrCreate

etc-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /etc/ca-certificates

HostPathType: DirectoryOrCreate

flexvolume-dir:

Type: HostPath (bare host directory volume)

Path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec

HostPathType: DirectoryOrCreate

k8s-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki

HostPathType: DirectoryOrCreate

kubeconfig:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/controller-manager.conf

HostPathType: FileOrCreate

usr-local-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/local/share/ca-certificates

HostPathType: DirectoryOrCreate

usr-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/share/ca-certificates

HostPathType: DirectoryOrCreate

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute

Events: <none>

To list all the application pod(s) running in the Kubernetes cluster (in the default namespace), execute the following command on my-node-1:

$ kubectl get pods

The following would be a typical output:

No resources found.

To list all the service(s) running in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl get services

The following would be a typical output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16h

To start 2 replicas of the Raspberry Pi-compatible httpd server in our Kubernetes cluster, execute the following command on my-node-1:

$ kubectl run pi-httpd --image=hypriot/rpi-busybox-httpd --port=80 --replicas=2

The following would be a typical output:

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/pi-httpd created

Note that we named the application deployment pi-httpd.
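Since `kubectl run` with the deployment generator is deprecated (as the warning above says), the same Deployment can instead be described declaratively. A minimal sketch, assuming a hypothetical helper deployment_manifest that prints an apps/v1 Deployment with the same name, run=pi-httpd label and selector, replica count, and container port as the one created above:

```shell
#!/bin/sh
# Hypothetical helper: print a Deployment manifest roughly equivalent to the
# one `kubectl run` generated above (same name, same run=<name> label and
# selector, same replica count and container port).
# Arguments: name image replicas container-port
deployment_manifest() {
  name="$1"
  image="$2"
  replicas="$3"
  port="$4"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
  labels:
    run: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      run: $name
  template:
    metadata:
      labels:
        run: $name
    spec:
      containers:
      - name: $name
        image: $image
        ports:
        - containerPort: $port
EOF
}
```

$ deployment_manifest pi-httpd hypriot/rpi-busybox-httpd 2 80 | kubectl apply -f -

Applying it on a fresh cluster (or after `kubectl delete deployment pi-httpd`) should yield an equivalent deployment; keeping such a manifest in a file also makes the desired state easy to review and re-apply.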

To list the application deployment pi-httpd running in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl get deployments pi-httpd -o wide

The following would be a typical output:

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

pi-httpd 2/2 2 2 78s pi-httpd hypriot/rpi-busybox-httpd run=pi-httpd

To display detailed information about the application deployment pi-httpd, execute the following command on my-node-1:

$ kubectl describe deployments pi-httpd

The following would be a typical output:

Name: pi-httpd

Namespace: default

CreationTimestamp: Sun, 20 Jan 2019 19:28:20 +0000

Labels: run=pi-httpd

Annotations: deployment.kubernetes.io/revision: 1

Selector: run=pi-httpd

Replicas: 2 desired | 2 updated | 2 total | 2 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: run=pi-httpd

Containers:

pi-httpd:

Image: hypriot/rpi-busybox-httpd

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: pi-httpd-7ddbfdd6b9 (2/2 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 17m deployment-controller Scaled up replica set pi-httpd-7ddbfdd6b9 to 2

To list all the application pod(s) running in the Kubernetes cluster, along with their ip-addresses and nodes, execute the following command on my-node-1:

$ kubectl get pods -o wide

The following would be a typical output:

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pi-httpd-7ddbfdd6b9-bnlbt 1/1 Running 0 5m45s 10.36.0.1 my-node-4 <none> <none>

pi-httpd-7ddbfdd6b9-rtgd9 1/1 Running 0 5m45s 10.44.0.1 my-node-2 <none> <none>

From Output.17, we see that our application pod(s) have been deployed on the nodes my-node-2 and my-node-4 of our Kubernetes cluster.
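With more replicas or nodes, reading the NODE column by eye gets tedious. A minimal sketch of a hypothetical helper that counts pods per node from the `kubectl get pods -o wide` output shown above (NODE is the 7th column):

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -o wide` output on stdin and
# print each NODE with the number of pods placed on it, sorted by node name.
# The header line is skipped; NODE is the 7th whitespace-separated column.
pods_per_node() {
  awk 'NR > 1 { count[$7]++ } END { for (node in count) print node, count[node] }' | sort
}
```

$ kubectl get pods -o wide | pods_per_node

For the output above, this should print my-node-2 1 and my-node-4 1 on separate lines.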

To display detailed information about the application pod(s) whose names begin with pi-httpd, execute the following command on my-node-1:

$ kubectl describe pods pi-httpd

The following would be a typical output:

Name: pi-httpd-7ddbfdd6b9-bnlbt

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: my-node-4/192.168.1.204

Start Time: Sun, 20 Jan 2019 19:28:20 +0000

Labels: pod-template-hash=7ddbfdd6b9

run=pi-httpd

Annotations: <none>

Status: Running

IP: 10.36.0.1

Controlled By: ReplicaSet/pi-httpd-7ddbfdd6b9

Containers:

pi-httpd:

Container ID: docker://fc950488400e0d8f5ff948c40a1e456084a74fae2ad130de9feb79bcbc795c31

Image: hypriot/rpi-busybox-httpd

Image ID: docker-pullable://hypriot/rpi-busybox-httpd@sha256:c00342f952d97628bf5dda457d3b409c37df687c859df82b9424f61264f54cd1

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Sun, 20 Jan 2019 19:28:27 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-ps5d4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-ps5d4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-ps5d4

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned default/pi-httpd-7ddbfdd6b9-bnlbt to my-node-4

Normal Pulling 10m kubelet, my-node-4 pulling image "hypriot/rpi-busybox-httpd"

Normal Pulled 10m kubelet, my-node-4 Successfully pulled image "hypriot/rpi-busybox-httpd"

Normal Created 10m kubelet, my-node-4 Created container

Normal Started 10m kubelet, my-node-4 Started container

Name: pi-httpd-7ddbfdd6b9-rtgd9

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: my-node-2/192.168.1.202

Start Time: Sun, 20 Jan 2019 19:28:20 +0000

Labels: pod-template-hash=7ddbfdd6b9

run=pi-httpd

Annotations: <none>

Status: Running

IP: 10.44.0.1

Controlled By: ReplicaSet/pi-httpd-7ddbfdd6b9

Containers:

pi-httpd:

Container ID: docker://03723ba3ece33e62e4c4667ab8fd89efe90f718b777ef815a9f5566f392ad9f8

Image: hypriot/rpi-busybox-httpd

Image ID: docker-pullable://hypriot/rpi-busybox-httpd@sha256:c00342f952d97628bf5dda457d3b409c37df687c859df82b9424f61264f54cd1

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Sun, 20 Jan 2019 19:28:27 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-ps5d4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-ps5d4:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-ps5d4

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned default/pi-httpd-7ddbfdd6b9-rtgd9 to my-node-2

Normal Pulling 10m kubelet, my-node-2 pulling image "hypriot/rpi-busybox-httpd"

Normal Pulled 10m kubelet, my-node-2 Successfully pulled image "hypriot/rpi-busybox-httpd"

Normal Created 10m kubelet, my-node-2 Created container

Normal Started 10m kubelet, my-node-2 Started container

From the Output.18 above, we see the pods are running on nodes my-node-2 and my-node-4 with unique ip-addresses 10.44.0.1 and 10.36.0.1 respectively.

To list all the replicasets for our cluster, execute the following command on my-node-1:

$ kubectl get replicasets -o wide

The following would be a typical output:

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

pi-httpd-7ddbfdd6b9 2 2 2 18m pi-httpd hypriot/rpi-busybox-httpd pod-template-hash=7ddbfdd6b9,run=pi-httpd

To display detailed information about the replicasets for our cluster, execute the following command on my-node-1:

$ kubectl describe replicasets

The following would be a typical output:

Name: pi-httpd-7ddbfdd6b9

Namespace: default

Selector: pod-template-hash=7ddbfdd6b9,run=pi-httpd

Labels: pod-template-hash=7ddbfdd6b9

run=pi-httpd

Annotations: deployment.kubernetes.io/desired-replicas: 2

deployment.kubernetes.io/max-replicas: 3

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/pi-httpd

Replicas: 2 current / 2 desired

Pods Status: 2 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: pod-template-hash=7ddbfdd6b9

run=pi-httpd

Containers:

pi-httpd:

Image: hypriot/rpi-busybox-httpd

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 19m replicaset-controller Created pod: pi-httpd-7ddbfdd6b9-rtgd9

Normal SuccessfulCreate 19m replicaset-controller Created pod: pi-httpd-7ddbfdd6b9-bnlbt

To create a service endpoint (virtual ip-address:port) for the deployed application labeled pi-httpd, so that the application can be accessed from any node on the cluster, execute the following command on my-node-1:

$ kubectl expose deployment pi-httpd --port=8080 --target-port=80 --type=NodePort

The following would be a typical output:

service/pi-httpd exposed

Once the service is created, the kube-proxy on each node, which watches the master (via the API Server) for the creation or modification of service endpoints, sets up a route from the service endpoint ip-address:port to the list of ip-address:port of the pod instances deployed for the application.
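Assuming kube-proxy runs in its default iptables mode, the spreading of requests is random rather than round-robin: for a service with n endpoints, the first rule matches with probability 1/n, the next matches 1/(n-1) of the remaining traffic, and so on, with the last rule matching unconditionally, so each endpoint receives about 1/n of the requests. The sketch below (hypothetical helper name) only computes these numbers with awk; it does not read or modify any iptables rules:

```shell
#!/bin/sh
# Hypothetical helper: for a service with $1 endpoints, print one line per
# endpoint: <endpoint-number> <probability its rule matches> <overall share>.
# Rule probabilities follow the 1/n, 1/(n-1), ..., 1 pattern of kube-proxy's
# iptables mode; the overall share is the traffic still unmatched by earlier
# rules multiplied by this rule's probability.
endpoint_shares() {
  awk -v n="$1" 'BEGIN {
    remaining = 1
    for (i = 0; i < n; i++) {
      p = 1 / (n - i)
      share = remaining * p
      remaining -= share
      printf "%d %.5f %.5f\n", i + 1, p, share
    }
  }'
}
```

For the two pi-httpd pods, `endpoint_shares 2` gives rule probabilities of 0.5 and 1, and a share of 0.5 for each pod. The actual rules should be visible on any node with `sudo iptables-save -t nat | grep pi-httpd`.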

To list all the service(s) running in the Kubernetes cluster, execute the following command on my-node-1:

$ kubectl get services

The following would be a typical output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18h

pi-httpd NodePort 10.111.215.159 <none> 8080:31275/TCP 62s

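From the Output.22 above, we see the application pi-httpd can be accessed from anywhere in the cluster via the ip-address 10.111.215.159 and port 8080. Since it is a NodePort service, it can also be accessed on port 31275 of any node's ip-address (for example, 192.168.1.201:31275).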

To display detailed information about the service endpoint labeled pi-httpd, execute the following command on my-node-1:

$ kubectl describe service pi-httpd

The following would be a typical output:

Name: pi-httpd

Namespace: default

Labels: run=pi-httpd

Annotations: <none>

Selector: run=pi-httpd

Type: NodePort

IP: 10.111.215.159

Port: <unset> 8080/TCP

TargetPort: 80/TCP

NodePort: <unset> 31275/TCP

Endpoints: 10.36.0.1:80,10.44.0.1:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

From any of the nodes my-node-<N>, where <N> ranges from 1 thru 5, execute the following command:

$ curl http://10.111.215.159:8080

The following would be a typical output:

<html>

<head><title>Pi armed with Docker by Hypriot</title>

<body style="width: 100%; background-color: black;">

<div id="main" style="margin: 100px auto 0 auto; width: 800px;">

<img src="pi_armed_with_docker.jpg" alt="pi armed with docker" style="width: 800px">

</div>

</body>

</html>
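Because pi-httpd was exposed with --type=NodePort, it should also answer on its node port (31275 in Output.22) at the ip-address of every node, including from a machine outside the cluster on the same network. A minimal sketch with two hypothetical helpers, assuming curl is installed: service_node_port reads the node port from the `kubectl get services` output, and check_node_ports requests the page from each node:

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get services` output on stdin and print
# the node port of the service named $1, taken from a PORT(S) value such as
# 8080:31275/TCP. Only meaningful for NodePort services.
service_node_port() {
  awk -v svc="$1" '$1 == svc { split($5, p, "[:/]"); print p[2] }'
}

# Hypothetical helper: request http://<ip>:<port> on each given ip-address
# and report whether it answered within 5 seconds.
check_node_ports() {
  port="$1"
  shift
  for ip in "$@"; do
    if curl -s -o /dev/null --max-time 5 "http://$ip:$port"; then
      echo "$ip ok"
    else
      echo "$ip failed"
    fi
  done
}
```

$ port="$(kubectl get services | service_node_port pi-httpd)"

$ check_node_ports "$port" 192.168.1.201 192.168.1.202 192.168.1.203 192.168.1.204 192.168.1.205

Alternatively, `kubectl get service pi-httpd -o jsonpath='{.spec.ports[0].nodePort}'` prints the node port directly.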

With this, we conclude the basic exercises we performed on our Kubernetes cluster.

References