| PolarSPARC |

Introduction to Linear Algebra - Part 3

| Bhaskar S | 02/13/2022 |

In Part 2 of this series, we covered some of the basics of Matrices, in particular, some concepts, basic operations, and systems of equations. In this part, we will continue our journey with Matrices.

System of Linear Equations - 2

There are situations in which a system of equations has NO solution.

Let us look at an example to illustrate this case.

Example-1: Solve the following three linear equations:

$\begin{align*} & x - y + 2z = 4 \\ & x + z = 6 \\ & 2x - 3y + 5z = 4 \end{align*}$

Let us convert the three linear equations to an augmented matrix as follows:

$\begin{bmatrix} 1 & -1 & 2 &\bigm| & 4 \\ 1 & 0 & 1 &\bigm| & 6 \\ 2 & -3 & 5 &\bigm| & 4 \end{bmatrix}$

Performing the operation $R_2 + (-1)R_1 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & -1 & 2 &\bigm| & 4 \\ 0 & 1 & -1 &\bigm| & 2 \\ 2 & -3 & 5 &\bigm| & 4 \end{bmatrix}$

Performing the operation $R_3 + (-2)R_1 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & -1 & 2 &\bigm| & 4 \\ 0 & 1 & -1 &\bigm| & 2 \\ 0 & -1 & 1 &\bigm| & -4 \end{bmatrix}$

Performing the operation $R_3 + R_2 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & -1 & 2 &\bigm| & 4 \\ 0 & 1 & -1 &\bigm| & 2 \\ 0 & 0 & 0 &\bigm| & -2 \end{bmatrix}$

The coefficient entries in the last row of the matrix are all ZEROs, so the row reads $0x + 0y + 0z = -2$, which is inconsistent. Hence, this system of equations has NO solution.
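The check used in Example-1 can be sketched in Python. This is a minimal illustration, not a definitive implementation: the function names `row_echelon` and `is_inconsistent` are made up for this sketch, and exact `Fraction` arithmetic is assumed so that comparisons with zero are reliable.

```python
from fractions import Fraction


def row_echelon(aug):
    """Return a row echelon form of an augmented matrix (a list of rows)."""
    m = [[Fraction(v) for v in row] for row in aug]
    rows, cols = len(m), len(m[0]) - 1  # the last column holds the constants
    pivot_row = 0
    for col in range(cols):
        # Find a row at or below pivot_row with a non-zero entry in this column.
        pivot = next((r for r in range(pivot_row, rows) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        # Eliminate the entries below the pivot.
        for r in range(pivot_row + 1, rows):
            factor = m[r][col] / m[pivot_row][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return m


def is_inconsistent(aug):
    """True if some row reads 0 = c with c non-zero."""
    return any(all(v == 0 for v in row[:-1]) and row[-1] != 0
               for row in row_echelon(aug))


example_1 = [[1, -1, 2, 4], [1, 0, 1, 6], [2, -3, 5, 4]]
print(is_inconsistent(example_1))  # True
```

For Example-1 the last row of the echelon form has zero coefficients and the constant -2, which is exactly the inconsistent row found by hand.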

One can continue the process of Gaussian elimination on an augmented matrix that is in row echelon form and reduce it further to a form in which the diagonal elements of the coefficient part are all 1s and all of its other elements are 0s, shown as follows:

$\begin{bmatrix} 1 & 0 & 0 &\bigm| & p \\ 0 & 1 & 0 &\bigm| & q \\ 0 & 0 & 1 &\bigm| & r \end{bmatrix}$

In other words, the elements of the diagonal must be all ONEs, while all other elements above and below the diagonal must be ZEROs.

This form of augmented matrix is called the Reduced Row Echelon form.

This process of finding the solution for a system of equations, by simplifying a given augmented matrix to a reduced row echelon form, is called Gauss-Jordan Elimination.

Let us look at an example using the Gauss-Jordan elimination.

Example-2: Solve the following three linear equations:

$\begin{align*} & x + 4y + 2z = 1 \\ & 2x + 5y + z = 5 \\ & 4x + 10y - z = 1 \end{align*}$

Let us convert the three linear equations to an augmented matrix as follows:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 2 & 5 & 1 &\bigm| & 5 \\ 4 & 10 & -1 &\bigm| & 1 \end{bmatrix}$

Performing the operation $R_2 + (-2)R_1 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & -3 & -3 &\bigm| & 3 \\ 4 & 10 & -1 &\bigm| & 1 \end{bmatrix}$

Performing the operation $(-\frac{1}{3})R_2 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & 1 & 1 &\bigm| & -1 \\ 4 & 10 & -1 &\bigm| & 1 \end{bmatrix}$

Performing the operation $R_3 + (-4)R_1 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & 1 & 1 &\bigm| & -1 \\ 0 & -6 & -9 &\bigm| & -3 \end{bmatrix}$

Performing the operation $(-\frac{1}{3})R_3 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & 1 & 1 &\bigm| & -1 \\ 0 & 2 & 3 &\bigm| & 1 \end{bmatrix}$

Performing the operation $R_3 + (-2)R_2 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & 1 & 1 &\bigm| & -1 \\ 0 & 0 & 1 &\bigm| & 3 \end{bmatrix}$

Performing the operation $R_2 + (-1)R_3 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & 4 & 2 &\bigm| & 1 \\ 0 & 1 & 0 &\bigm| & -4 \\ 0 & 0 & 1 &\bigm| & 3 \end{bmatrix}$

Performing the operation $R_1 + (-2)R_3 \rightarrow R_1$, we get the following:

$\begin{bmatrix} 1 & 4 & 0 &\bigm| & -5 \\ 0 & 1 & 0 &\bigm| & -4 \\ 0 & 0 & 1 &\bigm| & 3 \end{bmatrix}$

Performing the operation $R_1 + (-4)R_2 \rightarrow R_1$, we get the following:

$\begin{bmatrix} 1 & 0 & 0 &\bigm| & 11 \\ 0 & 1 & 0 &\bigm| & -4 \\ 0 & 0 & 1 &\bigm| & 3 \end{bmatrix}$

Now, we can derive the solution by mapping the variables to the values directly: $x = 11$, $y = -4$, and $z = 3$.
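The Gauss-Jordan elimination of Example-2 can be sketched in Python as follows. The function name `gauss_jordan` is hypothetical, and the sketch clears each pivot column in one pass, so the order of row operations differs from the hand calculation above while the reduced row echelon form is the same.

```python
from fractions import Fraction


def gauss_jordan(aug):
    """Reduce an augmented matrix (a list of rows) to reduced row echelon form."""
    m = [[Fraction(v) for v in row] for row in aug]
    rows, cols = len(m), len(m[0]) - 1  # the last column holds the constants
    pivot_row = 0
    for col in range(cols):
        pivot = next((r for r in range(pivot_row, rows) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        # Scale the pivot row so that the pivot becomes 1.
        p = m[pivot_row][col]
        m[pivot_row] = [v / p for v in m[pivot_row]]
        # Clear every other entry in the pivot column (above and below).
        for r in range(rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return m


example_2 = [[1, 4, 2, 1], [2, 5, 1, 5], [4, 10, -1, 1]]
reduced = gauss_jordan(example_2)
x, y, z = (row[-1] for row in reduced)
print(x, y, z)  # 11 -4 3
```

Exact fractions are used here instead of floating point so that the printed values match the hand-derived solution without rounding.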

Inversion of Matrix

The multiplicative Inverse of a matrix $\textbf{A}$, denoted as $\textbf{A}^{-1}$, is another matrix, such that the multiplication of $\textbf{A}$ and its inverse $\textbf{A}^{-1}$, in either order, produces an identity matrix. In other words, $\textbf{A}^{-1}\textbf{A} = \textbf{A}\textbf{A}^{-1} = \mathbf{I}$.

Just like in algebra, if we know the values for the two matrices $\textbf{A}$ and $\textbf{B}$ and we are given $\textbf{A} \textbf{X} = \textbf{B}$, then we can solve for matrix $\textbf{X}$ using the multiplicative inverse $\textbf{A}^{-1}$.

In other words:

$\textbf{A}\textbf{X} = \textbf{B}$

Multiplying both sides on the left by the multiplicative inverse $\textbf{A}^{-1}$, we get:

$\textbf{A}^{-1}\textbf{A}\textbf{X} = \textbf{A}^{-1}\textbf{B}$

We know $\textbf{A}^{-1}\textbf{A} = \mathbf{I}$

Therefore, $\mathbf{I}\textbf{X} = \textbf{A}^{-1}\textbf{B}$

We also know $\mathbf{I}\textbf{X} = \textbf{X}$

Hence, $\textbf{X} = \textbf{A}^{-1}\textbf{B}$

Note that ONLY square matrices have multiplicative inverses and not all square matrices have inverses.

The following is an example of a multiplicative inverse for a 2 x 2 square matrix:

$\textbf{A} = \begin{bmatrix} -1 & 2 \\ -1 & 1 \end{bmatrix}$

$\textbf{A}^{-1} = \begin{bmatrix} 1 & -2 \\ 1 & -1 \end{bmatrix}$

$\textbf{A}\textbf{A}^{-1} = \begin{bmatrix} -1 & 2 \\ -1 & 1 \end{bmatrix} \begin{bmatrix} 1 & -2 \\ 1 & -1 \end{bmatrix} = \begin{bmatrix} (-1)(1)+(2)(1) & (-1)(-2)+(2)(-1) \\ (-1)(1)+(1)(1) & (-1)(-2)+(1)(-1) \end{bmatrix} = \begin{bmatrix} -1+2 & 2-2 \\ -1+1 & 2-1 \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \mathbf{I_2}$
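The 2 x 2 example can be checked with a few lines of Python. This is only a sketch: the helper `matmul` is an illustrative name, and the right-hand side `B` is a hypothetical matrix (not from the text) chosen to show the result $\textbf{X} = \textbf{A}^{-1}\textbf{B}$ in action.

```python
def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]


A = [[-1, 2], [-1, 1]]
A_inv = [[1, -2], [1, -1]]
identity = [[1, 0], [0, 1]]

# The product is the identity matrix in either order.
print(matmul(A, A_inv) == identity)  # True
print(matmul(A_inv, A) == identity)  # True

# Hypothetical right-hand side B, used to illustrate X = A^{-1} B.
B = [[3], [1]]
X = matmul(A_inv, B)
print(X)                  # [[1], [2]]
print(matmul(A, X) == B)  # True
```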

Let us look at an example of finding the multiplicative inverse of a matrix.

Example-3: Find the multiplicative inverse for the matrix: $\textbf{A} = \begin{bmatrix} 1 & 4 \\ -1 & -3 \end{bmatrix}$

Let $\textbf{A}^{-1} = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$

We know $\textbf{A}\textbf{A}^{-1} = \begin{bmatrix} 1 & 4 \\ -1 & -3 \end{bmatrix}\begin{bmatrix} a & b \\ c & d \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$

That is, $\textbf{A}\textbf{A}^{-1} = \begin{bmatrix} a+4c & b+4d \\ -a-3c & -b-3d \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$

Equating the corresponding entries, we get the following system of equations:

$\begin{align*} & a + 4c = 1 \\ & -a - 3c = 0 \\ & b + 4d = 0 \\ & -b - 3d = 1 \end{align*}$

Solving the equations $a + 4c = 1$ and $-a - 3c = 0$, we get: $a = -3$ and $c = 1$

Similarly, solving the equations $b + 4d = 0$ and $-b - 3d = 1$, we get: $b = -4$ and $d = 1$

Therefore, $\textbf{A}^{-1} = \begin{bmatrix} -3 & -4 \\ 1 & 1 \end{bmatrix}$

In Example-3 above, there were two systems of equations to solve: one for $a$ and $c$, the other for $b$ and $d$.

The coefficients of both systems of equations are the same; the only difference is in the constant values, which come from the corresponding column of the identity matrix.

By adjoining the coefficient matrix with the identity matrix, one could use the Gauss-Jordan elimination process on this combined matrix to arrive at the inverse of the coefficient matrix.

We use the Gauss-Jordan elimination on the adjoined matrix in the following example.

Example-4: Find the multiplicative inverse for the matrix: $\textbf{A} = \begin{bmatrix} 1 & 4 \\ -1 & -3 \end{bmatrix}$ by adjoining the coefficient matrix with the identity matrix

Given $\textbf{A} = \begin{bmatrix} 1 & 4 \\ -1 & -3 \end{bmatrix}$

The adjoined matrix is $\begin{bmatrix} 1 & 4 & 1 & 0 \\ -1 & -3 & 0 & 1\end{bmatrix}$

We will use the Gauss-Jordan Elimination process on the adjoined matrix.

Performing the operation $R_2 + R_1 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & 4 & 1 & 0 \\ 0 & 1 & 1 & 1\end{bmatrix}$

Performing the operation $R_1 + (-4)R_2 \rightarrow R_1$, we get the following:

$\begin{bmatrix} 1 & 0 & -3 & -4 \\ 0 & 1 & 1 & 1\end{bmatrix}$

Since the above adjoined matrix is in the form $\begin{bmatrix} \mathbf{I} & \textbf{A}^{-1} \end{bmatrix}$, we are done. In other words, when the identity matrix appears on the left of the resulting adjoined matrix as above, the multiplicative inverse is on the right.

Therefore, $\textbf{A}^{-1} = \begin{bmatrix} -3 & -4 \\ 1 & 1 \end{bmatrix}$
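The adjoining approach of Example-4 can be sketched in Python. The function name `inverse` is illustrative, and the sketch assumes a square input matrix with exact `Fraction` arithmetic; it raises an error when no pivot can be found, which is the case where the matrix has no inverse.

```python
from fractions import Fraction


def inverse(a):
    """Invert a square matrix by Gauss-Jordan elimination on [A | I]."""
    n = len(a)
    # Adjoin the identity matrix to the right of A.
    m = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(a)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular and has no inverse")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so that the pivot becomes 1.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        # Clear the pivot column in every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # The left half is now I; the right half is the inverse.
    return [row[n:] for row in m]


print(inverse([[1, 4], [-1, -3]]) == [[-3, -4], [1, 1]])  # True
```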

Let us look at another example, this time finding the multiplicative inverse of a 3 x 3 matrix by adjoining the identity matrix.

Example-5: Find the multiplicative inverse for the matrix: $\textbf{A} = \begin{bmatrix} 1 & -1 & 0 \\ 1 & 0 & -1 \\ -6 & 2 & 3 \end{bmatrix}$

Given $\textbf{A} = \begin{bmatrix} 1 & -1 & 0 \\ 1 & 0 & -1 \\ -6 & 2 & 3 \end{bmatrix}$

The adjoined matrix is $\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 1 & 0 & -1 & 0 & 1 & 0 \\ -6 & 2 & 3 & 0 & 0 & 1 \end{bmatrix}$

We will use the Gauss-Jordan Elimination process on the adjoined matrix.

Performing the operation $R_2 + (-1)R_1 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 0 & 1 & -1 & -1 & 1 & 0 \\ -6 & 2 & 3 & 0 & 0 & 1 \end{bmatrix}$

Performing the operation $R_3 + 6R_1 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 0 & 1 & -1 & -1 & 1 & 0 \\ 0 & -4 & 3 & 6 & 0 & 1 \end{bmatrix}$

Performing the operation $R_3 + 4R_2 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 0 & 1 & -1 & -1 & 1 & 0 \\ 0 & 0 & -1 & 2 & 4 & 1 \end{bmatrix}$

Performing the operation $(-1)R_3 \rightarrow R_3$, we get the following:

$\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 0 & 1 & -1 & -1 & 1 & 0 \\ 0 & 0 & 1 & -2 & -4 & -1 \end{bmatrix}$

Performing the operation $R_2 + R_3 \rightarrow R_2$, we get the following:

$\begin{bmatrix} 1 & -1 & 0 & 1 & 0 & 0 \\ 0 & 1 & 0 & -3 & -3 & -1 \\ 0 & 0 & 1 & -2 & -4 & -1 \end{bmatrix}$

Performing the operation $R_1 + R_2 \rightarrow R_1$, we get the following:

$\begin{bmatrix} 1 & 0 & 0 & -2 & -3 & -1 \\ 0 & 1 & 0 & -3 & -3 & -1 \\ 0 & 0 & 1 & -2 & -4 & -1 \end{bmatrix}$

Since the above adjoined matrix is in the form $\begin{bmatrix} \mathbf{I} & \textbf{A}^{-1} \end{bmatrix}$, we are done.

Therefore, $\textbf{A}^{-1} = \begin{bmatrix} -2 & -3 & -1 \\ -3 & -3 & -1 \\ -2 & -4 & -1 \end{bmatrix}$
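A result obtained by elimination is easy to validate by multiplying it back against the original matrix. The sketch below does this for the matrix of Example-5; the helper names `matmul` and `is_inverse` are illustrative, and the candidate takes its third row $-2, -4, -1$ from the $(-1)R_3 \rightarrow R_3$ step, which is not changed by any later row operation.

```python
def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]


def is_inverse(a, candidate):
    """True if candidate multiplied with a (in both orders) gives the identity."""
    n = len(a)
    identity = [[int(i == j) for j in range(n)] for i in range(n)]
    return matmul(a, candidate) == identity and matmul(candidate, a) == identity


A = [[1, -1, 0], [1, 0, -1], [-6, 2, 3]]
candidate = [[-2, -3, -1], [-3, -3, -1], [-2, -4, -1]]
print(is_inverse(A, candidate))  # True
```

Flipping the signs of the third row of the candidate makes the check fail, so a sign slip in the last steps of the elimination would be caught by this test.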

Orthogonal Matrix

An Orthogonal matrix is a square matrix $\textbf{A}$ that has the property $\textbf{A}^T\textbf{A} = \textbf{A}\textbf{A}^T = \textbf{I}$. This also means $\textbf{A}^T = \textbf{A}^{-1}$.

The following is an example of a 3 x 3 orthogonal matrix:

$\begin{bmatrix} 1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$
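The defining property can be tested directly, as in the following Python sketch. The helper names are illustrative; the sketch assumes a square matrix with integer entries, so exact equality is safe (with floating point entries a tolerance would be needed).

```python
def transpose(a):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*a)]


def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]


def is_orthogonal(a):
    """True if A^T A and A A^T both equal the identity matrix."""
    n = len(a)
    identity = [[int(i == j) for j in range(n)] for i in range(n)]
    at = transpose(a)
    return matmul(at, a) == identity and matmul(a, at) == identity


Q = [[1, 0, 0], [0, -1, 0], [0, 0, 1]]
print(is_orthogonal(Q))                     # True
print(is_orthogonal([[1, 4], [-1, -3]]))    # False
```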

Determinant of Matrix

The Determinant of a square matrix $\textbf{A}$, denoted as $det(\textbf{A})$ or $\lvert \textbf{A} \rvert$, is a scalar (a single real number) that provides some insights about the matrix.

Note that the determinant of a matrix is defined ONLY for a square matrix.

For a matrix $\textbf{A}$, if the determinant $det(\textbf{A}) = 0$, then it implies that the matrix $\textbf{A}$ is a Singular matrix (meaning the matrix has columns or rows that are linearly dependent) and that the matrix is NOT invertible (meaning $\textbf{A}^{-1}$ does not exist).

The determinant of a 2 x 2 matrix $\textbf{A} = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$ can be computed as: $det(\textbf{A}) = ad - bc$.

Consider the following 2 x 2 matrix:

$\textbf{A} = \begin{bmatrix} -2 & 1 \\ -4 & 2 \end{bmatrix}$

The determinant $det(\textbf{A}) = (-2) \cdot 2 - 1 \cdot (-4) = -4 + 4 = 0$

Notice how the first column of the matrix $\textbf{A}$ is a multiple ($-2$ times) of the second column. This implies that the columns of the matrix $\textbf{A}$ are linearly dependent (or matrix $\textbf{A}$ is a singular matrix).

Now, consider the following 2 x 2 matrix:

$\textbf{B} = \begin{bmatrix} 2 & -3 \\ 1 & 2 \end{bmatrix}$

The determinant $det(\textbf{B}) = 2 \cdot 2 - (-3) \cdot 1 = 4 + 3 = 7$
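The two determinants above can be reproduced with a small Python sketch of the $ad - bc$ formula; the function name `det2` is made up for this illustration.

```python
def det2(m):
    """Determinant of a 2 x 2 matrix [[a, b], [c, d]] computed as ad - bc."""
    (a, b), (c, d) = m
    return a * d - b * c


A = [[-2, 1], [-4, 2]]
B = [[2, -3], [1, 2]]
print(det2(A))  # 0, so A is singular
print(det2(B))  # 7, so B is invertible
```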

To find the determinant of a higher dimension matrix, we use a recursive approach.

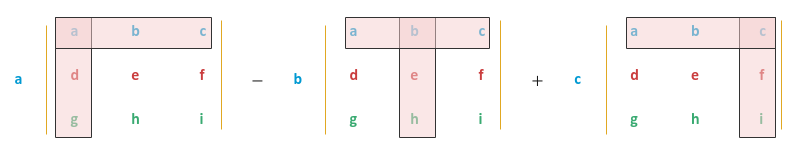

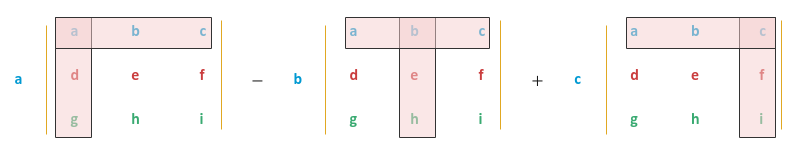

Consider the 3 x 3 matrix $\textbf{A} = \begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix}$.

The determinant $det(\textbf{A}) = a \begin{vmatrix} e & f \\ h & i \end{vmatrix} - b \begin{vmatrix} d & f \\ g & i \end{vmatrix} + c \begin{vmatrix} d & e \\ g & h \end{vmatrix}$

That is, $det(\textbf{A}) = a (ei - fh) - b (di - fg) + c (dh - eg)$

The following is a pictorial illustration for finding the determinant of a 3 x 3 matrix:

Now, consider the 4 x 4 matrix $\textbf{B} = \begin{bmatrix} a & b & c & d \\ e & f & g & h \\ i & j & k & l \\ m & n & o & p \end{bmatrix}$.

The determinant $det(\textbf{B}) = a \begin{vmatrix} f & g & h \\ j & k & l \\ n & o & p \end{vmatrix} - b \begin{vmatrix} e & g & h \\ i & k & l \\ m & o & p \end{vmatrix} + c \begin{vmatrix} e & f & h \\ i & j & l \\ m & n & p \end{vmatrix} - d \begin{vmatrix} e & f & g \\ i & j & k \\ m & n & o \end{vmatrix}$

That is, $det(\textbf{B}) = a [f (kp - lo) - g (jp - ln) + h (jo - kn)] - b [e (kp - lo) - g (ip - lm) + h (io - km)] + c [e (jp - ln) - f (ip - lm) + h (in - jm)] - d [e (jo - kn) - f (io - km) + g (in - jm)]$

Notice that the signs of the successive terms (each an element of the first row multiplied by the determinant of its sub-matrix) alternate, starting with +ve: +ve, -ve, +ve, -ve, and so on.

Let us look at an example for a 3 x 3 matrix.

Example-6: Find the determinant of the matrix: $\textbf{A} = \begin{bmatrix} 0 & 2 & 1 \\ 3 & -1 & 2 \\ 4 & 0 & 1 \end{bmatrix}$

Given $\textbf{A} = \begin{bmatrix} 0 & 2 & 1 \\ 3 & -1 & 2 \\ 4 & 0 & 1 \end{bmatrix}$

The determinant $det(\textbf{A}) = 0 \cdot \begin{vmatrix} -1 & 2 \\ 0 & 1 \end{vmatrix} - 2 \cdot \begin{vmatrix} 3 & 2 \\ 4 & 1 \end{vmatrix} + 1 \cdot \begin{vmatrix} 3 & -1 \\ 4 & 0 \end{vmatrix}$

That is, $det(\textbf{A}) = 0 \cdot ((-1) \cdot 1 - 2 \cdot 0) - 2 \cdot (3 \cdot 1 - 2 \cdot 4) + 1 \cdot (3 \cdot 0 - (-1) \cdot 4) = 0 - 2 \cdot (-5) + 1 \cdot 4 = 14$

Therefore, $det(\textbf{A}) = 14$
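The recursive expansion along the first row can be sketched in Python as follows. The function name `determinant` is illustrative, and the sketch assumes a square matrix given as a list of rows; it is meant to mirror the hand calculation, not to be efficient for large matrices.

```python
def determinant(m):
    """Determinant by recursive expansion along the first row."""
    n = len(m)
    if n == 1:
        return m[0][0]
    if n == 2:
        return m[0][0] * m[1][1] - m[0][1] * m[1][0]
    total = 0
    for j in range(n):
        # Sub-matrix obtained by removing the first row and column j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        # Signs alternate along the first row: +, -, +, -, ...
        sign = -1 if j % 2 else 1
        total += sign * m[0][j] * determinant(minor)
    return total


print(determinant([[0, 2, 1], [3, -1, 2], [4, 0, 1]]))  # 14
```

The same function handles the 4 x 4 case, since each 3 x 3 sub-matrix is expanded by a further recursive call.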

References