| PolarSPARC |

Basics of Natural Language Processing using NLTK

| Bhaskar S | 01/22/2023 |

Overview

Natural Language Processing (or NLP for short) deals with the processing and analysis of unstructured language interactions between computer systems and humans, with the intent of building interactive real-world applications that can interpret the context of those interactions and react accordingly.

The following are some of the common use-cases for NLP:

Customer Service Chatbots

Customer Sentiment Analysis

Semantic Search Engines

Spam Detection

Task Oriented Virtual Assistants

Natural Language Toolkit (or NLTK for short) is one of the popular Python based open source natural language processing libraries for performing various language processing tasks.

Installation and Setup

Installation is assumed to be on a Linux desktop running Ubuntu 22.04 LTS using the user alice. Also, it is assumed that Python 3 is set up in a Virtual Environment.

Open a Terminal window in which we will execute the various commands.

Let us first create a directory named /home/alice/nltk_data by executing the following command in the Terminal:

$ mkdir -p $HOME/nltk_data

Now, to install a few Python packages (nltk, jupyter, scikit-learn, and wordcloud), execute the following commands in the Terminal:

$ pip install nltk

$ pip install jupyter

$ pip install scikit-learn

$ pip install wordcloud

Next, we need to download and set up all the data packages provided by NLTK.

Execute the following command in the Terminal:

$ python

The prompt will change to >>> indicating the Python REPL mode.

Execute the following commands in the Python REPL:

>>> import nltk

>>> nltk.download()

The following would be the typical output:

NLTK Downloader

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

The prompt will change to Downloader> indicating the NLTK REPL mode.

Type the letter c and press ENTER in the NLTK REPL as shown below:

Downloader> c

The following would be the typical output:

Data Server:

- URL: <https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml>

- 7 Package Collections Available

- 111 Individual Packages Available

Local Machine:

- Data directory: /usr/local/share/nltk_data

The prompt will change to Config> indicating the NLTK Config REPL mode.

Type the letter d and press ENTER in the NLTK Config REPL as shown below:

Config> d

The prompt will change to New Directory>, where we will type the NLTK data directory and press ENTER as shown below:

New Directory> /home/alice/nltk_data

The prompt will change to Config> indicating the NLTK Config REPL mode.

Type the letter m and press ENTER in the NLTK Config REPL as shown below:

Config> m

The following would be the typical output:

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

The prompt will change to Downloader> indicating the NLTK REPL mode.

Type the letter l and press ENTER in the NLTK REPL as shown below:

Downloader> l

The following would be the typical output:

Packages:

[ ] abc................. Australian Broadcasting Commission 2006

[ ] alpino.............. Alpino Dutch Treebank

[ ] averaged_perceptron_tagger Averaged Perceptron Tagger

[ ] averaged_perceptron_tagger_ru Averaged Perceptron Tagger (Russian)

[ ] basque_grammars..... Grammars for Basque

[ ] biocreative_ppi..... BioCreAtIvE (Critical Assessment of Information

Extraction Systems in Biology)

[ ] bllip_wsj_no_aux.... BLLIP Parser: WSJ Model

[ ] book_grammars....... Grammars from NLTK Book

[ ] brown............... Brown Corpus

[ ] brown_tei........... Brown Corpus (TEI XML Version)

[ ] cess_cat............ CESS-CAT Treebank

[ ] cess_esp............ CESS-ESP Treebank

[ ] chat80.............. Chat-80 Data Files

[ ] city_database....... City Database

[ ] cmudict............. The Carnegie Mellon Pronouncing Dictionary (0.6)

[ ] comparative_sentences Comparative Sentence Dataset

[ ] comtrans............ ComTrans Corpus Sample

[ ] conll2000........... CONLL 2000 Chunking Corpus

[ ] conll2002........... CONLL 2002 Named Entity Recognition Corpus

Hit Enter to continue:

Press ENTER each time to page through the various NLTK packages. At the end, one would see an output as shown below:

Collections:

[ ] all-corpora......... All the corpora

Hit Enter to continue:

[ ] all-nltk............ All packages available on nltk_data gh-pages

branch

[ ] all................. All packages

[ ] book................ Everything used in the NLTK Book

[ ] popular............. Popular packages

[ ] tests............... Packages for running tests

[ ] third-party......... Third-party data packages

([*] marks installed packages)

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Type the letter d and press ENTER in the NLTK REPL as shown below:

Downloader> d

The following would be the typical output:

-Download which package (l=list; x=cancel)?

The prompt will change to Identifier> indicating the NLTK Download REPL mode.

Type the word all and press ENTER in the NLTK Download REPL as shown below:

Identifier> all

This will download and set up all the NLTK data packages. At the end, the following would be the typical output:

Downloading collection 'all'

|

| Downloading package abc to /home/alice/nltk_data...

| Unzipping corpora/abc.zip.

| Downloading package alpino to /home/alice/nltk_data...

| Unzipping corpora/alpino.zip.

| Downloading package averaged_perceptron_tagger to /home/alice/nltk_data...

| Unzipping taggers/averaged_perceptron_tagger.zip.

... SNIP ...

| Downloading package wordnet_ic to /home/alice/nltk_data...

| Unzipping corpora/wordnet_ic.zip.

| Downloading package words to /home/alice/nltk_data...

| Unzipping corpora/words.zip.

| Downloading package ycoe to /home/alice/nltk_data...

| Unzipping corpora/ycoe.zip.

|

Done downloading collection all

---------------------------------------------------------------------------

d) Download l) List u) Update c) Config h) Help q) Quit

---------------------------------------------------------------------------

Type the letter q and press ENTER in the NLTK REPL as shown below:

Downloader> q

The prompt will change to >>> indicating the Python REPL mode.

Execute the following command in the Python REPL to exit:

>>> exit()

We are now DONE with the installation and setup process.
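The interactive steps above can also be scripted. The following is a minimal sketch, assuming the same data directory under the home directory of the user; the helper name download_packages is hypothetical (it is not part of NLTK), while nltk.download() with its download_dir and quiet parameters is the library call doing the actual work:

```python
from pathlib import Path

import nltk


def download_packages(package_ids, data_dir):
    """Download each NLTK data package into data_dir.

    Returns the list of package ids that could not be downloaded.
    """
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    failed = []
    for package_id in package_ids:
        # nltk.download() returns True on success and a falsy value otherwise
        if not nltk.download(package_id, download_dir=str(data_dir), quiet=True):
            failed.append(package_id)
    return failed


# Example usage (needs network access), left commented out on purpose:
# download_packages(['all'], Path.home() / 'nltk_data')
```

Passing a smaller collection identifier such as 'popular' instead of 'all' would download fewer packages.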

Hands-on NLTK

All the commands in this hands-on section will be run in a Jupyter notebook.

To launch the Jupyter Notebook, execute the following command in the Terminal:

$ jupyter notebook

To load the nltk Python module, run the following statement in the Jupyter cell:

import nltk

To set the correct path to the nltk data packages, run the following statement in the Jupyter cell:

nltk.data.path.append("/home/alice/nltk_data")

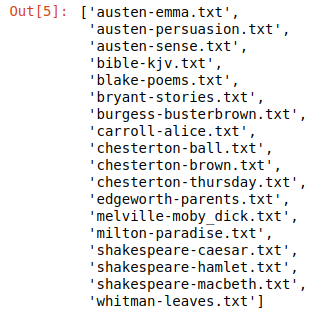

To list all the texts from Project Gutenberg included in nltk, run the following statement in the Jupyter cell:

nltk.corpus.gutenberg.fileids()

The following would be a typical output:

Tokenization is the process of breaking a text into either its sentences or its words. For example, the text Alice in the Wonderland is tokenized into a list of words - ['Alice', 'in', 'the', 'Wonderland'].
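As a quick illustration of word tokenization, here is a minimal sketch using wordpunct_tokenize from nltk.tokenize. Note that this is a simpler, regular expression based tokenizer swapped in for illustration because it needs no downloaded data packages; the second sample sentence is made up:

```python
from nltk.tokenize import wordpunct_tokenize

# Splits on whitespace and separates punctuation from words
title_tokens = wordpunct_tokenize('Alice in the Wonderland')
print(title_tokens)   # ['Alice', 'in', 'the', 'Wonderland']

sample_tokens = wordpunct_tokenize('Hello, world!')
print(sample_tokens)  # ['Hello', ',', 'world', '!']
```

Notice that the punctuation marks come out as separate tokens, which is why a later cleanup step is usually needed.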

To load and get all the words from the text Alice in the Wonderland included in nltk, run the following statement in the Jupyter cell:

words = nltk.corpus.gutenberg.words('carroll-alice.txt')

In this demo, we will use a custom text file, charles-christmas_carol.txt, containing A Christmas Carol by Charles Dickens from Project Gutenberg.

To load the custom text charles-christmas_carol.txt, convert it to lower case, and tokenize it into words, run the following statements in the Jupyter cell:

from nltk.tokenize import word_tokenize

with open('txt_data/charles-christmas_carol.txt') as fcc:
    all_tokens = word_tokenize(fcc.read().lower())

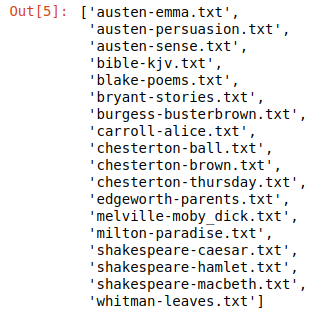

Stop Words are the commonly used filler words (such as 'a', 'and', 'on', etc.) that add little value to the context of the text.

To display all the Stop Words from the English language defined in nltk, run the following statements in the Jupyter cell:

from nltk.corpus import stopwords

stop_words = stopwords.words('english')

stop_words

The following would be a typical output showing an initial subset of entries:

Normalization is the process of cleaning the text to make it more uniform, such as removing the punctuation and the Stop Words.

To remove all the punctuation from our custom text, run the following statement in the Jupyter cell:

cc_tokens = [word for word in all_tokens if word.isalpha()]

To remove all the Stop Words from our custom text, run the following statement in the Jupyter cell:

all_words = [word for word in cc_tokens if word not in stop_words]

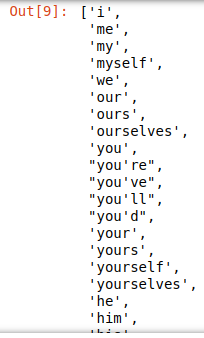

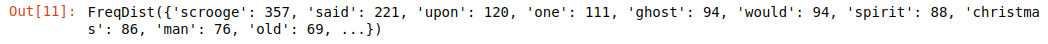
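To see the two normalization steps on a small scale, here is a minimal sketch using a hand-picked token list and a tiny, hypothetical set of stop words (not the full list from nltk). Holding the stop words in a set is an optional choice that makes the membership test faster on large texts:

```python
# Sample tokens, as a tokenizer might produce them (made up for illustration)
sample_tokens = ['marley', 'was', 'dead', ':', 'to', 'begin', 'with', '.']

# Tiny stand-in for stopwords.words('english'); an assumption for this sketch
sample_stop_words = {'was', 'to', 'with'}

# Step 1: keep only the purely alphabetic tokens (drops ':' and '.')
alpha_tokens = [word for word in sample_tokens if word.isalpha()]

# Step 2: drop the stop words
kept_words = [word for word in alpha_tokens if word not in sample_stop_words]

print(alpha_tokens)  # ['marley', 'was', 'dead', 'to', 'begin', 'with']
print(kept_words)    # ['marley', 'dead', 'begin']
```

Keep in mind that isalpha() also discards tokens that contain digits or apostrophes, not just punctuation marks.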

Frequency Distribution is the count of each of the words from a text.
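A minimal sketch on a made-up word list shows what nltk.FreqDist computes; the sample words are assumptions chosen only for illustration:

```python
import nltk

sample_words = ['ghost', 'scrooge', 'ghost', 'spirit', 'scrooge', 'ghost', 'chain']

# FreqDist counts how many times each word occurs
sample_fd = nltk.FreqDist(sample_words)

ghost_count = sample_fd['ghost']
total_count = sample_fd.N()
top_two = sample_fd.most_common(2)

print(ghost_count)  # 3
print(total_count)  # 7 (total number of words counted)
print(top_two)      # [('ghost', 3), ('scrooge', 2)]
```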

To display the Frequency Distribution of the words from our custom text, run the following statements in the Jupyter cell:

all_fd = nltk.FreqDist(all_words)

all_fd

The following would be a typical output showing a subset of entries:

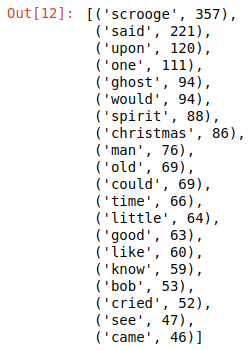

To display the 20 most commonly used words from our custom text, run the following statement in the Jupyter cell:

all_fd.most_common(20)

The following would be a typical output:

Hapaxes are the unique words that occur only once in the entire text.
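As a small sketch with a made-up word list, the hapaxes() method returns exactly those words whose count is one, which is equivalent to filtering the counts by hand:

```python
import nltk

sample_fd = nltk.FreqDist(['ghost', 'scrooge', 'ghost', 'spirit', 'scrooge', 'chain'])

# Words that occur exactly once
sample_hapaxes = sample_fd.hapaxes()

# The same result, computed manually from the counts
manual_hapaxes = [word for word, count in sample_fd.items() if count == 1]

print(sample_hapaxes)  # ['spirit', 'chain']
```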

To display all the Hapaxes from our custom text, run the following statements in the Jupyter cell:

single_words = all_fd.hapaxes()

single_words

The following would be a typical output showing an initial subset of entries:

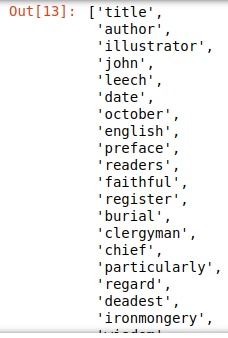

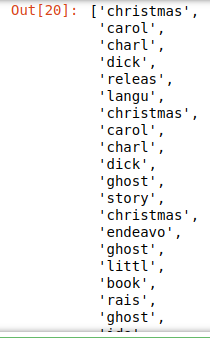

Stemming is the process of reducing words to their root forms. For example, the words walked and walking will be reduced to the root word walk.
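The example above can be checked with a minimal sketch that uses the Lancaster stemmer (one of several stemmers available in nltk) on just those two words:

```python
from nltk.stem import LancasterStemmer

stemmer = LancasterStemmer()

# Map each sample word to its stem
stems = {word: stemmer.stem(word) for word in ['walked', 'walking']}

print(stems)  # {'walked': 'walk', 'walking': 'walk'}
```

Stemming is rule based, so for other words the resulting stem is not always a proper dictionary word.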

To create and display the root words for all the words from our custom text, run the following statements in the Jupyter cell:

lancaster = nltk.LancasterStemmer()

cc_stems = [lancaster.stem(word) for word in all_words]

cc_stems

The following would be a typical output showing an initial subset of entries:

Parts of Speech are part of a language's grammar and indicate the role of a word in the text.

The English language has 8 parts of speech, which are as follows:

| Part of Speech | Role | Example |

|---|---|---|

| Noun | A person, place, or thing | Alice, New York, Apple |

| Pronoun | Replaces a Noun | He, She, You |

| Adjective | Describes a Noun | Big, Colorful, Great |

| Verb | An action or state of being | Can, Go, Learn |

| Adverb | Describes a Verb, Adjective, or another Adverb | Always, Silently, Very |

| Preposition | Links a Noun or Pronoun to another word | At, From, To |

| Conjunction | Joins words or phrases | And, But, So |

| Interjection | An Exclamation | Oh, Ouch, Wow |

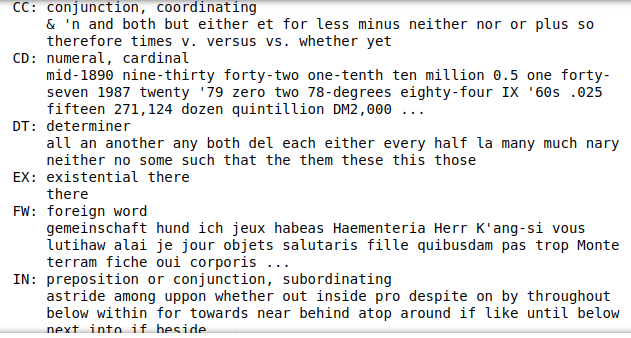

To display all the abbreviations of the different Parts of Speech from the English language defined in nltk, run the following statement in the Jupyter cell:

nltk.help.upenn_tagset()

The following would be a typical output showing a subset of entries:

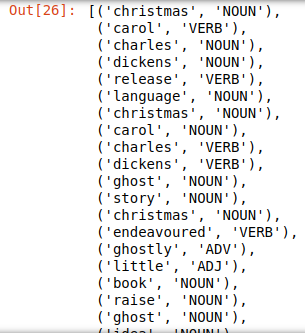

To determine and display the Parts of Speech for all the words from our custom text, run the following statements in the Jupyter cell:

cc_pos2 = nltk.pos_tag(all_words, tagset='universal')

cc_pos2

The following would be a typical output showing an initial subset of entries:

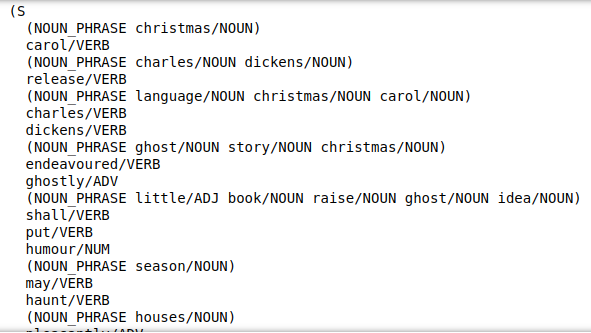
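For a smaller experiment, here is a hedged sketch that tags a short, hand-tokenized sentence. It assumes the tagger data packages are available on nltk.data.path; if they are not, nltk raises a LookupError, which the sketch catches so that the cell does not fail:

```python
import nltk

sample_tokens = ['Alice', 'likes', 'to', 'travel', 'to', 'New', 'York', 'City']

try:
    # Each entry of the result is a (word, tag) tuple
    sample_tags = nltk.pos_tag(sample_tokens, tagset='universal')
except LookupError as err:
    # Raised when a required data package cannot be found
    sample_tags = None
    print(f'Missing NLTK data: {err}')

if sample_tags is not None:
    for word, tag in sample_tags:
        print(f'{word:10} {tag}')
```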

Chunking is the process of identifying groups of words in a text that belong together, such as noun phrases or verb phrases. For example, given the text 'Alice likes to travel to New York City', word Tokenization will split 'New', 'York', and 'City' into separate words. With Chunking, they are grouped together, which makes more sense.

Chunking works on the words after they have been tagged with their corresponding Parts of Speech.
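To make the idea concrete, here is a minimal sketch that chunks a hand-tagged version of the example sentence. Both the tags and the grammar are assumptions made for this illustration: the tags were assigned by hand (not by a tagger), and the NOUN_PHRASE pattern is a hypothetical one, not necessarily the grammar used elsewhere in this article:

```python
import nltk

# Hand-tagged (word, tag) pairs using universal tags; an illustrative assumption
tagged = [('Alice', 'NOUN'), ('likes', 'VERB'), ('to', 'PRT'), ('travel', 'VERB'),
          ('to', 'ADP'), ('New', 'NOUN'), ('York', 'NOUN'), ('City', 'NOUN')]

# Hypothetical grammar: optional determiner, any adjectives, one or more nouns
grammar = r'NOUN_PHRASE: {<DET>?<ADJ>*<NOUN>+}'
chunk_parser = nltk.RegexpParser(grammar)
tree = chunk_parser.parse(tagged)

# Collect the words of every NOUN_PHRASE subtree
phrases = [' '.join(word for word, tag in subtree.leaves())
           for subtree in tree.subtrees(lambda t: t.label() == 'NOUN_PHRASE')]

print(phrases)  # ['Alice', 'New York City']
```

The three consecutive nouns end up in a single chunk, which is the grouping that plain word Tokenization cannot provide.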

To determine and display the noun phrases for all the words (with their Parts of Speech tagged) from our custom text, run the following statements in the Jupyter cell:

parser = nltk.RegexpParser(r'NOUN_PHRASE: {

cc_chunks = parser.parse(cc_pos2)

print(cc_chunks)

The following would be a typical output showing an initial subset of entries:

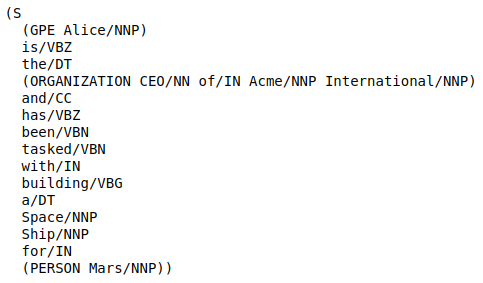

Named Entity Recognition is the process of identifying named entities such as people, locations, organizations, etc.

Like Chunking, the Named Entity Recognition works on the words after they have been tagged with their corresponding Parts of Speech.

To determine and display the named entities for a given simple text, run the following statements in the Jupyter cell:

text = 'Alice is the CEO of Acme International and has been tasked with building a Space Ship for Mars'

words = nltk.word_tokenize(text)

tags = nltk.pos_tag(words)

ner = nltk.ne_chunk(tags)

print(ner)

The following would be a typical output showing all of the entries:

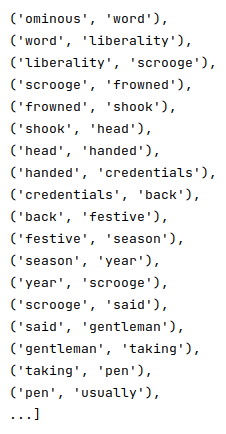
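The result of nltk.ne_chunk is a tree in which each recognized entity is a labeled subtree. The following sketch shows one way to pull the entities out of such a tree; the helper extract_entities is hypothetical, and the sample tree is built by hand for illustration only (it is NOT actual ne_chunk output):

```python
from nltk import Tree


def extract_entities(tree):
    """Return (label, text) pairs for each labeled chunk directly under the root."""
    entities = []
    for node in tree:
        # Plain (word, tag) tuples are untagged words; Tree nodes are entities
        if isinstance(node, Tree):
            text = ' '.join(word for word, tag in node.leaves())
            entities.append((node.label(), text))
    return entities


# Hand-built tree for illustration only
sample_tree = Tree('S', [
    Tree('PERSON', [('Alice', 'NNP')]),
    ('works', 'VBZ'),
    ('at', 'IN'),
    Tree('ORGANIZATION', [('Acme', 'NNP'), ('International', 'NNP')]),
])

entities = extract_entities(sample_tree)
print(entities)  # [('PERSON', 'Alice'), ('ORGANIZATION', 'Acme International')]
```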

Until now, we have been processing words one at a time (referred to as Unigrams). Sometimes we may have to process words two at a time (referred to as Bigrams) or words three at a time (referred to as Trigrams) to extract the intent of the text. A more generic way of processing n-words at a time is referred to as n-grams.

To find and display the bigrams from our custom text, run the following statements in the Jupyter cell:

from nltk.util import ngrams

all_bigrams = list(ngrams(all_words, 2))

all_bigrams

The following would be a typical output showing an initial subset of entries:

The second argument in ngrams(all_words, 2) indicates the number of words to consider at a time.
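A minimal sketch on a short, made-up word list shows how changing that argument moves from bigrams to trigrams:

```python
from nltk.util import ngrams

sample_words = ['marley', 'was', 'dead', 'to', 'begin']

# ngrams() returns a generator, so wrap it in list() to see the tuples
sample_bigrams = list(ngrams(sample_words, 2))
sample_trigrams = list(ngrams(sample_words, 3))

print(sample_bigrams)
# [('marley', 'was'), ('was', 'dead'), ('dead', 'to'), ('to', 'begin')]
print(sample_trigrams)
# [('marley', 'was', 'dead'), ('was', 'dead', 'to'), ('dead', 'to', 'begin')]
```

A list of 5 words yields 4 bigrams and 3 trigrams, since each n-gram is a sliding window over adjacent words.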
