| PolarSPARC |

Hands-on with eBPF

| Bhaskar S | 01/16/2022 |

Introduction

In large Enterprises that run an ecosystem of complex applications, it is a team of unsung heros that monitor, manage, and secure the application ecosystem to ensure smooth delivery of services to our end clients. The different components of the application ecosystem, the compute, the storage, the network, and the various application modules, are typically monitored and observed by different monitoring tools and it is the events, logs, and metrics from those monitoring tools that aid our unsung heros in keeping a close eye on the application ecosystem. Now, imagine our unsung heros are enabled with additional magical super powers, that allows them to trace, observe, monitor, and detect issues with any application stack, front to back, all the way through to the infrastructure components (including the OS), without installing any additional tool(s). Does this sound interesting and magical ???

Well, that magical super power comes from using the Extended Berkeley Packet Filter (or eBPF).

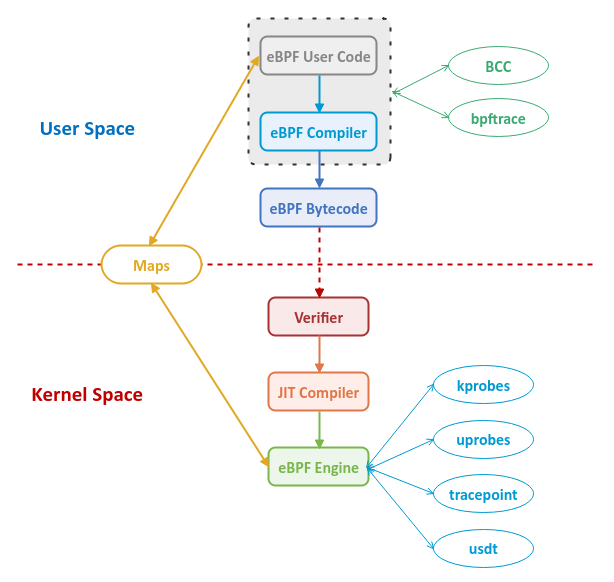

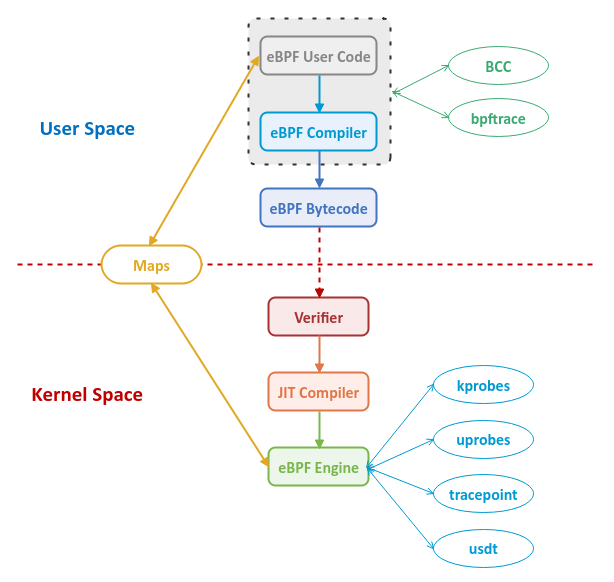

Berkeley Packet Filter (or BPF) was initially developed in 1992 for capturing and processing network packets in the user space. It was built in a way to use zero-data copy between the kernel space and the user space. The processing of the packets were performed by custom user code (called filters) written using the BPF instruction set, which was compiled and injected as bytecode into the kernel. The bytecode is then verified and executed by a secure register based virtual machine (runtime) in the kernel.

In 2013, enhancements were made to the BPF instruction set to take advantage of the modern hardware features and additional hooks were added into the kernel to generate various events so that BPF can attach to these events to support tracing (also referred to as snooping) as well as sampling (also referred to as profiling). These enhancements extended the usefulness of BPF to other aspects such as debugging, monitoring, performance analysis, etc., in addition to network packet filtering. In addition, the BPF just-in-time (JIT) compiler and the BPF runtime virtual machine were optimized for performance. With these enhancements in functionality, the new BPF capability was rebranded as Extended Berkeley Packet Filter (or eBPF) and was added to Linux in 2014.

Think of the custom eBPF user code running in the kernel analogous to Javascript running in a browser. User writes custom Javascript code, which is executed by the browser based on the different user actions/events such as clicking a button, etc. Similarly, eBPF allows users to write custom code, which gets executed in kernel space in a secure and restricted sandbox environment, without the need for writing kernel module programs or recompiling the kernel code.

In other words, an eBPF user code that is attached to a eBPF hook interception point along a certain code path in the kernel is executed, when an event generated by the kernel passes through that code path in the kernel.

The Linux kernel has enabled eBPF interception hooks at various points in the kernel (referred to as event sources) in order to enable tracing and sampling via instrumentation. The supported event sources are as follows:

kprobes :: enables dynamic tracing of the kernel functions and is often used to instrument the entry and exit of kernel functions

The uprobes :: enables dynamic tracing of the user (application and library) level functions and is often used to instrument the entry and exit of user (application) level functions

Dynamic probes are subject to *CHANGE* in the future releases, so use with *CAUTION*.

The tracepoint :: enables static tracing of the kernel functions

The usdt :: enables static tracing of the user (application) level functions

Static probes are well defined and maintained in all releases, so should be the preferred *CHOICE*.

The eBPF instruction set is simpler and much restricted in terms of features. In other words, it does NOT support structure types, loops, functions with variable arguments, etc., so one cannot write complex code that can corrupt or crash the kernel.

The eBPF kernel code verifier perform various code checks to ensure there are no security or stability issues with the user supplied code to run in the kernel. The code checks are performed before the user supplied eBPF code is loaded in the kernel.

How does an application in the user space connect to the eBPF operations in the kernal space ??? Well, it is via a system call called bpf() that is used for all eBPF related operations such as, loading the custom code, attaching the custom code to certain kernel event(s), creating eBPF storage data structures and accessing the contents in those storage data structures, etc. Only users with the CAP_SYS_ADMIN Linux capability can invoke the bpf() syscall. Hence, we typically run the eBPF tools or the eBPF enabled applications as the root user.

How does an application in the user space and the eBPF code in the kernel space share data between them ??? Well, this is where Maps come into play. Maps are generic data structures for storage of different types of data such as, arrays, key-value hash tables, etc.

Just like programming with x86 assembly language is hard and tedious, so is writing user code using the eBPF instruction set. Instead, the following two higher level abstractions approaches have been developed to ease the writing of eBPF code:

BPF Compiler Collection :: (or BCC) is a restricted C like programming environment for writing eBPF user code using the higher level programming languages like C++, Go, Python, etc.

The bpftrace :: provides a higher level abstraction for writing powerful one-liner scripts using a special high level eBPF language that is similar to awk

Now that we know of the different aspects of eBPF, the following diagram depicts the high level view of the eBPF ecosystem:

Installation

The setup will be on a Ubuntu 20.04 LTS based Linux desktop.

Open a terminal window and execute the following command:

$ sudo apt install bpfcc-tools bpftrace -y

The following would be a typical output:

Reading package lists... Done Building dependency tree Reading state information... Done The following packages were automatically installed and are no longer required: libhal1-flash libllvm10 libllvm10:i386 libllvm11 libllvm11:i386 libxdamage1:i386 mint-backgrounds-ulyssa mint-backgrounds-uma Use 'sudo apt autoremove' to remove them. The following additional packages will be installed: libbpfcc libclang1-9 python3-bpfcc The following NEW packages will be installed: bpfcc-tools bpftrace libbpfcc libclang1-9 python3-bpfcc 0 upgraded, 5 newly installed, 0 to remove and 0 not upgraded. Need to get 22.7 MB of archives. After this operation, 95.2 MB of additional disk space will be used. Get:1 http://archive.ubuntu.com/ubuntu focal/universe amd64 libbpfcc amd64 0.12.0-2 [14.9 MB] Get:2 http://archive.ubuntu.com/ubuntu focal/universe amd64 python3-bpfcc all 0.12.0-2 [31.3 kB] Get:3 http://archive.ubuntu.com/ubuntu focal/universe amd64 bpfcc-tools all 0.12.0-2 [579 kB] Get:4 http://archive.ubuntu.com/ubuntu focal/universe amd64 libclang1-9 amd64 1:9.0.1-12 [6,684 kB] Get:5 http://archive.ubuntu.com/ubuntu focal/universe amd64 bpftrace amd64 0.9.4-1 [457 kB] Fetched 22.7 MB in 1s (15.4 MB/s) Selecting previously unselected package libbpfcc. (Reading database ... 447751 files and directories currently installed.) Preparing to unpack .../libbpfcc_0.12.0-2_amd64.deb ... Unpacking libbpfcc (0.12.0-2) ... Selecting previously unselected package python3-bpfcc. Preparing to unpack .../python3-bpfcc_0.12.0-2_all.deb ... Unpacking python3-bpfcc (0.12.0-2) ... Selecting previously unselected package bpfcc-tools. Preparing to unpack .../bpfcc-tools_0.12.0-2_all.deb ... Unpacking bpfcc-tools (0.12.0-2) ... Selecting previously unselected package libclang1-9. Preparing to unpack .../libclang1-9_1%3a9.0.1-12_amd64.deb ... Unpacking libclang1-9 (1:9.0.1-12) ... Selecting previously unselected package bpftrace. Preparing to unpack .../bpftrace_0.9.4-1_amd64.deb ... Unpacking bpftrace (0.9.4-1) ... Setting up libclang1-9 (1:9.0.1-12) ... Setting up libbpfcc (0.12.0-2) ... Setting up python3-bpfcc (0.12.0-2) ... Setting up bpftrace (0.9.4-1) ... Setting up bpfcc-tools (0.12.0-2) ... Processing triggers for man-db (2.9.1-1) ... Processing triggers for libc-bin (2.31-0ubuntu9.2) ...

Hands-on eBPF

For the following sections, we will need two terminal windows for executing the various commands - we will refer to them as T1 and T2 respectively.

BCC Tools

BCC, by default, comes bundled with a set of pre-built tools to observe and measure the various aspects of the system. We will only look at some of the pre-built tools in this article.

Enterprise applications use some form of data persistence and this implies there is storage disk I/O involved. Many a times the storage disk I/O contributes to application latencies and therefore is one of the important components to look at during performance analysis. The biolatency-bpfcc tool traces the disk I/O, records the distribution of disk I/O latencies, and displays them as a histogram.

The following is the Go code listing StressDisk that will be used to stress the disk by doing random reads and writes on few files on the disk:

/*

* Description: Simple Go program used for Disk I/O stress

* Author: Bhaskar S

* Date: 01/15/2022

* Blog: https://www.polarsparc.com

*/

package main

import (

"log"

"math/rand"

"os"

"sync"

"time"

)

const maxLines = 1024

const maxSize = 4096

const maxCount = 1000000

const maxTasks = 8

const maxSleep = 100

const alphaNumChars = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"

var alphaNumLen = len(alphaNumChars)

var fileNames = []string{"/tmp/FILE_1.dat", "/tmp/FILE_2.dat", "/tmp/FILE_3.dat", "/tmp/FILE_4.dat"}

func randomString(s int) []byte {

b := make([]byte, s)

for i := 0; i < s; i++ {

b[i] = alphaNumChars[rand.Intn(alphaNumLen)]

}

return b

}

func closeFile(f *os.File) {

err := f.Close()

if err != nil {

log.Println(err)

}

}

func initializeFile(n string) {

log.Printf("===> START: File %s initialize task ...", n)

f, err := os.Create(n)

if err != nil {

log.Fatal(err)

}

defer closeFile(f)

for i := 1; i <= maxLines; i++ {

off := int64(i-1) * maxSize

_, err = f.Seek(off, 0)

if err != nil {

log.Fatal(err)

}

b := randomString(maxSize)

_, err = f.Write(b)

if err != nil {

log.Fatal(err)

}

}

err = f.Sync()

if err != nil {

log.Fatal(err)

}

log.Printf("===> END: File %s initialize task ...", n)

}

func fileReaderTask(n string, wg *sync.WaitGroup) {

log.Printf("===> START: File %s reader task ...", n)

f, err := os.Open(n)

if err != nil {

log.Fatal(err)

}

defer closeFile(f)

for i := 1; i <= maxCount; i++ {

off := int64(rand.Intn(maxLines-1)) * maxSize

_, err = f.Seek(off, 0)

if err != nil {

log.Fatal(err)

}

b := make([]byte, maxSize)

_, err = f.Read(b)

if err != nil {

log.Fatal(err)

}

if i%1000 == 0 {

time.Sleep(maxSleep * time.Millisecond)

}

}

wg.Done()

log.Printf("===> END: File %s reader task ...", n)

}

func fileWriterTask(n string, wg *sync.WaitGroup) {

log.Printf("===> START: File %s writer task ...", n)

f, err := os.OpenFile(n, os.O_RDWR|os.O_SYNC, 0665)

if err != nil {

log.Fatal(err)

}

defer closeFile(f)

for i := 1; i <= maxCount; i++ {

off := int64(rand.Intn(maxLines-1)) * maxSize

_, err = f.Seek(off, 0)

if err != nil {

log.Fatal(err)

}

b := randomString(maxSize)

_, err = f.Write(b)

if err != nil {

log.Fatal(err)

}

if i%1000 == 0 {

time.Sleep(maxSleep * time.Millisecond)

}

}

wg.Done()

log.Printf("===> END: File %s writer task ...", n)

}

func main() {

rand.Seed(1001)

for _, n := range fileNames {

initializeFile(n)

}

time.Sleep(5 * time.Second)

var wg sync.WaitGroup

wg.Add(maxTasks)

for _, n := range fileNames {

go fileReaderTask(n, &wg)

}

for _, n := range fileNames {

go fileWriterTask(n, &wg)

}

wg.Wait()

}

In terminal T1, execute the following command:

$ sudo biolatency-bpfcc 5 50

The above command indicates display the histogram every 5 seconds (interval) and repeat this operation 50 times (count).

The following would be a typical output:

Tracing block device I/O... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 1 |******************** |

64 -> 127 : 2 |****************************************|

128 -> 255 : 0 | |

256 -> 511 : 2 |****************************************|

512 -> 1023 : 0 | |

1024 -> 2047 : 2 |****************************************|

2048 -> 4095 : 2 |****************************************|

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 1 |** |

32 -> 63 : 2 |***** |

64 -> 127 : 3 |******* |

128 -> 255 : 10 |************************* |

256 -> 511 : 0 | |

512 -> 1023 : 16 |****************************************|

1024 -> 2047 : 2 |***** |

2048 -> 4095 : 3 |******* |

In terminal T2, execute the following commands:

$ go build

$ ./StressDisk

The following would be a typical output:

2022/01/15 14:32:08 ===> START: File /tmp/FILE_1.dat initialize task ... 2022/01/15 14:32:08 ===> END: File /tmp/FILE_1.dat initialize task ... 2022/01/15 14:32:08 ===> START: File /tmp/FILE_2.dat initialize task ... 2022/01/15 14:32:08 ===> END: File /tmp/FILE_2.dat initialize task ... 2022/01/15 14:32:08 ===> START: File /tmp/FILE_3.dat initialize task ... 2022/01/15 14:32:08 ===> END: File /tmp/FILE_3.dat initialize task ... 2022/01/15 14:32:08 ===> START: File /tmp/FILE_4.dat initialize task ... 2022/01/15 14:32:09 ===> END: File /tmp/FILE_4.dat initialize task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_2.dat reader task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_4.dat writer task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_3.dat reader task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_2.dat writer task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_1.dat writer task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_3.dat writer task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_1.dat reader task ... 2022/01/15 14:32:14 ===> START: File /tmp/FILE_4.dat reader task ...

With the stress running on terminal T2, the following would be the output on the terminal T1:

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 627 |************* |

32 -> 63 : 1889 |****************************************|

64 -> 127 : 93 |* |

128 -> 255 : 18 | |

256 -> 511 : 10 | |

512 -> 1023 : 1100 |*********************** |

1024 -> 2047 : 14 | |

2048 -> 4095 : 6 | |

4096 -> 8191 : 1 | |

8192 -> 16383 : 2 | |

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 3124 |************* |

32 -> 63 : 9104 |****************************************|

64 -> 127 : 234 |* |

128 -> 255 : 0 | |

256 -> 511 : 2 | |

512 -> 1023 : 5151 |********************** |

1024 -> 2047 : 2 | |

2048 -> 4095 : 25 | |

4096 -> 8191 : 1 | |

Notice how the disk I/O latencies have changed due to the stress.

A given computing system has a certain amount of CPUs that are available for the applications running on them to use. There are situations when there are more number of applications running on the system compete for the available processors OR vice versa. The cpudist-bpfcc tool traces the CPU usage and displays how many tasks used the CPU and for how long as a histogram, which provides valuable insights related to the CPU workloads. More interestingly, one can use this tool to target a specific process on the system to understand its CPU usage.

The following is the Go code listing StressCPU that will be used to stress the CPU by doing factorial computations using big integers:

/*

* Description: Simple Go program used for CPU stress

* Author: Bhaskar S

* Date: 01/15/2022

* Blog: https://www.polarsparc.com

*/

package main

import (

"log"

"math/big"

"sync"

)

const maxCount = 50000

const maxNum = 2500

const maxTasks = 16

func bigIntegerFactorial(id int, wg *sync.WaitGroup) {

log.Printf("===> START: CPU task %d ...", id)

for i := 1; i <= maxCount; i++ {

bi := big.NewInt(1)

for j := 1; j <= maxNum; j++ {

bi.Mul(bi, big.NewInt(int64(j)))

}

}

log.Println("===> END: CPU task %d ...", id)

wg.Done()

}

func main() {

var wg sync.WaitGroup

wg.Add(maxTasks)

for i := 1; i <= maxTasks; i++ {

go bigIntegerFactorial(i, &wg)

}

wg.Wait()

}

In terminal T2, execute the following commands:

$ go build

$ ./StressCPU

The following would be a typical output:

2022/01/15 15:13:12 ===> START: CPU task 16 ... 2022/01/15 15:13:12 ===> START: CPU task 4 ... 2022/01/15 15:13:12 ===> START: CPU task 1 ... 2022/01/15 15:13:12 ===> START: CPU task 3 ... 2022/01/15 15:13:12 ===> START: CPU task 6 ... 2022/01/15 15:13:12 ===> START: CPU task 2 ... 2022/01/15 15:13:12 ===> START: CPU task 7 ... 2022/01/15 15:13:12 ===> START: CPU task 5 ... 2022/01/15 15:13:12 ===> START: CPU task 9 ... 2022/01/15 15:13:12 ===> START: CPU task 12 ... 2022/01/15 15:13:12 ===> START: CPU task 10 ... 2022/01/15 15:13:12 ===> START: CPU task 14 ... 2022/01/15 15:13:12 ===> START: CPU task 8 ... 2022/01/15 15:13:12 ===> START: CPU task 13 ... 2022/01/15 15:13:12 ===> START: CPU task 11 ... 2022/01/15 15:13:12 ===> START: CPU task 15 ...

In terminal T1, execute the following command:

$ PID=`ps -fu polarsparc | grep StressCPU | grep -v grep | awk '{ print $2 }'`; sudo cpudist-bpfcc -p $PID 5 50

The -p option in the above command is more specifically targetting the StressCPU java process on the system.

The following would be a typical output:

Tracing on-CPU time... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 731 |******************** |

4 -> 7 : 845 |*********************** |

8 -> 15 : 222 |****** |

16 -> 31 : 291 |******** |

32 -> 63 : 431 |*********** |

64 -> 127 : 1444 |****************************************|

128 -> 255 : 859 |*********************** |

256 -> 511 : 1012 |**************************** |

512 -> 1023 : 1311 |************************************ |

1024 -> 2047 : 181 |***** |

2048 -> 4095 : 164 |**** |

4096 -> 8191 : 19 | |

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 680 |******************** |

4 -> 7 : 821 |************************ |

8 -> 15 : 224 |****** |

16 -> 31 : 230 |****** |

32 -> 63 : 412 |************ |

64 -> 127 : 1213 |************************************ |

128 -> 255 : 897 |*************************** |

256 -> 511 : 947 |**************************** |

512 -> 1023 : 1319 |****************************************|

1024 -> 2047 : 160 |**** |

2048 -> 4095 : 147 |**** |

4096 -> 8191 : 19 | |

Often times, system adminstrators monitor system(s) to monitor what proceses are being started on system(s). The execsnoop-bpfcc tool monitors and displays any new process execution on the system. It can help identify any unexpected process(es) that are starting and impacting the system.

In terminal T1, execute the following command:

$ sudo execsnoop-bpfcc -T

The -T option in the above command indicates that the output include the time in HH:MM:SS format.

The following would be a typical output:

TIME PCOMM PID PPID RET ARGS

In terminal T2, execute the following commands one after the other:

$ clear

$ ping -c 3 google.com

The following would be the output on the terminal T1:

15:29:51 clear 37289 26765 0 /usr/bin/clear 15:30:03 ping 37292 26765 0 /usr/bin/ping -c 3 google.com

The opensnoop-bpfcc tool monitors and displays any new files opened (along with the full path and other information) on the system. One can use this tool on a specific process to understand what files are being opened.

In terminal T2, execute the following commands:

$ ./StressDisk

The results would be similar to that of the Output.3 from above.

In terminal T1, execute the following command:

$ PID=`ps -fu polarsparc | grep StressDisk | grep -v grep | awk '{ print $2 }'`; sudo opensnoop-bpfcc -T -p $PID

The following would be a typical output:

7.888828000 43563 StressDisk 68 0 /tmp/FILE_1.dat 7.889653000 43563 StressDisk 69 0 /tmp/FILE_4.dat 7.890878000 43563 StressDisk 70 0 /tmp/FILE_3.dat 7.891787000 43563 StressDisk 71 0 /tmp/FILE_2.dat 7.892661000 43563 StressDisk 72 0 /tmp/FILE_4.dat 7.893302000 43563 StressDisk 73 0 /tmp/FILE_3.dat 7.894574000 43563 StressDisk 74 0 /tmp/FILE_2.dat 7.895419000 43563 StressDisk 75 0 /tmp/FILE_1.dat

The tcplife-bpfcc tool monitors and displays TCP network connections that open and/or close. One can use it to identify short-leved connections that come and go.

The following is the Go code listing DemoHttpServer that will be used to start a HTTP server on port 8080:

/*

* Description: Simple Go HTTP server

* Author: Bhaskar S

* Date: 01/15/2022

* Blog: https://www.polarsparc.com

*/

package main

import (

"fmt"

"log"

"net"

"net/http"

)

func indexHandler(w http.ResponseWriter, _ *http.Request) {

log.Println("===> Received HTTP request on root")

_, err := fmt.Fprintf(w, "Hello eBPF !!!")

if err != nil {

log.Fatal(err)

}

}

func main() {

addrs, err := net.InterfaceAddrs()

if err != nil {

log.Fatal(err)

}

var host *net.IPNet

for _, a := range addrs {

if ip, ok := a.(*net.IPNet); ok && !ip.IP.IsLoopback() {

if ip.IP.To4() != nil {

host = ip

break

}

}

}

http.HandleFunc("/", indexHandler)

log.Printf("===> Starting DemoHttpServer at %s:8080", host.IP.String())

err = http.ListenAndServe(host.IP.String()+":8080", nil)

if err != nil {

log.Fatal(err)

}

}

In terminal T1, execute the following command:

$ sudo tcplife-bpfcc -T

The -T option in the above command indicates that the output include the time in HH:MM:SS format.

The following would be a typical output:

TIME PID COMM LADDR LPORT RADDR RPORT TX_KB RX_KB MS

In terminal T2, execute the following commands:

$ go build

$ ./DemoHttpServer

The following would be a typical output:

2022/01/15 20:10:26 ===> Starting DemoHttpServer at 192.168.1.53:8080

Open another new terminal and execute the following commands:

$ curl http://127.0.0.1:8080/

$ curl http://192.168.1.51:8080/

The following would be the output on the terminal T1:

20:10:35 45585 curl 127.0.0.1 52108 127.0.0.1 8080 0 0 0.06 20:10:39 45586 curl 192.168.1.53 40932 192.168.1.53 8080 0 0 52.31

bpftrace

bpftrace is a high level scripting langauge that leverages the native LLVM compiler as a backend to compile the scripts to eBPF bytecode and makes use of BCC library to interface with eBPF. We will look only at few scripts in this article.

To get a list of all the probes supported by bpftrace, execute the following command in terminal T1:

$ sudo bpftrace -l

The typical output would be very long and hence we do not show it here. In the following examples, we will focus primarily on some of the system calls.

For the first example, we desire to trace all the files that are opened by a given process. When any file is opened, they ultimately have to go through the eBPF hook (tracepoint) call sys_enter_openat. We know that most of the Linux kernel system calls have a well defined static tracepoint hooks. To find the details of the arguments of this system call tracepoint, execute the following command in terminal T1:

$ sudo bpftrace -v -l 'tracepoint:syscalls:sys_enter_openat'

The following would be the typical output:

tracepoint:syscalls:sys_enter_openat int __syscall_nr; int dfd; const char * filename; int flags; umode_t mode;

From the Output.13 above, we can identify the name of the resource through filename.

We will chose to trace the Ubuntu software updater process called mintUpdate. The PID for this process was 2421.

In terminal T1, execute the following command:

$ sudo bpftrace -e 'tracepoint:syscalls:sys_enter_openat /pid == 2421/ { printf("%s %s\n", comm, str(args->filename)); }'

The probe tracepoint:syscalls:sys_enter_openat in the above command tracks all files being opened.

The filter /pid == 2421/ only applies to our chosen process. Without this filter, the probe would apply to the entire system.

The builtin variable comm points to the process name. The argument args points to the argument structure of the specific system call. The method str() converts the provided input into a string.

Launch the software update process on the desktop and the following would be the output on the terminal T1:

mintUpdate /home/polarsparc/.icons/default/cursors/watch mintUpdate /home/polarsparc/.icons/default/index.theme mintUpdate /usr/share/icons/default/cursors/watch mintUpdate /usr/share/icons/default/index.theme mintUpdate /usr/share/pixmaps/default/cursors/watch mintUpdate /home/polarsparc/.icons/DMZ-White/cursors/watch mintUpdate /home/polarsparc/.icons/DMZ-White/index.theme mintUpdate /usr/share/icons/DMZ-White/cursors/watch mintUpdate /run/user/1000/dconf/user mintUpdate /home/polarsparc/.config/dconf/user mintUpdate /home/polarsparc/.linuxmint/mintupdate/updates.json mintUpdate /etc/apt/sources.list.d/official-package-repositories.list

For the second example, we want to trace all the process(es) created along with their arguments. Any process executed in the terminal have to go through the the eBPF hook (tracepoint) call sys_enter_execve. To find the details of the arguments of this system call tracepoint, execute the following command in terminal T1:

$ sudo bpftrace -v -l 'tracepoint:syscalls:sys_enter_execve'

The following would be the typical output:

tracepoint:syscalls:sys_enter_execve int __syscall_nr; const char * filename; const char *const * argv; const char *const * envp;

From the Output.15 above, we can identify the name of the process launched through filename and get its arguments via argv.

In terminal T1, execute the following command:

$ sudo bpftrace -e 'tracepoint:syscalls:sys_enter_execve { printf("%s => ", str(args->filename)); join(args->argv, ", "); }'

The probe tracepoint:syscalls:sys_enter_execve in the above command tracks all new process(es) being exec'd.

The builtin method join(ARRAY, DELIM) concatenates the string ARRAY separated by the specified DELIM string.

In terminal T2, execute the following command(s):

$ ping -c 3 google.com

$ clear

The following would be the output on the terminal T1:

/usr/bin/ping => ping, -c, 3, google.com /usr/bin/clear => clear

For the third example, we would like to trace the number of bytes read by all the process(es) in the system. Any type of a read operation has to complete and end in the eBPF hook (tracepoint) call sys_exit_read. To find the details of the arguments of this system call tracepoint, execute the following command in terminal T1:

$ sudo bpftrace -v -l 'sudo bpftrace -v -l 'tracepoint:syscalls:sys_exit_read''

The following would be the typical output:

tracepoint:syscalls:sys_exit_read int __syscall_nr; long ret;

From the Output.17 above, one can get the number of bytes for each read via ret.

In terminal T1, execute the following command:

$ sudo bpftrace -e 'tracepoint:syscalls:sys_exit_read /args->ret > 0/ { @[comm] = sum(args->ret); }'

The special builtin symbol @ represents the BPF map data structure, which is nothing more than an associative array (or hashtable). The square brackets [] with the variable comm indicates we are using the process name as the key in the associative array to track the bytes read.

The builtin method sum(VALUE) performs the summation with the specified VALUE.

Wait for about 10 seconds and then press CTRL-C to stop the bpftrace probe and the following would be the output on the terminal T1:

... SNIP ... @[NetworkManager]: 8 @[systemd-journal]: 8 @[ibus-extension-]: 8 @[thunderbird]: 11 @[caja]: 96 @[ibus-daemon]: 112 @[mate-terminal]: 140 @[pulseaudio]: 4281 @[code]: 305015

In the Output.18, '... SNIP ...' means we have trimmed the output to display only a handful for brevity.

For the final example, we would like to trace the time spent in the reads by all the process(es) in the system and display them as a histogram across various read sizes.

In terminal T1, execute the following command:

$ sudo bpftrace -e 'tracepoint:syscalls:sys_enter_read { @start[tid] = nsecs; } tracepoint:syscalls:sys_exit_read / @start[tid] / { @times = hist(nsecs - @start[tid]); delete(@start[tid]); }'

Notice the syntax used. One can have many probes defined in a single line just as we did above for the two tracepoints - tracepoint:syscalls:sys_enter_read and tracepoint:syscalls:sys_exit_read respectrively.

The builtin variable nsecs indicates the current time in nanoseconds. The builtin variable tid indicates the kernel thread ID. The builtin method hist(VALUE) generates a histogram on log2 scale. The builtin method delete(MAP[KEY]) deletes the value for the specified map element.

Wait for about 10 seconds and then press CTRL-C to stop the bpftrace probe and the following would be the output on the terminal T1:

@start[10633]: 8829751959197 @times: [512, 1K) 116 |@ | [1K, 2K) 3143 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@| [2K, 4K) 1356 |@@@@@@@@@@@@@@@@@@@@@@ | [4K, 8K) 182 |@@@ | [8K, 16K) 13 | | [16K, 32K) 25 | | [32K, 64K) 4 | | [64K, 128K) 1 | | [128K, 256K) 0 | | [256K, 512K) 1 | | [512K, 1M) 2 | | [1M, 2M) 1 | |

References