| PolarSPARC |

Introduction to Linear Algebra - Part 5

| Bhaskar S | 03/04/2022 |

In Part 4 of this series, we explored the concept on Eigen decomposition and the Power of a matrix. In this FINAL part, we will explore the concept of Rank of a Matrix and Singular Value Decomposition.

Rank of a Matrix

The Rank of a Matrix is a single number associate with a matrix that indicates the maximum number of Linearly Independent column or row vectors of the matrix.

If $r$ is the rank of a matrix $\textbf{A}$ of dimesnsion M x N, then $0 \le r \le min(M, N)$.

The rank of the matrix $\begin{bmatrix} 1 & 0 & 2 \\ 2 & 1 & 0 \\ 3 & 2 & 1 \end{bmatrix}$ is 3 since each of the column (or row) vectors is linearly independent, meaning, they cannot be created using a linear combination of other column (or row) vectors.

The rank of the matrix $\begin{bmatrix} 1 & 3 & 2 \\ 2 & 6 & 0 \\ 4 & 12 & 1 \end{bmatrix}$ is 2, since the second column vector is a linear combination of the first column vector.

The rank of the matrix $\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$ is 1.

The rank of the matrix $\begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$ is 0.

Singular Value Decomposition

Eigen decomposition only works for a square matrix, but in the real-world not all matrices are square; they are rectangular. This is where Singular Value Decomposition comes in handy.

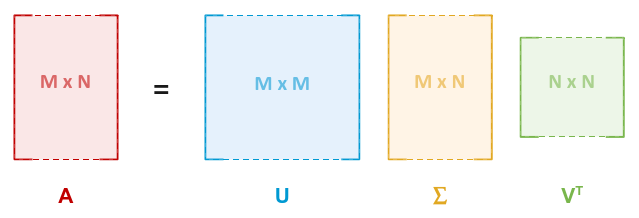

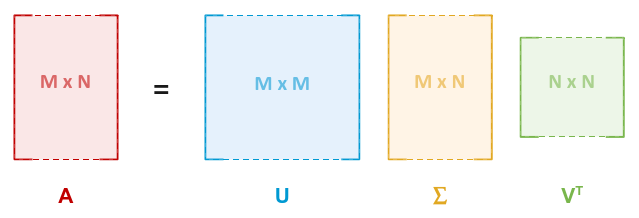

The purpose of Singular Value Decomposition (often referred to as SVD) is to decompose a matrix $\textbf{A}$ of dimension M x N into three matrices $\textbf{U}$, $\Sigma$, and $\textbf{V}^T$ such that the following condition is satisfied:

$\textbf{A} = \textbf{U} \Sigma \textbf{V}^T$

where

the matrix $\textbf{U}$, referred to as the Left Singular vectors matrix, is an ORTHOGONAL matrix of dimension M x M (corresponding to the M rows of $\textbf{A}$),

the diagonal matrix of scalars $\Sigma$, referred to as the Singular values matrix, is of dimension M x N,

and the matrix $\textbf{V}^T$, referred to as the Right Singular vectors matrix, is an ORTHOGONAL matrix of dimension N x N (corresponding to the N columns of $\textbf{A}$).

The following is a pictorial illustration of SVD:

Before we proceed with the process of computing SVD of a matrix, the following are some properties of Matrices:

For any matrix $\textbf{A}$, the transpose of its transpose, is itself. In other words, $(\textbf{A}^T)^T = \textbf{A}$

The transpose of a DIAGONAL matrix $\textbf{D}$ is itself. That is $\textbf{D}^T = \textbf{D}$

The transpose of an ORTHOGONAL matrix $\textbf{A}$ is equal to its inverse. That is $\textbf{A}^T = \textbf{A}^{-1}$

For any matrix $\textbf{A}$, the matrix multiplication with its transpose $\textbf{A}\textbf{A}^T$ or $\textbf{A}^T \textbf{A}$ is a SQUARE matrix

Given matrices $\textbf{A}$, $\textbf{B}$, and $\textbf{C}$, the transpose of their product is equal to the product of the transpose of each of the individual matrices in the REVERSE order. In other words, $(\textbf{A}\textbf{B}\textbf{C})^T = \textbf{C}^T \textbf{B}^T \textbf{A}^T$

Given $\textbf{A} = \textbf{U} \Sigma \textbf{V}^T$.

Then, $\textbf{A}^T\textbf{A} = (\textbf{U} \Sigma \textbf{V}^T)^T \textbf{U} \Sigma \textbf{V}^T$

That is, $\textbf{A}^T\textbf{A} = (\textbf{V}^T)^T \Sigma^T \textbf{U}^T \textbf{U} \Sigma \textbf{V}^T = \textbf{V} \Sigma \textbf{U}^T \textbf{U} \Sigma \textbf{V}^T$

Since the matrix $\textbf{U}$ is orthogonal, $\textbf{U}^T\textbf{U} = \textbf{I}$

That is, $\textbf{A}^T\textbf{A} = \textbf{V} \Sigma \textbf{I} \Sigma \textbf{V}^T$

Or, $\textbf{A}^T\textbf{A} = \textbf{V} \Sigma^2 \textbf{V}^T$

Notice that the right-hand size of the above equation is that of eigen decomposition.

Therefore, the matrix $\Sigma^2$ is the diagonal matrix of SQUARED eigenvalues of the matrix $\textbf{A}^T\textbf{A}$ and the matrix $\textbf{V}$ is the matrix of the eigenvectors of the matrix $\textbf{A}^T\textbf{A}$.

We now have the matrices $\textbf{V}$ and $\Sigma$. The only missing piece is the matrix $\textbf{U}$.

Next, $\textbf{A}\textbf{A}^T = \textbf{U} \Sigma \textbf{V}^T (\textbf{U} \Sigma \textbf{V}^T)^T$

That is, $\textbf{A}\textbf{A}^T = \textbf{U} \Sigma \textbf{V}^T (\textbf{V}^T)^T \Sigma^T \textbf{U}^T = \textbf{U} \Sigma \textbf{V}^T \textbf{V} \Sigma \textbf{U}^T$

Since the matrix $\textbf{V}$ is orthogonal, $\textbf{V}^T\textbf{V} = \textbf{I}$

That is, $\textbf{A}\textbf{A}^T = \textbf{U} \Sigma \textbf{I} \Sigma \textbf{U}^T$

Or, $\textbf{A}\textbf{A}^T = \textbf{U} \Sigma^2 \textbf{U}^T$

Notice that the right-hand size of the above equation is that of eigen decomposition. The matrix $\textbf{U}$ is the matrix of the eigenvectors of the matrix $\textbf{A}\textbf{A}^T$.

With this, we now have all the 3 matrices of SVD - the left singular vectors matrix $\textbf{U}$, the singular values matrix $\Sigma$, and the right singular vectors matrix $\textbf{V}^T$.

Let us look at an example to understand singular value decomposition.

| Example-1 | Find the three SVD matrices $\textbf{U}$, $\Sigma$, and $\textbf{V}^T$ for the matrix: $\textbf{A} = \begin{bmatrix} 1 & -1 & 3 \\ 3 & 1 & 1 \end{bmatrix}$ |

|---|---|

|

Given $\textbf{A} = \begin{bmatrix} 1 & -1 & 3 \\ 3 & 1 & 1 \end{bmatrix}$. Then, $\textbf{A}^T = \begin{bmatrix} 1 & 3 \\ -1 & 1 \\ 3 & 1 \end{bmatrix}$ Next, $\textbf{A}^T\textbf{A} = \begin{bmatrix} 1 & 3 \\ -1 & 1 \\ 3 & 1 \end{bmatrix} \begin{bmatrix} 1 & -1 & 3 \\ 3 & 1 & 1 \end{bmatrix}$ That is, $\textbf{A}^T\textbf{A} = \begin{bmatrix} 1+9 & -1+3 & 3+3 \\ -1+3 & 1+1 & -3+1 \\ 3+3 & -3+1 & 9+1 \end{bmatrix} = \begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix}$ To find the eigenvalues for the matrix $\textbf{A}^T\textbf{A}$, we need to solve for $\lvert \textbf{A}^T\textbf{A} - \lambda\textbf{I}\rvert = 0$. That is, $\begin{vmatrix} \begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix} - \lambda \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \end{vmatrix} = 0$ Or, $\begin{vmatrix} \begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix} - \begin{bmatrix} \lambda & 0 & 0 \\ 0 & \lambda & 0 \\ 0 & 0 & \lambda \end{bmatrix} \end{vmatrix} = 0$ Or, $\begin{vmatrix} 10-\lambda & 2 & 6 \\ 2 & 2-\lambda & -2 \\ 6 & -2 & 10-\lambda \end{vmatrix} = 0$ We know the determinant of $\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = a \begin{vmatrix} e & f \\ h & i \end{vmatrix} - b \begin{vmatrix} d & f \\ g & i \end{vmatrix} + c \begin{vmatrix} d & e \\ g & h \end{vmatrix} = a (ei - fh) - b (di - fg) + c (dh - eg)$ That is, $(10 - \lambda)[(2-\lambda)(10-\lambda)-4] - 2[2(10-\lambda)+12] + 6[-4-6(2-\lambda)] = 0$ Or, $(10 - \lambda)[(16-12\lambda+{\lambda}^2] - 2[-2\lambda+32] + 6[6\lambda-16] = 0$ Or, ${\lambda}^3 - 22{\lambda}^2 = 96\lambda = 0$ The above cubic equation can be written as $\lambda({\lambda}^2 - 22\lambda + 96) = 0$ That is, $\lambda(\lambda - 6)(\lambda - 16) = 0$ Hence, the eigenvalues for the matrix $\textbf{A}^T\textbf{A}$ are $\lambda = 16$, $\lambda = 6$, and $\lambda = 0$ in the descending order. Note that the eigenvalues of the matrix $\textbf{A}^T\textbf{A}$ are SQUARED values. Hence, to find the diagonal matrix of the singular values $\Sigma$, we need to find their respective square roots. In other words, $\Sigma = \begin{bmatrix} \sqrt{16} & 0 & 0 \\ 0 & \sqrt{6} & 0 \end{bmatrix}$ ** FACT **The number of non-zero singular values equals the rank of the matrix. Hence the rank of the matrix A is 2. Therefore, the diagonal matrix $\Sigma$ for the SVD is $\begin{bmatrix} 4 & 0 & 0 \\ 0 & 0.2449 & 0 \end{bmatrix}$ We also know the relationship between eigenvalues and eigenvectors: $\textbf{A}\vec{v} = \lambda\vec{v}$ For $\lambda = 16$, $\begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = 16\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ That is, $\begin{align*} & 10x + 2y + 6z = 16x \\ & 2x + 2y - 2z = 16y \\ & 6x - 2y + 10z = 16z \end{align*}$ Rearranging terms, $\begin{align*} & 3x - y - 3z = 0 \\ & x - 7y - z = 0 \\ & 3x - y - 3z = 0 \end{align*}$ Solving for equations, we get $y = 0$ By substitution, we get $x = z$. Choosing $z = 1$, we get $x = 1$, and therefore the eigenvector would be $\vec{v} = \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$ Converting the eigenvector to a unit vector, we get the eigenvector would be $\vec{v} = \begin{bmatrix} 0.7071 \\ 0 \\ 0.7071 \end{bmatrix}$ Therefore, for the eigenvalue $\lambda = 16$, the corresponding eigenvector is $\begin{bmatrix} 0.7071 \\ 0 \\ 0.7071 \end{bmatrix}$ For $\lambda = 6$, $\begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = 6\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ That is, $\begin{align*} & 10x + 2y + 6z = 6x \\ & 2x + 2y - 2z = 6y \\ & 6x - 2y + 10z = 6z \end{align*}$ Rearranging terms, $\begin{align*} & 2x + y + 3z = 0 \\ & x - 2y - z = 0 \\ & 3x - y + 2z = 0 \end{align*}$ Solving for equations, we get $x = y$ Choosing $y = 1$, we get $x = 1$, and by substitution, we get $z = -1$, and therefore the eigenvector would be $\vec{v} = \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix}$ Converting the eigenvector to a unit vector, we get the eigenvector would be $\vec{v} = \begin{bmatrix} 0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix}$ Therefore, for the eigenvalue $\lambda = 6$, the corresponding eigenvector is $\begin{bmatrix} 0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix}$ Finally, for $\lambda = 0$, $\begin{bmatrix} 10 & 2 & 6 \\ 2 & 2 & -2 \\ 6 & -2 & 10 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = 0\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ That is, $\begin{align*} & 10x + 2y + 6z = 0 \\ & 2x + 2y - 2z = 0 \\ & 6x - 2y + 10z = 0 \end{align*}$ Rearranging terms, $\begin{align*} & 5x + y + 3z = 0 \\ & x + y - z = 0 \\ & 3x - y + 5z = 0 \end{align*}$ Solving for equations, we get $x = -\frac{1}{2}y$ Choosing $y = -2$, we get $x = 1$, and by substitution, we get $z = -1$, and therefore the eigenvector would be $\vec{v} = \begin{bmatrix} 1 \\ -2 \\ -1 \end{bmatrix}$ Converting the eigenvector to a unit vector, we get the eigenvector would be $\vec{v} = \begin{bmatrix} 0.4082 \\ -0.8165 \\ -0.4082 \end{bmatrix}$ Therefore, for the eigenvalue $\lambda = 0$, the corresponding eigenvector is $\begin{bmatrix} 0.4082 \\ -0.8165 \\ -0.4082 \end{bmatrix}$ The matrix $\textbf{V}$ for the SVD of $\textbf{A}$ is the concatenation of the eigenvectors of $\textbf{A}^T \textbf{A}$ = $\begin{bmatrix} 0.7071 & 0.5774 & 0.4082 \\ 0 & 0.5774 & -0.8165 \\ 0.7071 & -0.5774 & -0.4082 \end{bmatrix}$ Therefore, The matrix $\textbf{V}^T$ for the SVD is $\begin{bmatrix} 0.7071 & 0 & 0.7071 \\ 0.5774 & 0.5774 & -0.5774 \\ 0.4082 & -0.8165 & -0.4082 \end{bmatrix}$ Next, $\textbf{A}\textbf{A}^T = \begin{bmatrix} 1 & -1 & 3 \\ 3 & 1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 3 \\ -1 & 1 \\ 3 & 1 \end{bmatrix}$ That is, $\textbf{A}\textbf{A}^T = \begin{bmatrix} 1+1+9 & 3-1+3 \\ 3-1+3 & 9+1+1 \end{bmatrix} = \begin{bmatrix} 11 & 5 \\ 5 & 11 \end{bmatrix}$ To find the eigenvalues for the matrix $\textbf{A}\textbf{A}^T$, we need to solve for $\lvert \textbf{A}\textbf{A}^T - \lambda\textbf{I}\rvert = 0$. That is, $\begin{vmatrix} \begin{bmatrix} 11 & 5 \\ 5 & 11 \end{bmatrix} - \lambda \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \end{vmatrix} = 0$ Or, $\begin{vmatrix} \begin{bmatrix} 11 & 5 \\ 5 & 11 \end{bmatrix} - \begin{bmatrix} \lambda & 0 \\ 0 & \lambda \end{bmatrix} \end{vmatrix} = 0$ Or, $\begin{vmatrix} 11-\lambda & 5 \\ 5 & 11-\lambda \end{vmatrix} = 0$ We know the determinant of $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$ That is, $(11 - \lambda)(11 - \lambda) - 25 = 0$ Or, $121 -22\lambda + {\lambda}^2 - 25 = 0$ Or, ${\lambda}^2 - 22\lambda + 96 = 0$ The above quadratic equation can be written as $(\lambda - 6)(\lambda - 16) = 0$ Hence, the eigenvalues are $\lambda = 16$ and $\lambda = 6$ in the descending order. We also know the relationship between eigenvalues and eigenvectors: $\textbf{A}\vec{v} = \lambda\vec{v}$ For $\lambda = 16$, $\begin{bmatrix} 11 & 5 \\ 5 & 11 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = 16\begin{bmatrix} x \\ y \end{bmatrix}$ That is, $\begin{align*} & 11x + 5y = 16x \\ & 5x + 11y = 16y \end{align*}$ Or, $x = y$ Choosing $y = 1$, we get $x = 1$, and therefore the eigenvector would be $\vec{v} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$ Converting the eigenvector to a unit vector, we get the eigenvector would be $\vec{v} = \begin{bmatrix} 0.7071 \\ 0.7071 \end{bmatrix}$ Therefore, for the eigenvalue $\lambda = 16$, the corresponding eigenvector is $\begin{bmatrix} 0.7071 \\ 0.7071 \end{bmatrix}$ Similarly, for $\lambda = 6$, $\begin{bmatrix} 11 & 5 \\ 5 & 11 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = 6\begin{bmatrix} x \\ y \end{bmatrix}$ That is, $\begin{align*} & 11x + 5y = 6x \\ & 5x + 11y = 6y \end{align*}$ Or, $x = -y$ Choosing $y = -1$, we get $x = 1$, and therefore the eigenvector would be $\vec{v} = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$ Converting the eigenvector to a unit vector, we get the eigenvector would be $\vec{v} = \begin{bmatrix} 0.7071 \\ -0.7071 \end{bmatrix}$ Therefore, for the eigenvalue $\lambda = 4$, the corresponding eigenvector is $\begin{bmatrix} 0.7071 \\ -0.7071 \end{bmatrix}$ The matrix $\textbf{U}$ for the SVD of $\textbf{A}$ is the concatenation of the eigenvectors of $\textbf{A} \textbf{A}^T = \begin{bmatrix} 0.7071 & 0.7071 \\ 0.7071 & -0.7071 \end{bmatrix}$ SOLUTION: The 3 matrices for the SVD of matrix $\textbf{A}$ are as follows: $\textbf{U} = \begin{bmatrix} 0.7071 & 0.7071 \\ 0.7071 & -0.7071 \end{bmatrix}$ $\Sigma = \begin{bmatrix} 4 & 0 & 0 \\ 0 & 0.2449 & 0 \end{bmatrix}$ $\textbf{V}^T = \begin{bmatrix} 0.7071 & 0 & 0.7071 \\ 0.5774 & 0.5774 & -0.5774 \\ 0.4082 & -0.8165 & -0.4082 \end{bmatrix}$ |

|

To get a better understanding of SVD, let us break down $\textbf{A} = \textbf{U} \Sigma \textbf{V}^T$.

One can visualize the matrix $\textbf{U}$ of dimension M x M as a concatenation of N column vectors $\vec{u}$. In other words $\textbf{U} = \begin{bmatrix} \vec{u}_1 \: \vert \: \vec{u}_2 \: \vert \: ... \: \vert \: \vec{u}_N \end{bmatrix}$.

Similarly, the matrix $\textbf{V}^T$ of dimension N x N as a concatenation of N column vectors $\vec{v}^T$. In other words, $\textbf{V}^T = \begin{bmatrix} \vec{v}^T{_1} \: \vert \: \vec{v}^T{_2} \: \vert \: ... \: \vert \: \vec{v}^T{_M} \end{bmatrix}$.

The matrix $\Sigma$ is of the same dimension as $\textbf{A}$ M x N and is the diagonal matrix of singular values. In other words, $\Sigma = \begin{bmatrix} \sigma_1 & 0 & ... & 0 \\ 0 & \sigma_2 & ... & 0 \\ 0 & 0 & \sigma_N & 0 \end{bmatrix}.$

Then, $\textbf{A} = \textbf{U} \Sigma \textbf{V}^T = \begin{bmatrix} \vec{u}_1 \: \vert \: \vec{u}_2 \: \vert \: ... \: \vert \: \vec{u}_N \end{bmatrix} \begin{bmatrix} \sigma_1 & 0 & ... & 0 \\ 0 & \sigma_2 & ... & 0 \\ 0 & 0 & \sigma_N & 0 \end{bmatrix} \begin{bmatrix} \vec{v}^T{_1} \: \vert \: \vec{v}^T{_2} \: \vert \: ... \: \vert \: \vec{v}^T{_M} \end{bmatrix} = \vec{u}_1\sigma_1\vec{v}^T{_1} + \vec{u}_2\sigma_2\vec{v}^T{_2} + ... + \vec{u}_N\sigma_N\vec{v}^T{_N}$.

Or, $\textbf{A} = \sum_{i=1}^{N} \vec{u}_i\sigma_i\vec{v}^T{_i}$.

Intuitively, one can think of the matrix $\textbf{A}$ as a combination of a series of smaller atomic matrices $\vec{u}_i \sigma_i \vec{v}^T{_i}$.

In the real-world use-cases, what this means is that for a very large dimension matrix, if its rank is $r$, then we will only need upto the $r$ number of those smaller atomic vectors to recreate the original matrix. This is akin to compression (reduction in dimension). For example, one could use SVD for compression of media files such as audio, images, video, etc.

Here is the link to the Jupyter Notebook that provides an hands-on demo for the entire series on Linear Algebra.

References

Introduction to Linear Algebra - Part 4

Introduction to Linear Algebra - Part 3